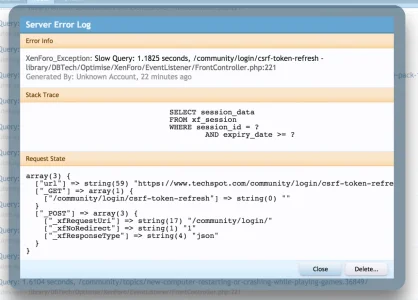

Error Info

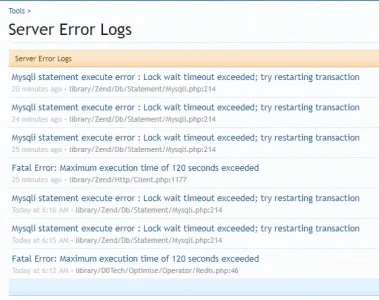

Zend_Db_Statement_Mysqli_Exception: Mysqli statement execute error : Lock wait timeout exceeded; try restarting transaction - library/Zend/Db/Statement/Mysqli.php:214

Generated By: Unknown Account, 1 minute ago

Stack Trace

```
#0 /library/Zend/Db/Statement.php(297): Zend_Db_Statement_Mysqli->_execute(Array)
#1 /library/Zend/Db/Adapter/Abstract.php(479): Zend_Db_Statement->execute(Array)
#2 /library/XenForo/Model/DataRegistry.php(164): Zend_Db_Adapter_Abstract->query('\r\n\t\t\tINSERT INT...', Array)
#3 /library/DBTech/Optimise/XenForo/Model/DataRegistry.php(124): XenForo_Model_DataRegistry->set('PrefixesThreads...', Array)
#4 /library/PrefixForumListing/Model/PrefixListing.php(115): DBTech_Optimise_XenForo_Model_DataRegistry->set('PrefixesThreads...', Array)
#5 /library/PrefixForumListing/Extend/ControllerPublic/Forum.php(159): PrefixForumListing_Model_PrefixListing->updateThreadCountCache(34, Array)
#6 /library/Andy/NewContentLimit/ControllerPublic/Forum.php(8): PrefixForumListing_Extend_ControllerPublic_Forum->actionForum()
#7 /library/CTA/FeaturedThreads/ControllerPublic/Forum.php(215): Andy_NewContentLimit_ControllerPublic_Forum->actionForum()
#8 /library/DailyStats/ControllerPublic/Forum.php(53): CTA_FeaturedThreads_ControllerPublic_Forum->actionForum()
#9 /library/SV/MultiPrefix/XenForo/ControllerPublic/Forum.php(20): DailyStats_ControllerPublic_Forum->actionForum()
#10 /library/XenForo/FrontController.php(369): SV_MultiPrefix_XenForo_ControllerPublic_Forum->actionForum()
#11 /library/XenForo/FrontController.php(152): XenForo_FrontController->dispatch(Object(XenForo_RouteMatch))
#12 /index.php(13): XenForo_FrontController->run()
#13 {main}
```

Request State

```
array(3) {
  ["url"] => string(77) "https://domain.com/forums/forum-software-usage.34/?prefix_id=14"
  ["_GET"] => array(2) {
    ["/forums/forum-software-usage_34/"] => string(0) ""
    ["prefix_id"] => string(2) "14"
  }
  ["_POST"] => array(0) {
  }
}
```
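The MySQL message above already names the usual remedy: restart the transaction. As a rough illustration of that advice, here is a generic retry-with-backoff wrapper in Python. It is only a sketch under stated assumptions: `DatabaseError` is a hypothetical exception carrying the MySQL error code, `run_with_retry` and `flaky_insert` are illustrative names, and none of this is XenForo or Zend_Db API. The one real detail is that 1205 is MySQL's error code for "Lock wait timeout exceeded".

```python
import random
import time

# MySQL error code for "Lock wait timeout exceeded; try restarting transaction".
ER_LOCK_WAIT_TIMEOUT = 1205


class DatabaseError(Exception):
    """Hypothetical stand-in for a driver exception that exposes the MySQL error code."""

    def __init__(self, code, message):
        super().__init__(message)
        self.code = code


def run_with_retry(operation, max_attempts=3, base_delay=0.05, sleep=time.sleep):
    """Call `operation`, re-running it only when it fails with a lock wait timeout.

    `operation` should perform the whole transaction, so each retry starts it fresh.
    Any other error, or a timeout on the final attempt, is re-raised unchanged.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except DatabaseError as exc:
            if exc.code != ER_LOCK_WAIT_TIMEOUT or attempt == max_attempts:
                raise
            # Exponential backoff with jitter before restarting the transaction.
            sleep(base_delay * (2 ** (attempt - 1)) * (1 + random.random()))


# Usage: a hypothetical insert that times out twice, then succeeds.
attempts = []


def flaky_insert():
    attempts.append(1)
    if len(attempts) < 3:
        raise DatabaseError(ER_LOCK_WAIT_TIMEOUT, "Lock wait timeout exceeded")
    return "ok"


result = run_with_retry(flaky_insert, sleep=lambda _: None)
print(result)  # ok
```

Retrying only on code 1205 keeps genuine failures (for example a duplicate-key error) visible instead of masking them behind repeated attempts.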