@Anthony Parsons

Just from the conversations via email, have you tried dedos at all?

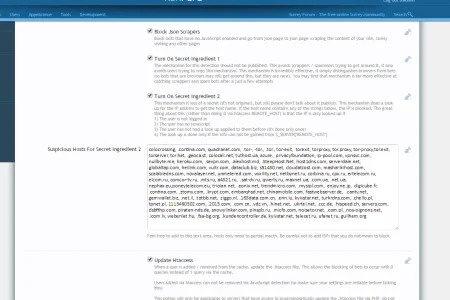

It comes with an option for stopping suspicious hosts. I think I've prepopulated it with quite a few, but here are some of them, picked up by other methods that dedos uses:

colocrossing, .contina.com, quadranet.com, .tor-, -tor., .tor., tor-exit, torexit, torproxy, tor.proxy, tor-proxy, tor.exit, torserver, tor.het, .geoca.st, colocall.net, tuthost.ua, azure., privacyfoundation, ip-pool.com, vpnsvc.com, nullbyte.me, heroku.com, .sevpn.com, .alexhost.md, .SteepHost.Net, host1dns.com, serverdale.net, globaltap.com, heilink.com, vultr.com, dataclub.biz, s51430.net, cloudatcost.com, masharikihost.com, scalabledns.com, novalayer.net, unmetered.com, voxility.net, netbynet.ru, corbina.ru, cpx.ru, ertelecom.ru, elcom.ru, comcor-tv.ru, .mts.ru, a4321.ru, .sat-dv.ru, qwerty.ru, maxnet.ua, .com.ua, .net.ua, nephax.eu, poneytelecom.eu, triolan.net, .eonix.net, trendmicro.com, .mysipl.com, enjoy.ne.jp, .digicube.fr, .ztomy.com, .krypt.com, embarqhsd.net, chinamobile.com, fastwebserver.de, .cantv.net, gemwallet.biz, .net.il, .totbb.net, ziggo.nl, .163data.com.cn, .enn.lu, kyivstar.net, turkrdns.com, .chello.pl, tpnet.pl, 1113460302.com, .2015.com, .com.cn, .vdc.vn, .hinet.net, .ukrtel.net, .ccc.de, .hispeed.ch, servers.com, dsbfthp.com, piraten-nds.de, snowylinker.com, pinspb.ru, .micfo.com, noisetor.net, .com.pl, .nos-oignons.net, .icom.lv, webenlet.hu, .fsa-bg.org, .kundencontroller.de, telecet.ru, ufanet.ru, guilhem.org

Most of them are hosting providers that I use myself for automation (white-hat automation, for my own site). If a user is browsing from a server, for instance Amazon Web Services, chances are they are using automated methods; they're definitely not a typical, non-bot user.
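As a rough illustration of how host-based blocking like this generally works (this is not dedos's code, just a sketch), the Python below does a reverse-DNS (PTR) lookup on the visitor's IP and checks whether the hostname contains any fragment from a list. `SUSPICIOUS_HOST_FRAGMENTS` is a short excerpt of the list above, and the function names are hypothetical; dedos's real matching rules may differ.

```python
import socket
from typing import Iterable, Optional

# Illustrative excerpt of the host fragments listed above (an assumption:
# dedos's actual list and matching rules may differ).
SUSPICIOUS_HOST_FRAGMENTS = [
    "colocrossing", ".tor-", "-tor.", ".tor.", "tor-exit", "torexit",
    "vultr.com", "heroku.com", "azure.", ".SteepHost.Net",
]


def reverse_dns(ip: str) -> Optional[str]:
    """Return the PTR hostname for an IP address, or None if the lookup fails."""
    try:
        hostname, _aliases, _addresses = socket.gethostbyaddr(ip)
        return hostname
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return None


def is_suspicious_host(
    hostname: Optional[str],
    fragments: Iterable[str] = SUSPICIOUS_HOST_FRAGMENTS,
) -> bool:
    """True if the hostname contains any listed fragment (case-insensitive)."""
    if not hostname:
        return False  # no reverse DNS: this check alone cannot decide
    host = hostname.lower()
    return any(fragment.lower() in host for fragment in fragments)
```

A call would look like `is_suspicious_host(reverse_dns("203.0.113.7"))`. The leading and trailing dots or dashes in fragments such as `.tor.` are what stop them matching ordinary words: `exit.tor.example.net` matches, `mentor.example.com` does not. Bear in mind that whoever controls an IP range also controls its PTR records, so a stricter version would also check that the hostname resolves back to the same IP.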

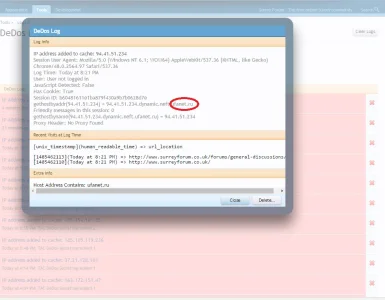

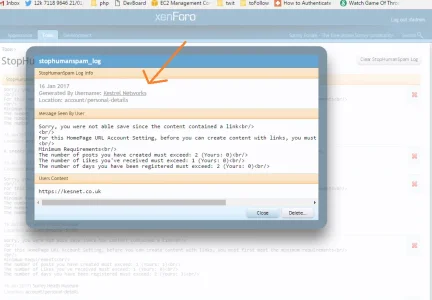

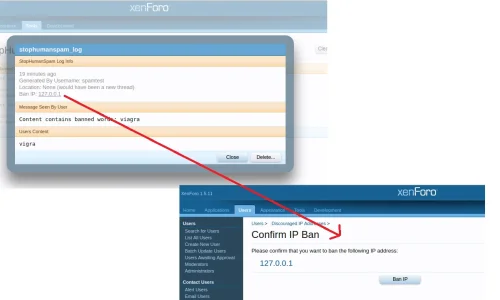

They are also quite easy to spot in the logs. For instance, I block hosts whose names contain certain words (such as "tor"), and I sniff out other hosts using "magic ingredient 1".

There is also an option to update the .htaccess file so that certain IPs are blocked for 48 hours (the ones that are scraping or hitting the site over and over).
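dedos does this for you, but for anyone curious how a time-limited `.htaccess` block can work, here is a minimal Python sketch (not dedos's implementation). It assumes Apache 2.4 (`Require not ip` inside `<RequireAll>`; Apache 2.2 used `Order`/`Deny from` instead) and that the server honours `.htaccess` with `AllowOverride` including `AuthConfig`. The marker comments, the `blocks` dictionary of IP to expiry timestamp, and all function names are hypothetical.

```python
import ipaddress
import re
import time
from typing import Dict, Optional

BLOCK_SECONDS = 48 * 60 * 60  # the 48-hour window mentioned above
# Hypothetical marker comments so the script only ever rewrites its own section.
BEGIN_MARKER = "# BEGIN temp-ip-blocks"
END_MARKER = "# END temp-ip-blocks"
_SECTION_RE = re.compile(
    re.escape(BEGIN_MARKER) + r".*?" + re.escape(END_MARKER), re.DOTALL
)


def add_block(blocks: Dict[str, float], ip: str, now: Optional[float] = None) -> None:
    """Block ip for 48 hours from now; a repeat offender gets a fresh 48 hours."""
    ipaddress.ip_address(ip)  # raises ValueError, so junk never reaches .htaccess
    now = time.time() if now is None else now
    blocks[ip] = now + BLOCK_SECONDS


def prune_expired(blocks: Dict[str, float], now: Optional[float] = None) -> Dict[str, float]:
    """Return only the blocks whose expiry time is still in the future."""
    now = time.time() if now is None else now
    return {ip: until for ip, until in blocks.items() if until > now}


def render_section(blocks: Dict[str, float]) -> str:
    """Apache 2.4 rules denying each blocked IP; empty section if none are active."""
    lines = [BEGIN_MARKER]
    if blocks:
        lines += ["<RequireAll>", "    Require all granted"]
        lines += [f"    Require not ip {ip}" for ip in sorted(blocks)]
        lines.append("</RequireAll>")
    lines.append(END_MARKER)
    return "\n".join(lines)


def update_htaccess_text(text: str, blocks: Dict[str, float]) -> str:
    """Replace the managed section in text, or append it if it is missing."""
    section = render_section(blocks)
    if _SECTION_RE.search(text):
        return _SECTION_RE.sub(lambda _m: section, text, count=1)
    if not text.strip():
        return section + "\n"
    return text.rstrip("\n") + "\n\n" + section + "\n"


def refresh_htaccess(path: str, blocks: Dict[str, float], now: Optional[float] = None) -> Dict[str, float]:
    """Drop expired blocks and rewrite the file; returns the still-active blocks."""
    active = prune_expired(blocks, now)
    try:
        with open(path, encoding="utf-8") as fh:
            text = fh.read()
    except FileNotFoundError:
        text = ""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write(update_htaccess_text(text, active))
    return active
```

Rewriting only the text between the markers leaves the rest of the file (for example the `# BEGIN WordPress` block) untouched. A scheduled job (cron, say) could call `refresh_htaccess` every few minutes so each IP drops out once its 48 hours are up; the `blocks` dictionary would need to be saved between runs, for example as a small JSON file.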

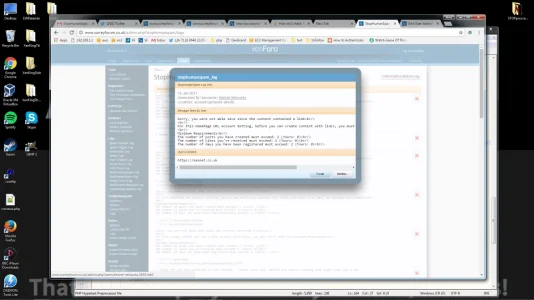

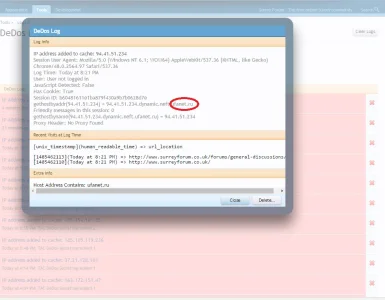

Example from the logs: