
Deleted member 172653

Guest

Good evening JAXEL,

We are using version 2.0.5 of the add-on because our XenForo installation has not yet been upgraded to the latest version (not all of the add-ons we use have been updated by their developers yet).

We want to back up to an EXTERNAL FTP server.

We are having trouble connecting to it.

The server details are as follows:

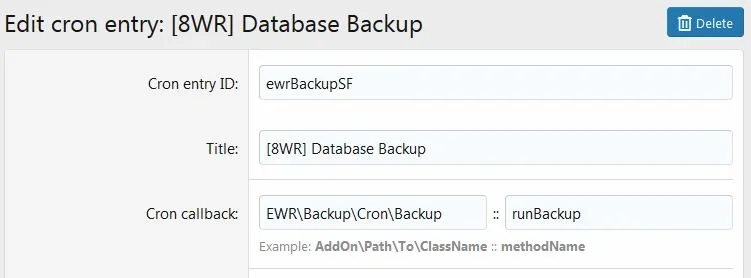

- IP: 45.44.250.43
- Port: 2121
- Directory: / Download / Test /

However, the same parameters do not work in the add-on (see the screen capture): the connection fails with the following message (in _ftplog.txt):

```
-- Connecting to 45.44.250.43:2121...
-- Failed to connect to remote FTP host...
```
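To rule out a network or firewall problem on the web host, independently of the add-on, the connection can be tested from the same server. Below is a minimal sketch using Python's standard `socket` and `ftplib` modules. The helpers `check_tcp` and `check_ftp` are hypothetical names of our own, not part of the add-on, and it assumes Python 3.9+ is available on the server.

```python
import ftplib
import socket


def check_tcp(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a plain TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError on refusal, timeout or unreachable host
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_ftp(host: str, port: int, user: str, password: str,
              directory: str = "/", timeout: float = 10.0) -> tuple[bool, str]:
    """Connect, log in and change to `directory`; return (success, message)."""
    ftp = ftplib.FTP(timeout=timeout)
    try:
        ftp.connect(host, port)      # control connection on the custom port
        ftp.login(user, password)    # fails on wrong credentials
        ftp.cwd(directory)           # fails if the backup path does not exist
        return True, "OK, current directory: " + ftp.pwd()
    except ftplib.all_errors as exc:
        return False, f"{type(exc).__name__}: {exc}"
    finally:
        ftp.close()                  # safe even if connect() never succeeded
```

For example, running `check_tcp("45.44.250.43", 2121)` on the web server, then `check_ftp("45.44.250.43", 2121, "backup_user", "secret")` (placeholder credentials), can help tell the cases apart. If even the TCP check fails from the web server while a desktop FTP client connects fine, the hosting provider's outbound firewall on port 2121 is a likely suspect. If login succeeds, the problem is more likely in the add-on settings. A successful login does not prove that passive-mode data connections, which uploads need, also get through.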

Can you help us and tell us what we are doing wrong?

Thank you in advance for your answer,

Regards,