It's going to depend on where your existing files are. It's going to be a different command based on the source.Sorry for the question which has probably been answered.

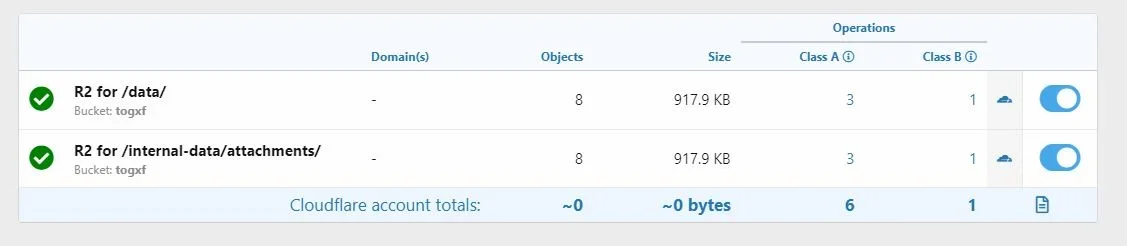

How do I use rclone to move my data to R2? Can someone explain that part to me, please?

The docs for rclone are here: https://rclone.org/docs/

I don't personally have much experience with rclone because I never had a ton of data to move (I used the CLI command I made to move my stuff). It's probably best to search this forum for `rclone sync`; you should find others doing it.
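For a rough idea of what that looks like, here is a minimal sketch. It assumes R2 is reached through rclone's S3 backend with `provider = Cloudflare` and the per-account endpoint `https://<account_id>.r2.cloudflarestorage.com`. The remote name `r2`, the bucket `my-bucket`, the source path, and the two helper functions are all hypothetical placeholders. It only prints an `rclone.conf` section and the `rclone sync` command rather than running anything. Running `rclone config` interactively should produce an equivalent remote.

```python
from __future__ import annotations

import configparser
import io
import shlex


def r2_remote_config(name: str, account_id: str, access_key_id: str,
                     secret_access_key: str) -> str:
    """Render an rclone.conf section for R2 using rclone's S3 backend (hypothetical helper)."""
    # interpolation=None so any '%' characters in keys are stored literally.
    cfg = configparser.ConfigParser(interpolation=None)
    cfg[name] = {
        "type": "s3",
        "provider": "Cloudflare",
        "access_key_id": access_key_id,
        "secret_access_key": secret_access_key,
        # R2's S3-compatible endpoint is specific to your account.
        "endpoint": f"https://{account_id}.r2.cloudflarestorage.com",
        "acl": "private",
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


def rclone_sync_command(source: str, remote: str, bucket: str,
                        prefix: str = "", dry_run: bool = True) -> list[str]:
    """Build an `rclone sync` argument list from source into remote:bucket[/prefix]."""
    dest = f"{remote}:{bucket}"
    if prefix.strip("/"):
        dest += "/" + prefix.strip("/")
    cmd = ["rclone", "sync", source, dest, "--progress"]
    if dry_run:
        # Report what would be copied or deleted without changing anything.
        cmd.append("--dry-run")
    return cmd


if __name__ == "__main__":
    # Placeholder values: substitute your own account ID and R2 API token keys.
    print(r2_remote_config("r2", "ACCOUNT_ID", "ACCESS_KEY_ID", "SECRET_ACCESS_KEY"))
    # The source here is a local folder. For files on another provider, it would
    # be another configured remote instead, e.g. "oldremote:old-bucket" (hypothetical).
    cmd = rclone_sync_command("/path/to/local/files", "r2", "my-bucket")
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

The sketch defaults to `--dry-run` because `rclone sync` makes the destination match the source and deletes files in the destination that aren't in the source. If you only want to add or update files, `rclone copy` takes the same source and destination arguments.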