smallwheels

It could be a bot/proxy that doesn't send any IP information.

How could that be? I'd have assumed that sending the source IP is unavoidable given how TCP/IP works. Apart from that, the IPs are logged in the server's web.log: they show up when you grep it for the URL noted in the log of the 404 add-on. So they are there.

Bots can manipulate the headers used to get the user IP so they are not reliable.

I'm using XF's `getIp()` method with `$allowProxied` set to `true` to get the IP, so unless `convertIpStringToBinary` fails, which is used to get the value stored for the IP, what you see is what you get in the 404 logs.
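To illustrate how both statements can be true at once (the web server always sees a TCP source address, yet the add-on can end up with no usable IP), here is a minimal Python sketch. It is not XF's code: `get_ip`, `ip_to_binary`, and the choice of the `X-Forwarded-For` header are assumptions made for illustration only, and XF may consult different headers or apply additional checks.

```python
import ipaddress


def get_ip(remote_addr, headers, allow_proxied=False):
    """Hypothetical analog of an IP lookup that may trust proxy headers.

    With allow_proxied=True, a client-supplied header takes precedence
    over the TCP source address, so a bot can put anything in there.
    """
    if allow_proxied:
        forwarded = headers.get("X-Forwarded-For", "")
        first = forwarded.split(",")[0].strip()
        if first:
            return first
    return remote_addr


def ip_to_binary(ip_string):
    """Pack an IP string into bytes; return None if it is not a valid IP."""
    try:
        return ipaddress.ip_address(ip_string).packed
    except ValueError:
        return None


# The TCP source address is real, but the header value wins and cannot be packed.
spoofed = get_ip("40.77.167.149", {"X-Forwarded-For": "not-an-ip"}, allow_proxied=True)
stored = ip_to_binary(spoofed)  # None, so nothing useful would be stored
```

Under these assumptions, the web.log would still show the real source address while the application-level log shows an empty IP.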

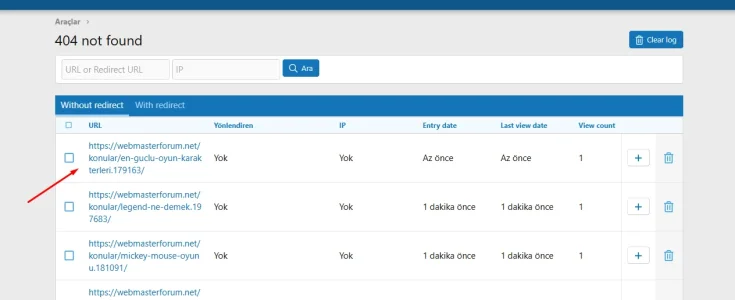

I had a further look into it: apart from malicious bots, the Bing bot is affected as well. For example, I had these requests in the log that ended with a 404:

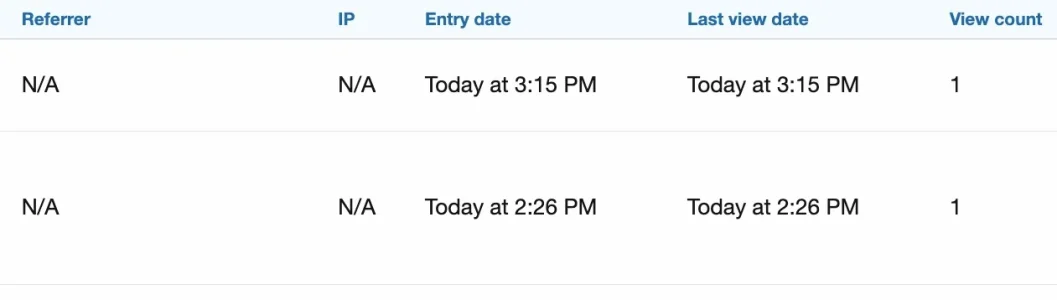

upper one:

msnbot-40-77-167-149.search.msn.com - - [08/Apr/2025:15:15:17 +0200] "GET /tags/start/ HTTP/1.1" 404 9472 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) Chrome/116.0.1938.76 Safari/537.36" 315 10202

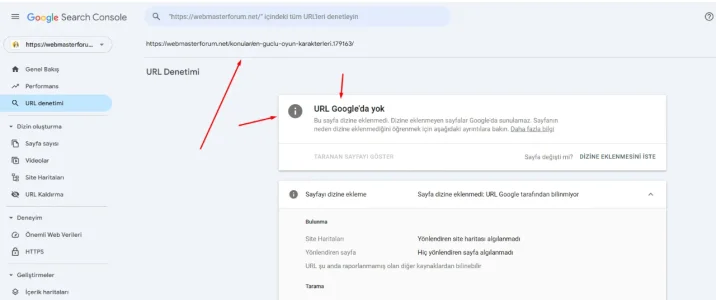

The URL "/tags/start/" exists (there is a tag "start"). It seems to be accessible only when you are logged in (which bingbot is not). If I call the URL while not logged in, I do indeed get a 404. That is a bit strange; I would have expected a 401 here. Clearly not your fault, rather a bug in XF that may hurt SEO ranking (as Bing will get hundreds of 404s on my forum this way).

second one:

msnbot-40-77-167-76.search.msn.com - - [08/Apr/2025:14:26:41 +0200] "GET /threads/Threadurl.1338/Picture-Name.jpg HTTP/1.1" 404 9505 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) Chrome/116.0.1938.76 Safari/537.36" 796 14614

There are hundreds of those. The requested picture does exist (it is embedded in the thread), but as full-size pictures are only accessible to logged-in users, the bot won't get the full-resolution picture. It would have a different URL than the one the bot requested anyway; no idea where it got that URL from.

Anyway: this makes the log pretty useless, as it is cluttered with hundreds of entries caused by Bing that can only be identified manually by grepping through the web.log.
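As a stopgap, the manual grepping could be scripted. The following is a rough Python sketch, assuming the web.log uses the combined log format with two extra trailing numeric fields, as in the lines quoted above. The function names are made up for illustration, and the user agent alone can be spoofed, so the host name check only helps if the web server logs resolved host names as it does here.

```python
import re

# Combined log format; the two trailing numeric fields are ignored.
LOG_RE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return the named fields of one log line, or None if it does not match."""
    match = LOG_RE.match(line)
    return match.groupdict() if match else None


def is_bing_404(entry):
    """True for 404s whose user agent or logged host name points to Bing."""
    if entry is None or entry["status"] != "404":
        return False
    return (
        "bingbot" in entry["agent"].lower()
        or entry["host"].endswith(".search.msn.com")
    )


def bing_404_paths(lines):
    """Collect the requested paths of all Bing-related 404 entries."""
    return [e["path"] for e in map(parse_line, lines) if is_bing_404(e)]


sample = [
    'msnbot-40-77-167-149.search.msn.com - - [08/Apr/2025:15:15:17 +0200] '
    '"GET /tags/start/ HTTP/1.1" 404 9472 "-" "Mozilla/5.0 AppleWebKit/537.36 '
    '(KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) '
    'Chrome/116.0.1938.76 Safari/537.36" 315 10202',
]
print(bing_404_paths(sample))
```

Feeding the whole web.log through `bing_404_paths` (for example via `open(path)`) would give the list of URLs that Bing hit with a 404, which could then be compared against the add-on's log.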