AWS, the "butter face" of hosting?

Not entirely sure what you're implying here, to be honest; at least not from how I understand the meaning of "butter face".

I've lost count of how many sites without massive bandwidth requirements have slashed their web infrastructure costs by up to 90% by moving off AWS. Higher costs are not only associated with their BW.

Yes, you can definitely ruin yourself (or your company) in many creative ways with only a few checkboxes; I was merely pointing out that most of those ways are misconfiguration-driven. And we can agree that a lot of that is down to their counter-intuitive UI and how many easy-to-click checkboxes incur serious fees.

But my point was: with bandwidth costs it doesn't matter if you're an expert, you're getting a bad deal and that's it.

Now, should 90% of websites just use some simple shared hosting service for a while before blindly committing to managing infra sold at a premium with API-driven management as a core feature? Yes.

Many times yes.

Throw in the AWS learning curve compared to many of their competitors and you have additional hidden costs adding up. The AWS user interface is unintuitive, with a maze of options and features scattered in a haphazard way.

Depends on who you see as competitors I'd say. If you mean that it's more complex than most/all "smaller" hosting services, then yes definitely.

But is it more complex than Azure, GCP, and the like? Not really tbh. And those are "[AWS'] competitors".

Though yes their UI sucks, that I would not disagree with.

However, I'd note that many/most extensive users of their services will eventually gravitate towards their CLI and other management tools à la Terraform & friends, which means they don't really use the UI in the first place.

Does it suck for everyone else not as deeply invested in cloud configuration memes? Yeah. Does it explain why they have no incentive to improve it? Yeah, also.

What good is the ability to scale up quickly (which many others can provide) if it costs you dearly? AWS doesn't have a monopoly on scaling, and others can do it without the massive increase in costs.

How much the raw cost matters depends on per-user revenue though. In the case of many web platforms (of which many fall under a broad definition of "e-commerce"), the marginal extra infra cost per user is completely irrelevant even with the "AWS tax" on actual costs of service, given that people don't really browse those websites for entertainment, but with the intent to spend.
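To put that argument in numbers, here's a rough sketch. Every figure in it is a made-up assumption for illustration (the hosting cost, the user count, the revenue per user); only the 10x multiplier is derived from the "up to 90%" savings claim quoted above, and even that is a best case, not a measurement.

```python
def infra_share_of_revenue(monthly_infra_cost: float,
                           monthly_users: int,
                           revenue_per_user: float) -> float:
    """Fraction of per-user revenue eaten by per-user infra cost."""
    cost_per_user = monthly_infra_cost / monthly_users
    return cost_per_user / revenue_per_user


# All values below are hypothetical, chosen only to illustrate the point.
CHEAP_HOST_COST = 1_000.0   # USD/month on a cheaper host (assumption)
AWS_MULTIPLIER = 10.0       # a 90% saving elsewhere implies ~10x on AWS
MONTHLY_USERS = 100_000     # assumption
REVENUE_PER_USER = 5.0      # USD/month, e-commerce-like site (assumption)

cheap_share = infra_share_of_revenue(
    CHEAP_HOST_COST, MONTHLY_USERS, REVENUE_PER_USER)
aws_share = infra_share_of_revenue(
    CHEAP_HOST_COST * AWS_MULTIPLIER, MONTHLY_USERS, REVENUE_PER_USER)

print(f"Cheaper host: {cheap_share:.2%} of revenue per user")
print(f"With 'AWS tax': {aws_share:.2%} of revenue per user")
```

With these made-up inputs the infra share goes from about 0.2% to about 2% of revenue per user. Drop the revenue per user to a few cents (an ad-funded site, say) and the same 10x stops being a rounding error.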

That said, I agree that in many cases (and maybe I sound like a shill here so let me reiterate that we don't run on AWS, nor would I recommend it in general) it doesn't make sense compared to other options.

As for a monopoly on scaling, they're indeed not the only ones, but I also didn't suggest that this was a strength of theirs (and I don't know of very many web platforms that would actually need more than a couple dozen servers to run, at most).

Their strength is instead very much the breadth of services they offer and integrate together and allow you to control fully via APIs.

They might suck at the depth part of it (many of these services are bare-minimum functionality to be marketable), but they are still there and working. And that breadth is very much unique.

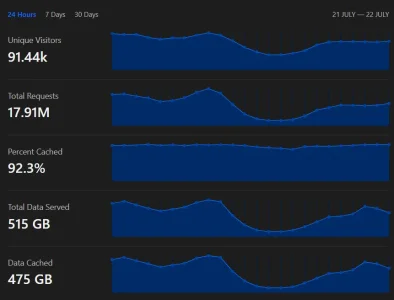

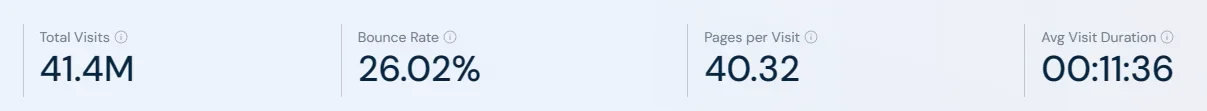

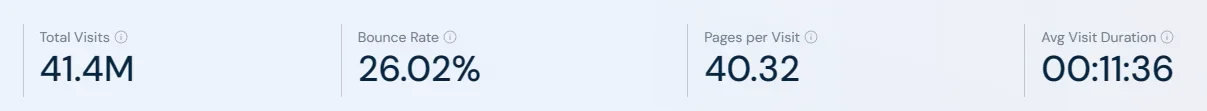

I don't know how much they are spending on servers but we spend like $100 (Dedicated Server) + $25 (Cloudflare) + $5 (GDrive for Backups) = $130/month

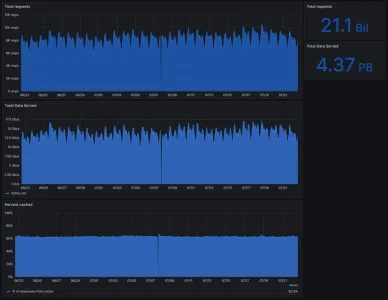

Idk how much they are spending, but from the screenshots they shared I'd expect at least one or two extra zeros on their monthly bill.
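Spelled out as a quick sketch: the line items are the ones quoted above, while the "one or two extra zeros" range is purely my guess from the screenshots, not a figure anyone shared.

```python
# Monthly line items from the quoted post, in USD.
quoted_costs = {
    "dedicated_server": 100,
    "cloudflare": 25,
    "gdrive_backups": 5,
}
self_hosted_total = sum(quoted_costs.values())

# "One or two extra zeros" means x10 to x100. This is a guess, not data.
guessed_range = (self_hosted_total * 10, self_hosted_total * 100)

print(f"Quoted setup: ${self_hosted_total}/month")
print(f"Guessed bill: ${guessed_range[0]:,} to ${guessed_range[1]:,}/month")
```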

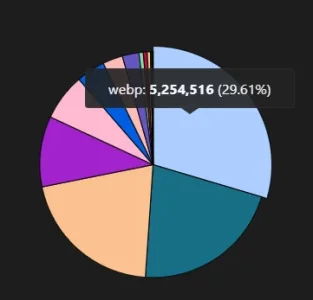

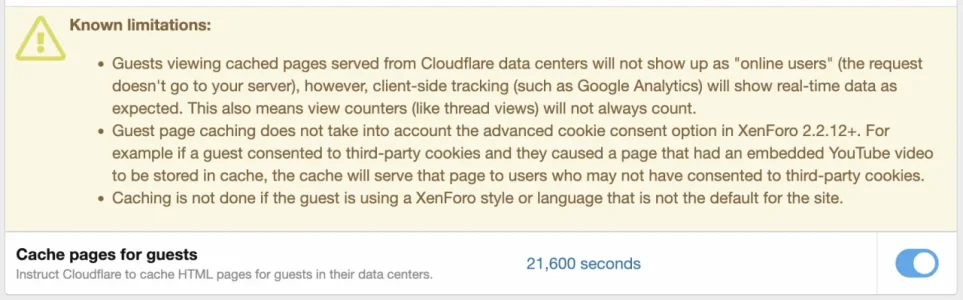

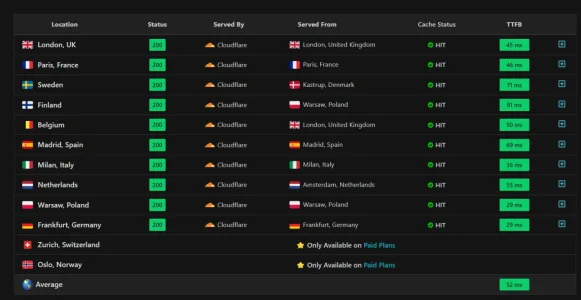

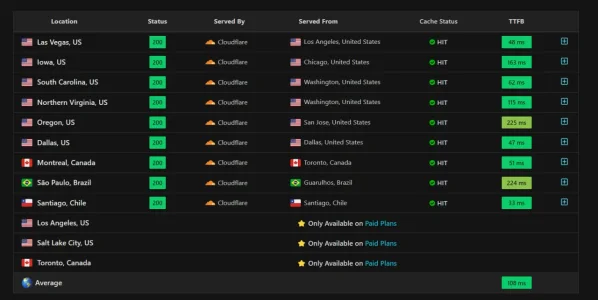

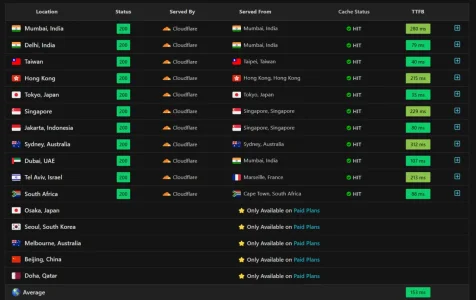

That said, your case is also peculiar. I don't know what it is for, but the numbers suggest at least a rather media-lightweight site with very good cacheability (and to be fair, AT does look very cacheable from here).

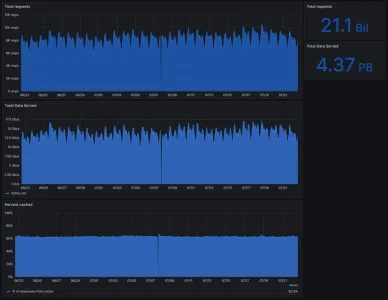

At least in our case, we definitely couldn't, and while we don't run on CF (so I can't exactly match the CF dashboard style) our costs are around $3000/month after many very friendly discounts all the way through.

(ignore the unclear "bytes_out" label on the middle graph: the data it's pulling from is in bytes, but multiplied by 8 to match the axis of the plot, which is in bits/s, while the monthly sum on the top right is correctly in bytes, i.e. x1)
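In case the units are still confusing, this is roughly what the graph does, as a minimal sketch. The sample values and the 60-second interval are invented for the example; they are not our real traffic numbers, and the function name is just for illustration.

```python
def bytes_to_bits_per_second(byte_count: int, interval_seconds: float) -> float:
    """Average rate in bits/s for `byte_count` bytes sent over the interval."""
    return byte_count * 8 / interval_seconds


# Hypothetical "bytes_out" samples, one per 60-second scrape interval.
INTERVAL_SECONDS = 60
samples_bytes = [75_000_000, 150_000_000, 37_500_000]

# What the plot shows: each sample multiplied by 8 and expressed in bits/s.
plot_bits_per_second = [
    bytes_to_bits_per_second(b, INTERVAL_SECONDS) for b in samples_bytes
]

# What the total in the corner shows: a plain sum of bytes, no x8.
total_bytes = sum(samples_bytes)

print(plot_bits_per_second)
print(total_bytes)
```

Same underlying data in both cases; only the graph applies the x8.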

Yup, I thought they also did hosting, hence my question. A quick check on their website fixed that notion lol.

They also do hosting, in some limited fashion. Rather than managing a server directly they will run your code on their own servers (the product is called Cloudflare Workers). But for something like XF they definitely aren't using that bit, at least not for the core of XF.

Also, in the end, whichever host XF Cloud might have picked would be just fine. Quality of service is achieved by redundancy and fallback plans rather than by betting that paying 30% extra (or however much) means your provider will never fail you.