I've never actually used a robots.txt file. I never got round to it, and it hasn't generally been an issue. I've been reading around on here for examples, but I still find it a bit confusing, partly because the examples all differ and partly because I don't fully understand some of them. I thought it was just for blocking robots from crawling the site, but it seems people are also adding sections of their forums to it.
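For illustration, here is a minimal sketch of the "sections" idea. Rules are matched against URL paths, so a forum can keep crawlers out of areas such as search results or member profiles while leaving threads open. The paths `/search/` and `/members/` and the domain `example.com` are hypothetical placeholders. Python's standard `urllib.robotparser` is used only to show how a compliant parser reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a forum: one group that applies to every
# crawler ("*") and asks it to stay out of two sections.
# The paths /search/ and /members/ are illustrative, not from any real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /members/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Rules match by path prefix: everything under /search/ or /members/ is
# blocked, while threads elsewhere remain crawlable.
for path in ["/threads/robots-txt-help.123/", "/search/42/", "/members/alice.7/"]:
    allowed = parser.can_fetch("Googlebot", "https://example.com" + path)
    print(path, "allowed" if allowed else "blocked")
```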

Last time I looked into this, I thought you needed to add an "Allow" line at the end to definitively allow Google. Is that right?
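As I understand the standard (RFC 9309), anything not matched by a `Disallow` rule is allowed by default, so no closing `Allow` line is needed for Google. `Allow` is mainly useful for carving an exception out of a broader `Disallow`. Below is a minimal sketch using Python's `urllib.robotparser`; the paths and `example.com` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def allowed(robots_txt: str, agent: str, path: str) -> bool:
    """Parse a robots.txt string and ask whether `agent` may fetch `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, "https://example.com" + path)

# Nothing here mentions Googlebot or uses Allow: anything not disallowed
# is allowed, so no trailing "Allow" line is needed.
NO_ALLOW = """\
User-agent: *
Disallow: /search/
"""

# Allow carves an exception out of a broader Disallow (hypothetical paths).
WITH_EXCEPTION = """\
User-agent: *
Allow: /search/help/
Disallow: /search/
"""

print(allowed(NO_ALLOW, "Googlebot", "/threads/1/"))          # True
print(allowed(NO_ALLOW, "Googlebot", "/search/1/"))           # False
print(allowed(WITH_EXCEPTION, "Googlebot", "/search/help/"))  # True
print(allowed(WITH_EXCEPTION, "Googlebot", "/search/1/"))     # False
```

One caveat: `urllib.robotparser` applies the first matching rule in file order, whereas Google uses the most specific (longest) matching path. Putting the `Allow` line first keeps both interpretations in agreement.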

Some explanation of it all would be gratefully received, along with a simple example. I currently have these bots crawling (in addition to Google):

Ahrefs, Bing, Petal Search and Moz Dotbot have all been around for a long time, and I'm not aware of any issues with them. Recently, though, some new ones have appeared:

Anthropic, ImageSift, Amazon and ByteDance. I have no idea where Anthropic and ImageSift came from, or why Amazon has suddenly popped up.
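Here is a sketch of how specific crawlers can be blocked while everyone else is left alone; several `User-agent` lines can share one group of rules. The tokens `ClaudeBot` (Anthropic), `Bytespider` (ByteDance), `Amazonbot` and `ImagesiftBot` are assumptions about what these crawlers send, so check the exact names in your access logs or each operator's documentation. Bear in mind that robots.txt is a request, not access control: well-behaved crawlers honour it, but nothing forces them to.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block some crawlers entirely, leave everyone else alone.
# The user-agent tokens below are ASSUMPTIONS; confirm them against your logs.
ROBOTS_TXT = """\
User-agent: ClaudeBot
User-agent: Bytespider
User-agent: Amazonbot
User-agent: ImagesiftBot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Named crawlers hit the first group; others fall back to the "*" group.
for agent in ["ClaudeBot", "Bytespider", "Googlebot", "bingbot"]:
    ok = parser.can_fetch(agent, "https://example.com/threads/1/")
    print(f"{agent:12} {'allowed' if ok else 'blocked'}")
```

The empty `Disallow:` in the catch-all group means "nothing is disallowed". Googlebot and bingbot match none of the named agents, so they fall back to that group and stay allowed.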

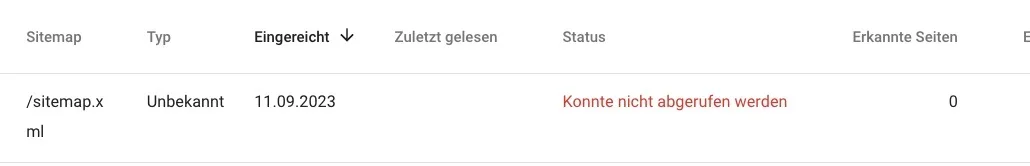

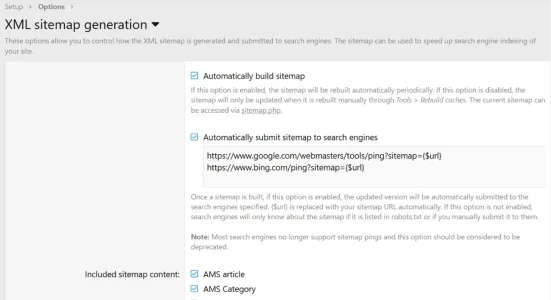

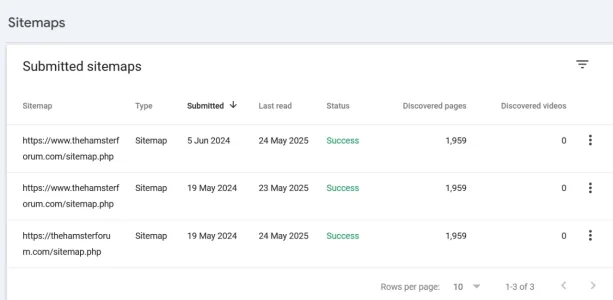

Also, is it actually essential to have a robots.txt? And is it essential to put your sitemap at the end? I'm still confused about whether mine is PHP or XML (I think it's PHP, so can I actually put an XML sitemap in robots.txt?)
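On these last questions, here is a short sketch that again uses Python's `urllib.robotparser` to stand in for a compliant crawler. Crawlers following RFC 9309 generally treat a missing or empty robots.txt as "no restrictions", so the file is not strictly essential. The `Sitemap:` line takes a full URL and is independent of the user-agent groups, so it can go anywhere in the file. As far as I know, the URL does not have to end in `.xml` as long as it returns valid sitemap XML. The address `https://example.com/sitemap.php` is a hypothetical placeholder, and `site_maps()` needs Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

def parse(robots_txt: str) -> RobotFileParser:
    """Parse a robots.txt string the way a compliant crawler would."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

# An empty robots.txt blocks nothing.
empty = parse("")
print(empty.can_fetch("Googlebot", "https://example.com/anything/"))  # True

# The Sitemap line is a full URL; the .php address here is hypothetical.
with_sitemap = parse("""\
User-agent: *
Disallow: /search/

Sitemap: https://example.com/sitemap.php
""")
print(with_sitemap.site_maps())  # ['https://example.com/sitemap.php']
```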

Edit: I've occasionally had Facebook and Apple as well.
