Makes a nice change that it wasn't DNS for once and I always appreciate a reasonably detailed write up, thanks for linking.

Do you use Cloudflare?

- Thread starter Sim

- Tags

- cloudflare

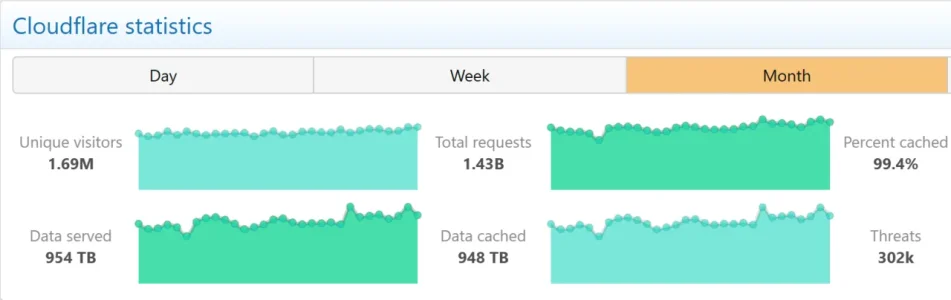

> the main server cannot keep up with the bandwidth needed.

954 TB with a 'T'

> If you have indeed been running your own servers on the internet for 35 years, so since 1990, before the www/http was invented: Congratulations, you must be a veteran. But if you are such a veteran, you must know that your statement above was wrong and primitive trolling or rage bait.

Ah, the young crowd that doesn't know about BBS's

> Ah, the young crowd that doesn't know about BBS's

Those were barely attached to the internet but rather dial-in via modem. Personally, I would not really categorize those as "servers" either (they were indeed servers, but a completely different world from internet servers in almost every aspect). As part of the young crowd I was using things like Gopher, Veronica, Telnet and Archie in 1990, along with local BBSs and Usenet (and a very occasional email).

@eva2000

Instead, it was triggered by a change to one of our database systems' permissions which caused the database to output multiple entries into a “feature file” used by our Bot Management system. That feature file, in turn, doubled in size. The larger-than-expected feature file was then propagated to all the machines that make up our network.

The software running on these machines to route traffic across our network reads this feature file to keep our Bot Management system up to date with ever changing threats. The software had a limit on the size of the feature file that was below its doubled size. That caused the software to fail.

-----

Sounds like someone changed some simple code and it was incorrect.

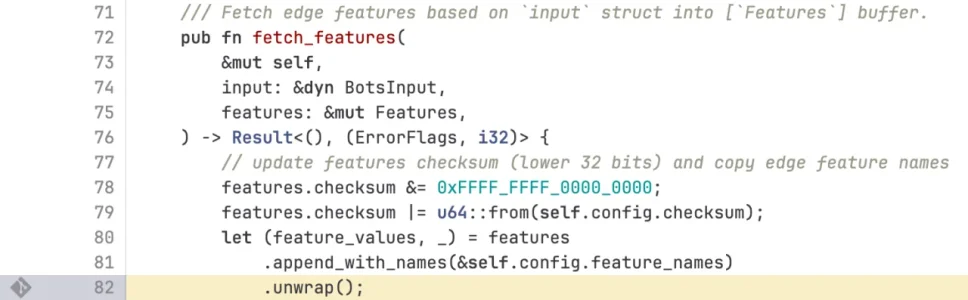

> Cloudflare outage post mortem https://blog.cloudflare.com/18-november-2025-outage/

Kudos to Cloudflare for transparency, but a little bit of head-scratching about the programming error that caused the outage. Sounds like bad craftsmanship to me - people mentioned that it would be an absolute no-no to use "unwrap" on a server in such a way.

Also, it seems there was/is a lack of error handling, and so of robustness, in the application in question (probably one of the most common root causes apart from DNS issues). I wonder how a company like Cloudflare comes to deploy such a piece of code worldwide (with drastic consequences) instead of having it run in a limited environment first to limit the damage in case of trouble. Seems they follow the "fix-forward" approach: less careful testing up front, and instead quickly fixing the bugs that show up.
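To illustrate what "run it in a limited environment first" could mean in practice, here is a rough Rust sketch of a staged rollout gate. It is only a sketch under my own assumptions, not how Cloudflare deploys anything: the stage percentages, the error-rate threshold and the names `in_rollout` and `next_step` are all made up for the example.

```rust
/// Rollout stages as a percentage of the fleet (illustrative values only).
const STAGES: [u8; 4] = [1, 10, 50, 100];

/// What to do after observing the current stage.
#[derive(Debug, PartialEq)]
enum Decision {
    Advance(u8),
    Done,
    RollBack,
}

/// Deterministically decide whether a machine belongs to the current stage.
/// Hypothetical scheme: machines are bucketed by `machine_id % 100`.
fn in_rollout(machine_id: u64, percent: u8) -> bool {
    (machine_id % 100) < u64::from(percent)
}

/// Advance to the next stage only if the observed error rate is acceptable.
fn next_step(current_percent: u8, errors: u32, requests: u32, max_error_rate: f64) -> Decision {
    // No traffic observed: treat as unhealthy rather than assume success.
    if requests == 0 {
        return Decision::RollBack;
    }
    let rate = f64::from(errors) / f64::from(requests);
    if rate > max_error_rate {
        return Decision::RollBack;
    }
    // Pick the first stage that is larger than the current one, if any.
    match STAGES.iter().find(|&&p| p > current_percent) {
        Some(&p) => Decision::Advance(p),
        None => Decision::Done,
    }
}
```

With something like this, a bad file that breaks the first 1% of machines would trigger `Decision::RollBack` long before it reaches the rest of the fleet.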

> Sounds like someone changed some simple code and it was incorrect.

I read somewhere they rewrote the piece of code in question in Rust to be safe from memory leaks and created a memory leak-like issue while doing so...

> memory leak-like issue

memory leak would cause duplicate entries?

sounds weird. I don't know.

> memory leak would cause duplicate entries ?
>
> sounds weird. i dont know.

No. The memory for this process was limited and the data did not fit into the memory. This should have been handled, e.g. by ignoring the new data or by aborting or whatever else - but it wasn't. It was just badly written code.
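For anyone wondering what "handling it" could look like, here is a minimal Rust sketch. It is not Cloudflare's actual code: `MAX_FEATURES`, `load_features` and `apply_update` are names I made up, the limit of 200 is just an illustrative number, and I'm assuming a file with one feature per line. The point is only that an oversized file comes back as an error the caller can deal with, instead of a panic from `unwrap`.

```rust
/// Hypothetical cap on how many features the process has room for.
const MAX_FEATURES: usize = 200;

#[derive(Debug, PartialEq)]
enum LoadError {
    TooManyFeatures { found: usize, limit: usize },
}

/// Parse one feature name per non-empty line, refusing files over the limit.
fn load_features(contents: &str) -> Result<Vec<String>, LoadError> {
    let features: Vec<String> = contents
        .lines()
        .map(str::trim)
        .filter(|line| !line.is_empty())
        .map(String::from)
        .collect();

    if features.len() > MAX_FEATURES {
        return Err(LoadError::TooManyFeatures {
            found: features.len(),
            limit: MAX_FEATURES,
        });
    }
    Ok(features)
}

/// Apply an update, keeping the last known good set if the new file is bad.
/// Returns true if the new file was accepted.
fn apply_update(current: &mut Vec<String>, new_contents: &str) -> bool {
    match load_features(new_contents) {
        Ok(features) => {
            *current = features;
            true
        }
        Err(err) => {
            // Calling .unwrap() on the result instead would panic here
            // and take the whole process down.
            eprintln!("rejected feature file, keeping previous one: {:?}", err);
            false
        }
    }
}
```

Whether "keep the old file" or "abort" is the right reaction depends on the system, but either way it becomes a deliberate decision in the caller.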

I can't do anything about the other networks my site is passing through. That's how the internet works.

I can change whether I send all my users' data to an additional place and create a serial dependency, which makes my website less reliable.

Cloudflare can decrypt all of the data being sent to it. That's required in order for Cloudflare to work.

Yes, I know it's required, since the end point for the client facing SSL certificate is their network, but I seriously doubt this is something Cloudflare would consider doing, much less actually do, given that news of such a thing would essentially destroy their reputation.

Cloudflare's warrant canary has never been flagged.

> If you are using Cloudflare as an endpoint/gateway protection/proxy, the encryption between the client and your site terminates at Cloudflare - else it could not work. So basically Cloudflare can see everything your users are doing, including content, plus obviously they have all the data to be able to build profiles at scale, as they serve a huge proportion of the internet traffic. This is
>
> plus - with Cloudflare being a US-based company - it does not get better.

See my reply to ES Dev Team.

If you think you're any better off with other countries, look up the recent raids and server confiscations in the Netherlands, a country famed for its so-called privacy protections.

Because Cloudflare terminates the end user's SSL session on their hardware, at that point the traffic is decrypted and plain text. They then proxy the connection back to your servers, probably over HTTPS, but they effectively perform an authorised "man in the middle" (MITM) attack on the connection. It's not dissimilar to that done by lots of corporate network gateways that rely on trusted root certificates installed on staff devices. So Cloudflare can read all the data passing through them - it's one of the reasons they can do all their clever firewall stuff.

I know this, but do you seriously think they would use this for any purpose other than handling the WAF and other features? If so, the FBI and many major companies would not be using them to protect their websites and other infrastructure.

You could of course design your systems to bypass Cloudflare for some items where you wanted full end-to-end encryption, but then you're revealing the location of at least some of your hardware, and of course the original use case for Cloudflare was handling DDOS attacks, in which case you want your actual servers to stay hidden. Of course, later the WAF and edge caching became major factors in potentially using Cloudflare. The MITM is enough that I don't tend to use Cloudflare, but we do have some clients using it for parts of their business and in front of the stuff we're doing for them.

I mean, if you can handle a reasonable DDOS then there really isn't a lot (beyond the WAF) that Cloudflare offers. We get the occasional modest DOS from some client's forum members who have been banned: they get grumpy, rent a score of VMs from some random cloud provider and do a DOS that way. When it's only 20 or so IP addresses it's not too hard to drop the traffic at the firewall. A large DDOS would take things offline, though. I mean, the recent one on Azure was 15.72 terabits per second from the Aisuru botnet using 500,000 IP addresses. I doubt many of us could have mitigated that amount of traffic. I'm guessing that most people are using rented VMs and virtual hosts for their forums, and a smaller number have their own hardware co-located to various degrees, so the capacity to handle large DDOS attacks is probably limited for most of us. We've had the odd server taken out over the years by a good-sized DDOS; even when the traffic is blackholed, the IP in question is of course out of action.
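As a rough illustration of the "only 20 or so IP addresses" case, here is a small Rust sketch that counts requests per client IP in an access log and lists the ones over a threshold as candidates to drop. The assumptions are mine: the client IP is the first whitespace-separated field of each line, and `count_by_ip` and `block_candidates` are made-up helper names. Actually dropping the traffic in your firewall is left out.

```rust
use std::collections::HashMap;
use std::net::IpAddr;

/// Count requests per client IP, assuming the IP is the first
/// whitespace-separated field of each log line.
fn count_by_ip(log: &str) -> HashMap<IpAddr, u32> {
    let mut counts = HashMap::new();
    for line in log.lines() {
        // Skip lines that are empty or do not start with a parseable IP.
        if let Some(Ok(ip)) = line.split_whitespace().next().map(|f| f.parse::<IpAddr>()) {
            *counts.entry(ip).or_insert(0) += 1;
        }
    }
    counts
}

/// Return the IPs with more than `threshold` requests, busiest first.
fn block_candidates(counts: &HashMap<IpAddr, u32>, threshold: u32) -> Vec<IpAddr> {
    let mut over: Vec<(IpAddr, u32)> = counts
        .iter()
        .filter_map(|(&ip, &n)| if n > threshold { Some((ip, n)) } else { None })
        .collect();
    // Sort by count descending, then by address so the order is stable.
    over.sort_by(|a, b| b.1.cmp(&a.1).then(a.0.cmp(&b.0)));
    over.into_iter().map(|(ip, _)| ip).collect()
}
```

This works for a handful of noisy addresses; it obviously does nothing for an attack spread across hundreds of thousands of IPs, where the pipe itself is saturated before the firewall sees the packets.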

It'll be interesting to read the full writeup of this issue since we've only got the "file grew too big" information at present. Still always nice (in a nasty way) when someone else is having the woes and it's out of your hands!

There are inexpensive DDOS services people can rent these days, and it's not difficult for them to bring down a single server. I've seen it happen more than once, sometimes with attacks lasting weeks, and been called in to set up protection.

Sorry: A statement like that lacks absolutely any competence about privacy, network security and about how the internet works. If this is in fact your level of knowledge you should not offer services on the internet, let alone a forum.

They are in fact correct that Google's services can be a privacy violation.

For that matter, when you visit any website, its web server logs can record the site you came from, the browser used (and in many cases the browser's plug-ins), your IP address and, from the IP address, your geolocation.

> Yes, I know it's required, since the end point for the client facing SSL certificate is their network, but I seriously doubt this is something Cloudflare would consider doing, much less actually do, given that news of such a thing would essentially destroy their reputation.
>
> Cloudflare's warrant canary has never been flagged.

They're a publicly traded corporation and have incentives to do evil things to make money.

Very few publicly traded corporations don't eventually start abusing the market position that users give them.

But once they get a large amount of the market, that's usually when the abuse starts.

I don't think their reputation would be destroyed if they're found out to be misusing all the user traffic you send them. Look at what other people tolerate in exchange for small conveniences. They could totally get away with it.

And who's to say they couldn't be hacked? A large centralized pipe containing the ins and outs of half the world's servers is a pretty juicy target.

You create a new potential attack vector when you use their service. That's a showstopper for me.

> They're a publicly traded corporation and have incentives to do evil things to make money.
>
> Very few publicly traded corporations don't eventually start abusing the market position that users give them.
>
> But once they get a large amount of the market, that's usually when the abuse starts.

Respectfully, what I'm reading from you is a cynical view with unsupported assumptions. Cloudflare already has 82% of the reverse proxy market share, and 20% of all websites go through them. Rather than abusing their market position, I've seen them increase efforts at transparency, and they are one of the most transparent companies out there.

> I don't think their reputation would be destroyed if they're found out to be misusing all the user traffic you send them. Look at what other people tolerate in exchange for small conveniences. They could totally get away with it.

I seriously doubt they'd get away with it. Their industry is one where trust is one of the most valuable assets a company can have. Reputational damage would far outweigh any short term gains breaking trust might have. Their customer base tends to be technically savvy, and they could not get away with undermining privacy without being quickly caught.

> And who's to say they couldn't be hacked? A large centralized pipe containing the ins and outs of half the world's servers is a pretty juicy target.

There's far more traffic going through a single vendor, Cisco, who provides about 80% of the world's networking equipment. Hosting at Amazon, which has large centralized hosting locations, that's pretty juicy too. And they've had their share of big outages.

> You create a new potential attack vector when you use their service. That's a showstopper for me.

There's no one size fits all, and for me, the pros of Cloudflare outweigh the cons. That seems to be the choice of several other people in the field here on XenForo's forums. I was once in your camp. Was.

It's fine, we don't have to agree!
