Do you use Cloudflare?

- Thread starter: Sim

- Tags: cloudflare

zappaDPJ

Well-known member

According to the BBC...

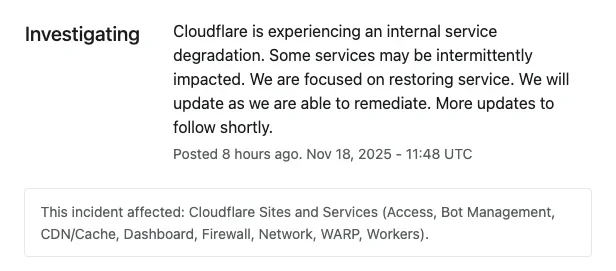

Cloudflare said the "significant outage" occurred after a configuration file designed to handle threat traffic did not work as intended and "triggered a crash" in its software handling traffic for its wider services.

www.bbc.co.uk

Cloudflare apologises for outage which took down X and ChatGPT

"We apologise to our customers and the Internet in general" the web infrastructure company said.

ES Dev Team

Well-known member

I'm using fail2ban here with good success across 30 servers.

It works approximately as well, users don't have to click a captcha, and we don't have outages. I also don't like sending all traffic over to another company - that's a huge privacy violation against my users.

MySiteGuy

Well-known member

I'm in the same boat basically. We've got a robust setup as well and good network border control to ward off the rampage of bots and unwanted crawlers. CF is nice as a plug-n-play where you don't have in-house expertise to do it all. I'm afraid there's too much brain drain going on and there's certainly a lot less desire out there to skill up in the server ops world (os/db/net/app layers). People just want it to work out of the box instead of learning how it actually works. (Great fit for cloud users, turnkey solution and so on...)

That's a view which neglects that for many it has nothing to do with in-house expertise. For some, it's a matter of spending time where it's most cost-effective. I wrote a suite of tools for my sites, including a dynamic robots.txt generator which would create rules based on what was fetching it, and auto-blocking if an IP disregarded the rules. I had a setup of smart rules that could also determine if a user agent was being spoofed by crawlers, and much more, along with using prepackaged tools like fail2ban.

At some point, I could no longer keep up with the game of whack-a-mole without spending precious development time on it. If your team's time costs less than Cloudflare or a similar WAF/CDN service, it makes sense to do it in house, otherwise it doesn't. Have you run the numbers?
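
For readers who have not built something like the robots.txt approach described above, here is a minimal Python sketch of the idea. It is an illustration under assumptions, not the poster's actual tooling: the `TRAP_PREFIX` path, the allow-list of crawler names, and the `Blocker` class are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical path that robots.txt forbids; nothing legitimate links to it.
TRAP_PREFIX = "/private/"


def robots_txt(user_agent: str) -> str:
    """Build a robots.txt body tailored to whoever is fetching it."""
    known_good = ("googlebot", "bingbot")  # illustrative allow-list, an assumption
    if any(name in user_agent.lower() for name in known_good):
        return f"User-agent: *\nDisallow: {TRAP_PREFIX}\n"
    # Unknown crawlers are asked to stay out entirely.
    return "User-agent: *\nDisallow: /\n"


@dataclass
class Blocker:
    """Tracks which IPs have read the rules and which have broken them."""
    fetched_rules: set = field(default_factory=set)
    blocked: set = field(default_factory=set)

    def handle(self, ip: str, path: str) -> int:
        """Return the HTTP status this request would receive."""
        if ip in self.blocked:
            return 403
        if path == "/robots.txt":
            self.fetched_rules.add(ip)
            return 200
        # An IP that read the rules and still requests a disallowed path is blocked.
        if ip in self.fetched_rules and path.startswith(TRAP_PREFIX):
            self.blocked.add(ip)
            return 403
        return 200
```

A real deployment would also need to verify that a claimed crawler user agent is genuine (the spoofing check mentioned above) and to expire old entries, both of which this sketch leaves out.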

MySiteGuy

Well-known member

I'm using fail2ban here with good success across 30 servers.

It works approximately as well, users don't have to click a captcha, and we don't have outages. I also don't like sending all traffic over to another company - that's a huge privacy violation against my users.

How is it a huge privacy violation versus all the other networks their traffic is already passing through? Are you not using https on all your sites?

ES Dev Team

Well-known member

How is it a huge privacy violation versus all the other networks their traffic is already passing through? Are you not using https on all your sites?

I can't do anything about the other networks my site's traffic passes through. That's how the internet works.

I can change whether I send all my users' data to an additional place and create a serial dependency, which makes my website less reliable.

Cloudflare can decrypt all of the data being sent to it. That's required in order for Cloudflare to work.
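
The serial dependency point can be put into rough numbers. The sketch below assumes the two components fail independently and uses made-up availability figures; it also ignores the cases where a proxy keeps a site up that would otherwise have been knocked over, so treat it as an illustration of the arithmetic only.

```python
HOURS_PER_YEAR = 24 * 365


def serial_availability(*parts: float) -> float:
    """Availability of a chain in which every component must be up at once."""
    total = 1.0
    for availability in parts:
        total *= availability
    return total


def downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


# Hypothetical figures: an origin and a proxy that are each up 99.9% of the time.
origin_only = 0.999
behind_proxy = serial_availability(0.999, 0.999)
print(f"origin only:  {downtime_hours(origin_only):.2f} h/year")
print(f"behind proxy: {downtime_hours(behind_proxy):.2f} h/year")
```

Under these assumptions, putting a second 99.9% component in front of the origin roughly doubles the expected downtime.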

I'm using fail2ban here with good success across 30 servers.

It works approximately as well, users don't have to click a captcha, and we don't have outages. I also don't like sending all traffic over to another company - that's a huge privacy violation against my users.

We get it. You don't need to mention it in each post!

smallwheels

Well-known member

Are you not using https on all your sites?

If you are using Cloudflare as an endpoint/gateway protection/proxy, the encryption between the client and your site terminates at Cloudflare, otherwise it could not work. So basically Cloudflare can see everything your users are doing, including content, and obviously they have all the data needed to build profiles at scale, as they serve a huge proportion of internet traffic. This is "a huge privacy violation against my users", plus, with Cloudflare being a US-based company, it does not get better.

chillibear

Well-known member

How is it a huge privacy violation versus all the other networks their traffic is already passing through? Are you not using https on all your sites?

Because Cloudflare terminates the end user's SSL session on their hardware, at that point the traffic is decrypted and plain text. They then proxy the connection back to your servers, probably over HTTPS, but they effectively perform an authorised "man in the middle" (MITM) attack on the connection. It's not dissimilar to that done by lots of corporate network gateways that rely on trusted root certificates installed on staff devices. So Cloudflare can read all the data passing through them - it's one of the reasons they can do all their clever firewall stuff.

You could of course design your systems to bypass Cloudflare for some items where you wanted full end-to-end encryption, but then you're revealing the location of at least some of your hardware, and of course the original use case for Cloudflare was handling DDOS attacks, in which case you want your actual servers to stay hidden. Of course later the WAF and edge caching became major factors in potentially using Cloudflare. The MITM is enough that I don't tend to use Cloudflare, but we do have some clients using it for parts of their business and in front of the stuff we're doing for them.

I mean if you can handle a reasonable DDOS then there really isn't a lot (beyond the WAF) that Cloudflare offers. We get the occasional modest DOS from clients' forum members who have been banned: they get grumpy, rent a score of VMs from some random cloud provider and do a DOS that way. When it's only 20 or so IP addresses it's not too hard to drop the traffic at the firewall. A large DDOS would take things offline, though. The recent one on Azure was 15.72 terabits per second from the Aisuru botnet using 500,000 IP addresses. I doubt many of us could have mitigated that amount of traffic. I'm guessing that most people are using rented VMs and virtual hosts for their forums, and a smaller number have their own hardware co-located to various degrees, so the capacity to handle large DDOS attacks is probably limited for most of us. We've had the odd server taken out over the years by a good-sized DDOS; even when the traffic is blackholed, the IP in question is of course out of action.
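
The small-scale case above, a handful of addresses hammering a site, is the sort of thing a ban-after-N-hits rule deals with. Below is a rough Python sketch of that logic. The parameter names `maxretry`, `findtime` and `bantime` are borrowed from fail2ban's jail options, but the `RateBan` class itself is hypothetical and is not how fail2ban is implemented; it only decides who to ban, and the actual packet drop would still happen at the firewall. None of this helps against a volumetric attack that saturates the link.

```python
from collections import defaultdict, deque


class RateBan:
    """Ban an IP after `maxretry` offending requests within `findtime` seconds."""

    def __init__(self, maxretry: int = 5, findtime: float = 60.0, bantime: float = 600.0):
        self.maxretry = maxretry
        self.findtime = findtime
        self.bantime = bantime
        self.hits = defaultdict(deque)  # ip -> timestamps of recent offences
        self.banned_until = {}          # ip -> time at which the ban expires

    def is_banned(self, ip: str, now: float) -> bool:
        until = self.banned_until.get(ip)
        if until is None:
            return False
        if now >= until:
            del self.banned_until[ip]   # ban has expired
            return False
        return True

    def record(self, ip: str, now: float) -> bool:
        """Record one offending request; return True if the IP is banned afterwards."""
        if self.is_banned(ip, now):
            return True
        window = self.hits[ip]
        window.append(now)
        # Forget offences that have fallen out of the look-back window.
        while window and window[0] <= now - self.findtime:
            window.popleft()
        if len(window) >= self.maxretry:
            self.banned_until[ip] = now + self.bantime
            window.clear()
            return True
        return False
```

Timestamps are passed in explicitly so the behaviour is easy to test; in practice they would come from log lines or `time.time()`.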

It'll be interesting to read the full writeup of this issue since we've only got the "file grew too big" information at present. Still always nice (in a nasty way) when someone else is having the woes and it's out of your hands!

smallwheels

Well-known member

It'll be interesting to read the full writeup of this issue since we've only got the "file grew too big" information at present.

They also say the reason for that was an "unusual spike in traffic" of a certain kind, but it was not a deliberate attack against Cloudflare.

Apart from the privacy and other issues with Cloudflare (which astonishingly many forum admins who use Cloudflare seem not to be aware of), it is basically a single point of failure due to its size. As Lifehacker frames it:

- Cloudflare services roughly 24 million websites around the globe, including over 43% of the world's 10,000 most popular sites.

- This giant user base means when Cloudflare goes down, so do all those websites.

Rusty Snippets

Well-known member

I won’t mince words: earlier today we failed our customers and the broader Internet when a problem in @Cloudflare network impacted large amounts of traffic that rely on us. The sites, businesses, and organizations that rely on Cloudflare depend on us being available and I apologize for the impact that we caused.

Transparency about what happened matters, and we plan to share a breakdown with more details in a few hours. In short, a latent bug in a service underpinning our bot mitigation capability started to crash after a routine configuration change we made. That cascaded into a broad degradation to our network and other services. This was not an attack.

That issue, impact it caused, and time to resolution is unacceptable. Work is already underway to make sure it does not happen again, but I know it caused real pain today. The trust our customers place in us is what we value the most and we are going to do what it takes to earn that back.

goyo

Active member

I'm using fail2ban here with good success across 30 servers.

It works approximately as well, users don't have to click a captcha, and we don't have outages. I also don't like sending all traffic over to another company - that's a huge privacy violation against my users.

"huge privacy violation"..

Given this logic, using this forum is a privacy violation,

and using ISPs or the internet at all would be one too.

Nothing personal, but I visited the forum linked in your about section, and it loads a bunch of Google scripts.

So Cloudflare - no advertising profile network or graph, no known cross-site profiling - is a huge privacy violation, but Google - user tracking to build profiles, tied to Google's ad ecosystem etc. - isn't.

smallwheels

Well-known member

"huge privacy violation"..

Given this logic, using this forum is a privacy violation,

and using ISPs or the internet at all would be one too.

Sorry: A statement like that lacks absolutely any competence about privacy, network security and about how the internet works. If this is in fact your level of knowledge you should not offer services on the internet, let alone a forum.

goyo

Active member

Sorry: A statement like that lacks absolutely any competence about privacy, network security and about how the internet works. If this is in fact your level of knowledge you should not offer services on the internet, let alone a forum.

Yep. I've been running my own servers for 35 years, so this is definitely true..

smallwheels

Well-known member

Yep. I've been running my own servers for 35 years, so this is definitely true..

If you have indeed been running your own servers on the internet for 35 years, so since 1990, before the www/http was invented: Congratulations, you must be a veteran. But if you are such a veteran you must know that your statement above was wrong and primitive trolling or rage bait.

goyo

Active member

If you have indeed been running your own servers on the internet for 35 years, so since 1990, before the www/http was invented: Congratulations, you must be a veteran. But if you are such a veteran you must know that your statement above was wrong and primitive trolling or rage bait.

Questioning the "another company" logic is not a "trolling/rage bait" category.

ES Dev Team

Well-known member

Google Analytics is WAY less privacy violating than sending all your users' data, including the passwords they type and the private messages they send, to a third party.

I don't like Cloudflare for three reasons:

- all users' data is sent to a third party who can decrypt it. And this may bite people in the future.

- the serial dependency means when they're down (and this happens a few times a year lately), your site is down.

- it does not allow enough granularity in settings and cannot be as intelligent as a protection mechanism that has knowledge of what's happening inside the entire server stack. Therefore when things get bad, you have to force everyone to click a captcha to proceed to the site. I don't have to do that.

So anyway, I don't agree.

eva2000

Well-known member

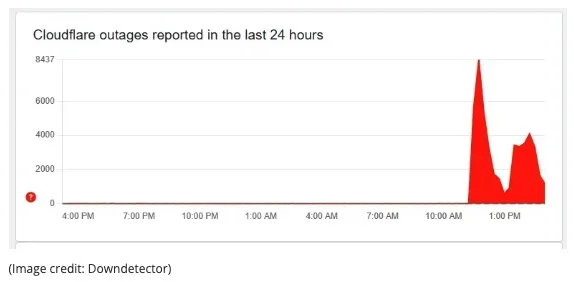

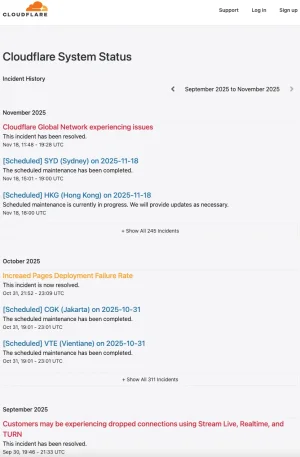

Looks like Cloudflare outage due to their bot mitigation system config issue that impacted other services

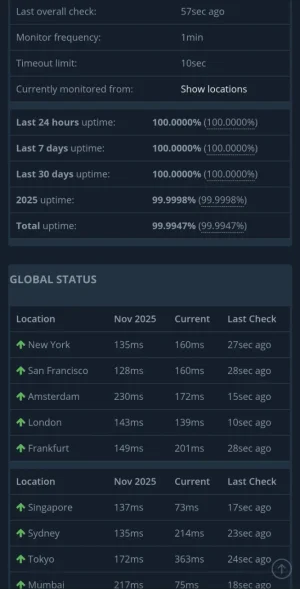

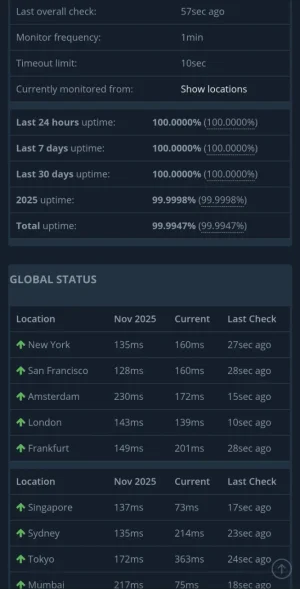

I noticed my Cloudflare Enterprise plan site and a few other known Enterprise plan sites remained online throughout the outage. I guess they weren't using the impacted services, or Cloudflare Advanced Enterprise Bot Management is actually different from what the regular free, pro and business plans use?

My Enterprise site uptime 100%

eva2000

Well-known member

Cloudflare outage post mortem https://blog.cloudflare.com/18-november-2025-outage/

The issue was not caused, directly or indirectly, by a cyber attack or malicious activity of any kind. Instead, it was triggered by a change to one of our database systems' permissions which caused the database to output multiple entries into a “feature file” used by our Bot Management system. That feature file, in turn, doubled in size. The larger-than-expected feature file was then propagated to all the machines that make up our network.

The software running on these machines to route traffic across our network reads this feature file to keep our Bot Management system up to date with ever changing threats. The software had a limit on the size of the feature file that was below its doubled size. That caused the software to fail.

After we initially wrongly suspected the symptoms we were seeing were caused by a hyper-scale DDoS attack, we correctly identified the core issue and were able to stop the propagation of the larger-than-expected feature file and replace it with an earlier version of the file. Core traffic was largely flowing as normal by 14:30. We worked over the next few hours to mitigate increased load on various parts of our network as traffic rushed back online. As of 17:06 all systems at Cloudflare were functioning as normal.

We are sorry for the impact to our customers and to the Internet in general. Given Cloudflare's importance in the Internet ecosystem any outage of any of our systems is unacceptable. That there was a period of time where our network was not able to route traffic is deeply painful to every member of our team. We know we let you down today.

This post is an in-depth recount of exactly what happened and what systems and processes failed. It is also the beginning, though not the end, of what we plan to do in order to make sure an outage like this will not happen again.
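
To make the failure mode in the excerpt concrete, here is a small Python sketch of a loader with a hard cap on feature entries. It is not Cloudflare's code and not their stated fix: the names `parse_features`, `FeatureStore` and `FeatureFileTooLarge`, and the cap used in the example, are all hypothetical. It contrasts a parser that simply raises on an oversized file with a reload step that rejects the bad file and keeps serving the last version that loaded cleanly.

```python
class FeatureFileTooLarge(Exception):
    """Raised when a feature file has more entries than the loader allows."""


def parse_features(lines, max_features: int) -> list:
    """Parse one feature per non-empty line, enforcing a hard cap."""
    features = [line.strip() for line in lines if line.strip()]
    if len(features) > max_features:
        raise FeatureFileTooLarge(
            f"{len(features)} entries exceeds limit of {max_features}"
        )
    return features


class FeatureStore:
    """Holds the active feature list and survives a bad update."""

    def __init__(self, max_features: int):
        self.max_features = max_features
        self.active = []

    def reload(self, lines) -> bool:
        """Try to load a new file; on failure keep the last good version."""
        try:
            self.active = parse_features(lines, self.max_features)
            return True
        except FeatureFileTooLarge:
            # Refuse the update instead of letting the error take the process down.
            return False


store = FeatureStore(max_features=3)
store.reload(["f1", "f2", "f3"])        # accepted
store.reload(["f1", "f2", "f3"] * 2)    # doubled file is rejected, old list stays active
```

Whether falling back silently is the right call depends on the system; a rejected update should at least raise an alert so the stale configuration gets noticed.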
