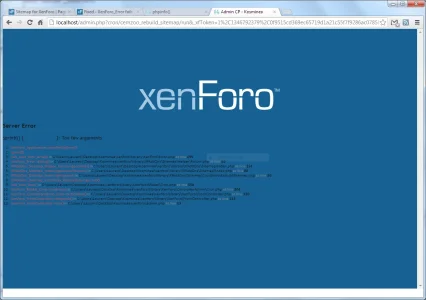

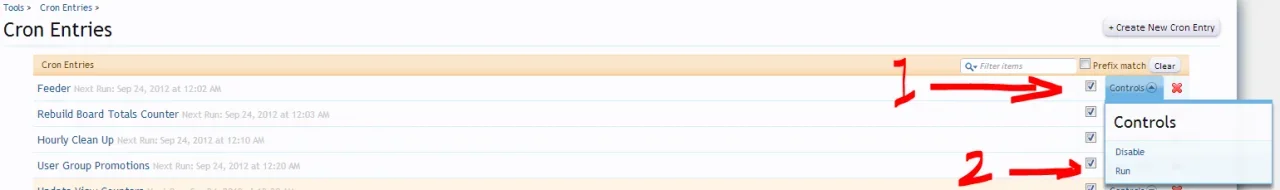

I can confirm this with version 1.1.2 (not 1.1.2d; I am unable to upgrade at the moment), and only in Debug mode. The cause is in Sitemap/Helper/Forum.php on line 50.

When I try to run the cron manually, I get:

"sprintf() [function.sprintf]: Too few arguments"

Any suggestions? Thanks.
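PHP's sprintf() reports "Too few arguments" when its format string contains more conversion specifications than arguments supplied. One possible trigger (an assumption, not something confirmed in this thread) is a URL with percent-encoded characters such as `%20` ending up inside a format string, because each `%` sequence is then parsed as a specifier. The sketch below uses a made-up URL to show two ways of keeping such a value out of the format string:

```php
<?php
// Hypothetical URL for illustration only; not taken from the Sitemap addon.
$url = 'http://example.com/forums/my%20forum.5/';

// Unsafe: the URL becomes part of the format string, so "%20f" is read as
// "float, width 20" and sprintf() expects an argument that is never passed.
// PHP 5/7 warn "Too few arguments"; PHP 8 throws an ArgumentCountError.
// sprintf('-- Excluded: ' . $url);

// Safe option 1: keep the format string fixed and pass the URL as an argument.
$message = sprintf('-- Excluded: %s', $url);

// Safe option 2: escape literal percent signs ("%" -> "%%") before the
// string is used as a format.
$escaped = sprintf('-- Excluded: ' . str_replace('%', '%%', $url));

echo $message, "\n"; // -- Excluded: http://example.com/forums/my%20forum.5/
```

Option 1 is usually the simpler choice, since the format string stays a constant and no escaping step can be forgotten.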

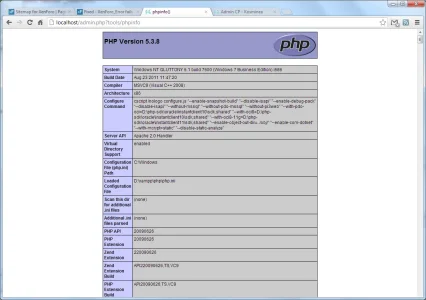

PHP:

XenForo_Error::debug('-- Excluded: ' . $url);

@Rigel

I just finished developing a couple of helpers for additional node types (Waindigo's "Library" addon adds library and article nodes). How should I distribute them? Would you mind including them in the Sitemap addon yourself, or would you prefer that I post my code modifications here directly?