Ok. Then the 4XX responses are your biggest problem.

If that's 46% of your 1.94M crawl requests, that's a ton. It wasn't originally clear if Google just wasn't crawling your site, but with 1.94M crawl requests, that seems like a solid amount.

Yes, I agree...the 46% value for "Other Client Error (4xx)" in the Crawl Stats area does stand out as something to investigate further.

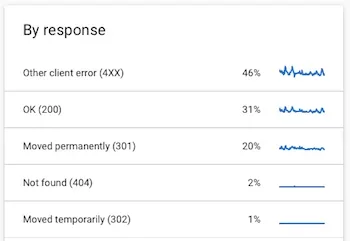

Here are my Crawl Stats (By Response):

Here's my confusion...what does that 46% represent (46% of what number)? If it's 46% of the 1.94M crawl requests...most definitely this could be the BIG issue I've been looking for...especially since 46% is very close to the 50%+ drop in traffic I've been referring to since the migration to XF.
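For a sense of scale, here is a quick back-of-the-envelope calculation. It assumes the 46% really is a share of the 1.94M total crawl requests (which is exactly what's unclear), and that the total covers the same 90-day window as the URL lists. Both are assumptions, not something Search Console confirms here.

```python
# Assumption: 46% is a share of the 1.94M total crawl requests.
total_crawl_requests = 1_940_000
percent_4xx = 46

# Integer math avoids floating-point rounding noise.
estimated_4xx_requests = total_crawl_requests * percent_4xx // 100

# Assumption: the total spans a 90-day reporting window.
days_in_window = 90
estimated_4xx_per_day = estimated_4xx_requests // days_in_window

print(f"Estimated 4xx crawl requests: {estimated_4xx_requests:,}")
print(f"Roughly per day: {estimated_4xx_per_day:,}")
```

If those assumptions hold, that would be on the order of 890K requests, which is hard to square with only 11 listed URLs.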

Curiously...if I click on the Other Client Error section...it only lists 11 URLs for the last 90 days. If this 46% was 46% of 1.94M...I would expect to see a whole lot more than 11 URLs listed for the past 90 days. Also, when I investigated all 11 URLs...it turns out most of them are deleted threads...or threads in the private Staff area.

For comparison...if I click on the "Moved Temporarily (302)" section (which is only 1%)...it lists 999 URLs. Same thing with "Not found (404)" (2%)...it lists 1000 URLs. 46% must surely represent way more than 11 URLs...just very curious why Google Search Console is only listing 11.

Of course the BIG question is...how can this be resolved?...I'm not exactly sure where to start.

I know you listed some options:

- Permission set wrong

- Google is being directed to pages it's not supposed to be. Sitemap? Bad linking somewhere?

- other less common ones:

Surely if we're talking 46% of 1.94M (or at least a very very big number)...the issue must be something that's set up incorrectly to have this large of an effect (it's certainly not a bunch of individual dead links that need to be investigated & fixed one by one).
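One way to look for a site-wide pattern rather than individual dead links would be to check the server's own access log for Googlebot requests that got a 4xx. Below is a minimal sketch, assuming the server writes Apache/Nginx "combined" format logs. The function name, the sample lines, and the paths in them are made up for illustration. Matching on "Googlebot" in the user agent is only a rough filter, since that string can be spoofed.

```python
import re
from collections import Counter

# Pulls the request path, status code, and user agent out of a
# "combined" format log line (assumed format; adjust for your server).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def summarize_googlebot_4xx(lines):
    """Count Googlebot 4xx responses by (status, first path segment)."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.search(line)
        if not m or "Googlebot" not in m.group("agent"):
            continue
        status = int(m.group("status"))
        if 400 <= status < 500:
            # Drop any query string, then keep only the first path segment.
            path = m.group("path").split("?", 1)[0]
            segment = "/" + path.lstrip("/").split("/", 1)[0]
            counts[(status, segment)] += 1
    return counts

# Hypothetical sample lines, not taken from any real log.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [01/Jan/2024:00:00:01 +0000] "GET /threads/a.1/ HTTP/1.1" 403 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [01/Jan/2024:00:00:02 +0000] "GET /threads/b.2/ HTTP/1.1" 403 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [01/Jan/2024:00:00:03 +0000] "GET /login/?x=1 HTTP/1.1" 401 256 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [01/Jan/2024:00:00:04 +0000] "GET /forums/ HTTP/1.1" 200 9000 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [01/Jan/2024:00:00:05 +0000] "GET /members/x/ HTTP/1.1" 403 512 "-" "Mozilla/5.0"',
]

summary = summarize_googlebot_4xx(sample)
for (status, segment), n in summary.most_common():
    print(status, segment, n)
```

If one status code and one path prefix dominate the counts on a real log, that would point toward a single misconfiguration (permissions, a redirect rule, and so on) rather than scattered dead links.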

Additional thoughts I had...could this be something set up incorrectly in the:

- robots.txt file

- .htaccess file

- incorrect redirects

- etc
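For the robots.txt possibility, a quick sanity check could be sketched with Python's standard `urllib.robotparser`. The rules and URLs below are hypothetical placeholders, not my actual file, and Python's parser may not match Google's own robots.txt handling in every edge case, so treat the result as a rough indication only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; paste in the real file's lines instead.
robots_lines = [
    "User-agent: *",
    "Disallow: /account/",
    "Disallow: /admin.php",
]

parser = RobotFileParser()
parser.parse(robots_lines)

# Hypothetical URLs to test; example.com stands in for the real domain.
urls_to_check = [
    "https://example.com/threads/some-thread.123/",
    "https://example.com/account/details",
]

results = {url: parser.can_fetch("Googlebot", url) for url in urls_to_check}
for url, allowed in results.items():
    print("allowed" if allowed else "blocked", url)
```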

I'm open to ideas (anyone). Thanks