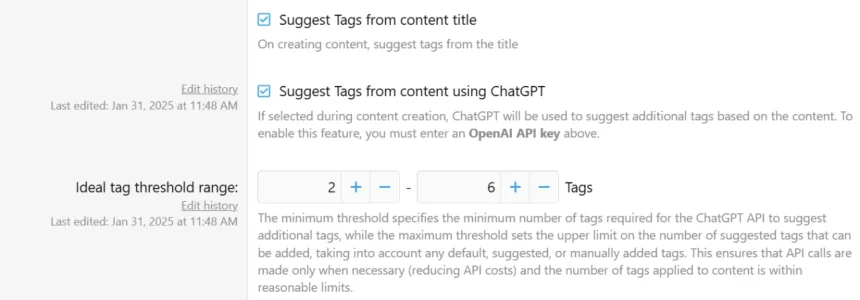

There are two issues. The first is cost: it would be easy to burn through your OpenAI credits quickly.

One workaround would be a semi-manual ("auto-manual") feature that uses the user's regular ChatGPT allowance (i.e., the chat interface rather than the OpenAI API, possibly even a free account), Grok, or another AI service.

Export

Export N threads (each with its thread ID, thread title, and first-post message) in JSON format. The user uploads that file with a prompt like: "For each entry, examine the thread_title and thread_message of the post; give me X thread_tags that closely relate to it, each tag no longer than 3 words and each word no longer than 10 characters; try to make up to 25% of the tags hot topics on the subject of thread_message, if they relate."
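A minimal sketch of the export step, assuming the thread records are already loaded as plain dicts (a real add-on would read them from the forum database). `export_batches` and `build_prompt` are hypothetical helper names; the field names come from this post, and the final sentence of the prompt (asking for the same JSON back) is my own assumption to keep the output importable.

```python
import json


def export_batches(threads, batch_size=500):
    """Split threads into batches and serialize each batch as a JSON array string."""
    batches = []
    for start in range(0, len(threads), batch_size):
        chunk = threads[start:start + batch_size]
        # Only the fields the AI needs, plus thread_id so tags can be matched back later.
        records = [
            {
                "thread_id": t["thread_id"],
                "thread_title": t["thread_title"],
                "thread_message": t["thread_message"],
            }
            for t in chunk
        ]
        batches.append(json.dumps(records, indent=2, ensure_ascii=False))
    return batches


def build_prompt(tags_per_thread):
    """Fill the number of tags (X) into the prompt text from the post."""
    return (
        "For each entry, examine the thread_title and thread_message of the post; "
        f"give me {tags_per_thread} thread_tags that closely relate to it, "
        "each tag no longer than 3 words and each word no longer than 10 characters; "
        "try to make up to 25% of the tags hot topics on the subject of thread_message, "
        "if they relate. "
        # Assumption: asking for the same JSON back keeps the file re-importable.
        'Return the same JSON with a comma-separated "thread_tags" field added to each entry.'
    )
```

For example, five threads with `batch_size=2` produce three files (2 + 2 + 1 threads).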

In theory, you'd get back a new JSON file with an added "thread_tags" field populated with something like "this is a, this tag, about something". That file could then be imported, avoiding OpenAI credits for batch processing.
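Reading the returned file back could look like the sketch below. It assumes the AI returns a JSON array whose entries keep `thread_id` and add `thread_tags` as one comma-separated string, as in the example above; accepting a list as well is a defensive assumption, since chat models do not always follow the requested format. `parse_tagged_batch` is a hypothetical name.

```python
import json


def parse_tagged_batch(json_text):
    """Map each thread_id to its list of tags from the returned JSON array."""
    tags_by_thread = {}
    for record in json.loads(json_text):
        raw = record.get("thread_tags", "")
        # The post's example is one comma-separated string; accept a list too.
        items = raw if isinstance(raw, list) else str(raw).split(",")
        tags_by_thread[record["thread_id"]] = [str(t).strip() for t in items if str(t).strip()]
    return tags_by_thread


returned = (
    '[{"thread_id": 42, "thread_title": "...", "thread_message": "...", '
    '"thread_tags": "this is a, this tag, about something"}]'
)
print(parse_tagged_batch(returned))  # {42: ['this is a', 'this tag', 'about something']}
```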

I'd have to experiment with how many threads it can tag per upload, because batches of fewer than 100 would take far too long. But if it could process 500 per upload for "free" (I'm not sure about unsubscribed ChatGPT accounts) with GPT-4o or another model (or even with Grok), a batch update wouldn't use any OpenAI credits, as long as the file keeps the same format: "thread_id" (for easier importing), "thread_title", "thread_message", and "thread_tags" (added).
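Since the whole approach depends on the file coming back in the same format, the add-on could check each returned batch before importing it. This is a sketch under assumptions: it treats X as an upper bound on the tag count and applies the prompt's limits (3 words per tag, 10 characters per word); `validate_batch` and its helpers are hypothetical names.

```python
# Field names from the post; "thread_tags" is the one the AI adds.
REQUIRED_FIELDS = ("thread_id", "thread_title", "thread_message", "thread_tags")


def split_tags(raw):
    """Accept either a comma-separated string or a list of tags."""
    items = raw if isinstance(raw, list) else str(raw).split(",")
    return [str(item).strip() for item in items if str(item).strip()]


def tag_is_valid(tag, max_words=3, max_word_len=10):
    """Check the prompt's rules: at most 3 words, each word at most 10 characters."""
    words = tag.split()
    return 0 < len(words) <= max_words and all(len(w) <= max_word_len for w in words)


def validate_batch(records, max_tags):
    """Return human-readable problems; an empty list means the batch looks importable."""
    problems = []
    for index, record in enumerate(records):
        missing = [field for field in REQUIRED_FIELDS if field not in record]
        if missing:
            problems.append(f"entry {index}: missing {', '.join(missing)}")
            continue
        tags = split_tags(record["thread_tags"])
        thread = record["thread_id"]
        if len(tags) > max_tags:
            problems.append(f"thread {thread}: {len(tags)} tags, expected at most {max_tags}")
        for tag in tags:
            if not tag_is_valid(tag):
                problems.append(f"thread {thread}: tag {tag!r} breaks the length rules")
    return problems
```

Rejecting a bad batch early means the user only has to re-run that one file instead of discovering the problem after a partial import.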

The add-on would export all the JSON files as one zip archive so the user could unzip them and process them one by one. When everything is done, the user zips the tagged files back up and uploads the archive, and the add-on decompresses it and applies the tags to all threads. ChatGPT can throttle you if you send too much, so 50,000+ threads might take a day to finish, but it would be free, unlike with OpenAI credits.
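The zip round trip could be sketched with Python's standard `zipfile` module, working in memory for simplicity. The file naming (`batch_0001.json`, ...) and the helper names are hypothetical; skipping `__MACOSX/` entries is an assumption for archives re-zipped on macOS. `uploads_needed` just does the batch arithmetic: at 500 threads per upload, 50,000 threads means 100 uploads.

```python
import io
import json
import math
import zipfile


def pack_batches(batches):
    """Write each JSON batch string into an in-memory zip as batch_0001.json, batch_0002.json, ..."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for number, batch in enumerate(batches, start=1):
            archive.writestr(f"batch_{number:04d}.json", batch)
    return buffer.getvalue()


def unpack_tags(zip_bytes):
    """Read every tagged .json file from the returned zip and collect thread_id -> tag list."""
    tags_by_thread = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in sorted(archive.namelist()):
            # Skip folders and macOS metadata entries added when re-zipping.
            if not name.endswith(".json") or name.startswith("__MACOSX/"):
                continue
            for record in json.loads(archive.read(name).decode("utf-8")):
                raw = record.get("thread_tags", "")
                items = raw if isinstance(raw, list) else str(raw).split(",")
                tags_by_thread[record["thread_id"]] = [str(t).strip() for t in items if str(t).strip()]
    return tags_by_thread


def uploads_needed(thread_count, batch_size=500):
    """Number of files the user has to run through the chat interface."""
    return math.ceil(thread_count / batch_size)
```

Keeping `thread_id` in every entry is what makes the final step safe: the add-on can apply tags by ID no matter what order the files come back in.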