Google does not seem to be picking up my site's threads and posts. I copied the titles of several forum categories and older threads into Google, and none of them show up at all. These are all unique titles, too. I'm baffled.

Site: https://www.talkjesus.com/

What I have confirmed so far:

sitemap.php is in root directory

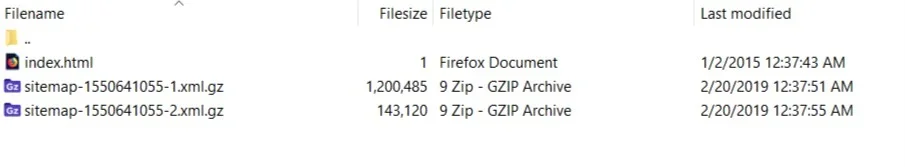

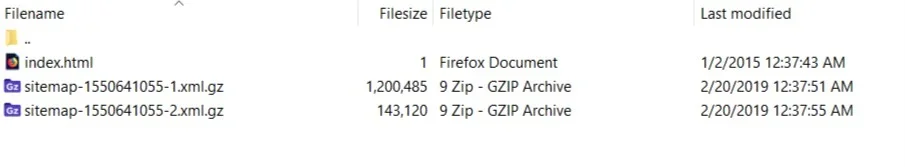

/public_html/internal_data/sitemaps has 2 sitemap files there too (see attached).

Domain is 16 years old

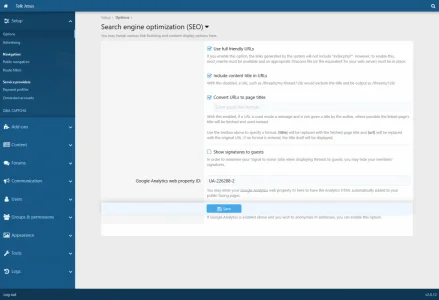

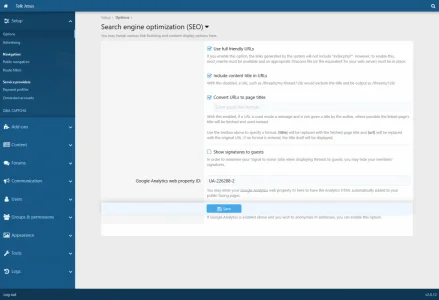

SEO set up correctly in admin panel

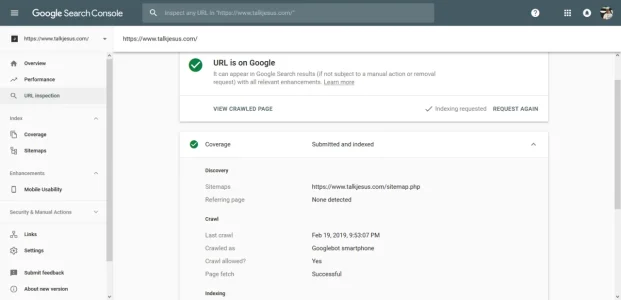

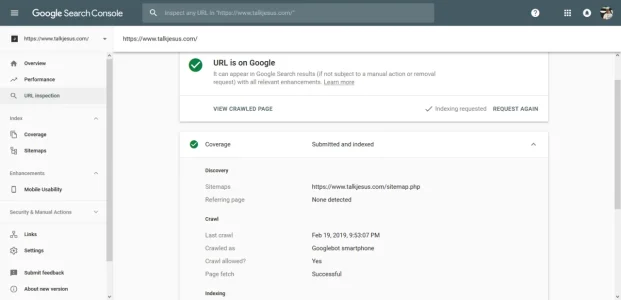

Google Search console: site verified + sitemap there as "success"

I would appreciate some help here. Thanks.

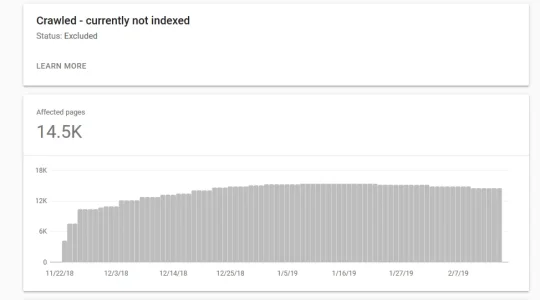

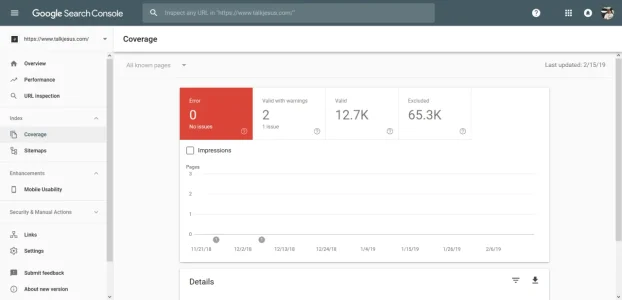

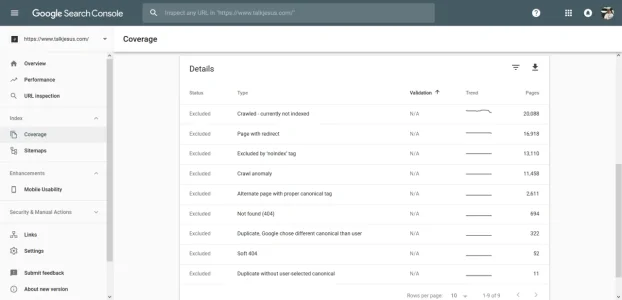

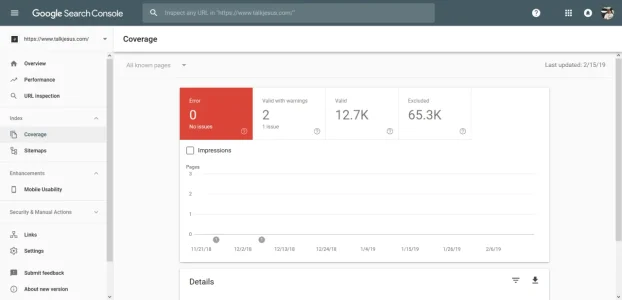

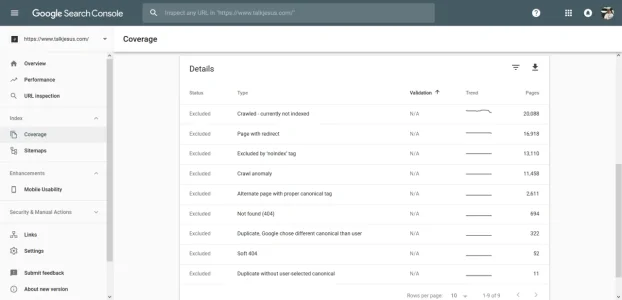
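A "success" status for the sitemap only says that it was fetched and parsed, so it may be worth checking which URLs it actually lists. Below is a minimal sketch of such a check, assuming the sitemap follows the standard sitemaps.org schema. The function name `list_sitemap_locs` and the example.com URLs in the sample are hypothetical placeholders, not taken from the real site.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"

# Hypothetical sample; a real check would load the XML the site serves.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/threads/first-thread.1/</loc></url>
  <url><loc>https://example.com/threads/second-thread.2/</loc></url>
</urlset>"""


def list_sitemap_locs(xml_text):
    """Return (kind, locs): kind is 'index' for a sitemap index
    or 'urlset' for a plain sitemap; locs are the <loc> values."""
    root = ET.fromstring(xml_text)
    if root.tag == SITEMAP_NS + "sitemapindex":
        kind, child = "index", "sitemap"
    elif root.tag == SITEMAP_NS + "urlset":
        kind, child = "urlset", "url"
    else:
        raise ValueError(f"unexpected root element: {root.tag}")
    locs = [
        loc.text.strip()
        for entry in root.findall(SITEMAP_NS + child)
        for loc in entry.findall(SITEMAP_NS + "loc")
        if loc.text
    ]
    return kind, locs


if __name__ == "__main__":
    kind, locs = list_sitemap_locs(SAMPLE_SITEMAP)
    print(kind, len(locs))
    for loc in locs:
        print(loc)
```

If the result is an index, each listed file would need the same treatment to get at the thread URLs themselves.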

Google Coverage

Saw this just now.

How do I fix this? 65,000 pages excluded cannot be right.
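Pages can be excluded for several reasons, and the count alone does not say which one applies here. Two things that can be checked on an individual thread page are a `noindex` directive (in a robots meta tag or an `X-Robots-Tag` response header) and the canonical URL the page declares. The following is a rough sketch of that check using only the Python standard library. The names `RobotsMetaParser` and `indexing_hints` are made up for illustration, and the HTML would have to be fetched separately.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects robots meta directives and the canonical link from a page."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attrs.get("content") or ""
            self.robots.extend(
                token.strip().lower()
                for token in content.split(",")
                if token.strip()
            )
        elif tag == "link" and "canonical" in rel:
            self.canonical = attrs.get("href")


def indexing_hints(html_text, x_robots_tag=""):
    """Return whether the page asks not to be indexed, plus its canonical URL.

    x_robots_tag is the raw value of the X-Robots-Tag header, if any.
    """
    parser = RobotsMetaParser()
    parser.feed(html_text)
    header = [t.strip().lower() for t in x_robots_tag.split(",") if t.strip()]
    directives = parser.robots + header
    return {
        # "none" is shorthand for "noindex, nofollow".
        "noindex": "noindex" in directives or "none" in directives,
        "canonical": parser.canonical,
    }
```

A page that reports `noindex` as true, or a canonical pointing at a different URL than the one requested, would be a candidate explanation for an exclusion, though not proof of it.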

This is my .htaccess:

Code:

RewriteEngine On

RewriteCond %{HTTPS} off

RewriteRule ^(.*)$ https://www.talkjesus.com/$1 [R,L]

# Mod_security can interfere with uploading of content such as attachments. If you

# cannot attach files, remove the "#" from the lines below.

#<IfModule mod_security.c>

# SecFilterEngine Off

# SecFilterScanPOST Off

#</IfModule>

## EXPIRES CACHING ##

<IfModule mod_expires.c>

ExpiresActive On

ExpiresByType image/jpg "access 1 year"

ExpiresByType image/jpeg "access 1 year"

ExpiresByType image/gif "access 1 year"

ExpiresByType image/png "access 1 year"

ExpiresByType text/css "access 1 month"

ExpiresByType text/html "access 1 month"

ExpiresByType application/pdf "access 1 month"

ExpiresByType text/x-javascript "access 1 month"

ExpiresByType application/x-shockwave-flash "access 1 month"

ExpiresByType image/x-icon "access 1 year"

ExpiresDefault "access 1 month"

</IfModule>

## EXPIRES CACHING ##

# TN - BEGIN Cache-Control Headers

<ifModule mod_headers.c>

<filesMatch "\.(ico|jpe?g|png|gif|swf)$">

Header set Cache-Control "public"

</filesMatch>

<filesMatch "\.(css)$">

Header set Cache-Control "public"

</filesMatch>

<filesMatch "\.(js)$">

Header set Cache-Control "private"

</filesMatch>

<filesMatch "\.(x?html?|php)$">

Header set Cache-Control "private, must-revalidate"

</filesMatch>

</ifModule>

# TN - END Cache-Control Headers

Header unset Pragma

FileETag None

Header unset ETag

<IfModule mod_gzip.c>

mod_gzip_on Yes

mod_gzip_dechunk Yes

mod_gzip_item_include file \.(html?|txt|css|js|php|pl)$

mod_gzip_item_include handler ^cgi-script$

mod_gzip_item_include mime ^text/.*

mod_gzip_item_include mime ^application/x-javascript.*

mod_gzip_item_exclude mime ^image/.*

mod_gzip_item_exclude rspheader ^Content-Encoding:.*gzip.*

</IfModule>

<IfModule mod_deflate.c>

# Compress HTML, CSS, JavaScript, Text, XML and fonts

AddOutputFilterByType DEFLATE application/javascript

AddOutputFilterByType DEFLATE application/rss+xml

AddOutputFilterByType DEFLATE application/vnd.ms-fontobject

AddOutputFilterByType DEFLATE application/x-font

AddOutputFilterByType DEFLATE application/x-font-opentype

AddOutputFilterByType DEFLATE application/x-font-otf

AddOutputFilterByType DEFLATE application/x-font-truetype

AddOutputFilterByType DEFLATE application/x-font-ttf

AddOutputFilterByType DEFLATE application/x-javascript

AddOutputFilterByType DEFLATE application/xhtml+xml

AddOutputFilterByType DEFLATE application/xml

AddOutputFilterByType DEFLATE font/opentype

AddOutputFilterByType DEFLATE font/otf

AddOutputFilterByType DEFLATE font/ttf

AddOutputFilterByType DEFLATE image/svg+xml

AddOutputFilterByType DEFLATE image/x-icon

AddOutputFilterByType DEFLATE text/css

AddOutputFilterByType DEFLATE text/html

AddOutputFilterByType DEFLATE text/javascript

AddOutputFilterByType DEFLATE text/plain

AddOutputFilterByType DEFLATE text/xml

# Remove browser bugs (only needed for really old browsers)

BrowserMatch ^Mozilla/4 gzip-only-text/html

BrowserMatch ^Mozilla/4\.0[678] no-gzip

BrowserMatch \bMSIE !no-gzip !gzip-only-text/html

Header append Vary User-Agent

</IfModule>

ErrorDocument 401 default

ErrorDocument 403 default

ErrorDocument 404 default

ErrorDocument 500 default

<IfModule mod_rewrite.c>

RewriteEngine On

# If you are having problems with the rewrite rules, remove the "#" from the

# line that begins "RewriteBase" below. You will also have to change the path

# of the rewrite to reflect the path to your XenForo installation.

#RewriteBase /

# This line may be needed to enable WebDAV editing with PHP as a CGI.

#RewriteRule .* - [E=HTTP_AUTHORIZATION:%{HTTP:Authorization}]

RewriteCond %{REQUEST_FILENAME} -f [OR]

RewriteCond %{REQUEST_FILENAME} -l [OR]

RewriteCond %{REQUEST_FILENAME} -d

RewriteRule ^.*$ - [NC,L]

RewriteCond %{REQUEST_URI} !^/[0-9]+\..+\.cpaneldcv$

RewriteCond %{REQUEST_URI} !^/[A-F0-9]{32}\.txt(?:\ Comodo\ DCV)?$

RewriteCond %{REQUEST_URI} !^/\.well-known/acme-challenge/[0-9a-zA-Z_-]+$

RewriteRule ^(data/|js/|styles/|install/|favicon\.ico|crossdomain\.xml|robots\.txt) - [NC,L]

RewriteRule ^.*$ index.php [NC,L]

</IfModule>

<Files 403.shtml>

order allow,deny

allow from all

</Files>

# php -- BEGIN cPanel-generated handler, do not edit

# NOTE this account's php is controlled via FPM and the vhost, this is a place holder.

# Do not edit. This next line is to support the cPanel php wrapper (php_cli).

# AddType application/x-httpd-ea-php72 .php .phtml

# php -- END cPanel-generated handler, do not edit
