Hello everyone. I'm trying to scan my site for bad or outdated links.

The issue is that if the home page URL is used as the starting point, the site is too large to scan in one go (scans can take hours and hours, and that's with only a portion of the site being scanned).

What I would like to do is limit the scope of each scan by only scanning one forum node at a time.

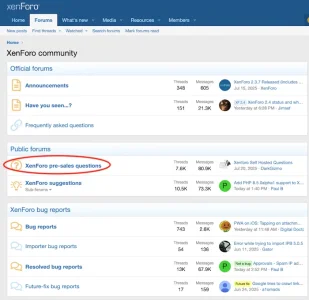

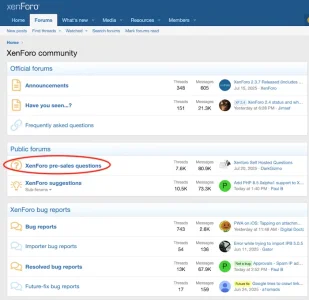

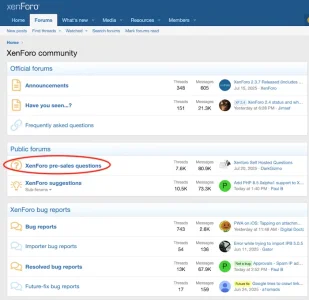

For example, using xenforo.com: say I only want to scan the "XenForo pre-sales questions" node. Clicking that node gives the URL https://xenforo.com/community/forums/xenforo-pre-sales-questions.5/

When I use this URL as the scan's starting point, only the thread titles in the node are scanned; the individual threads and their posts are not. I've tried other URLs, but none of them scans all the thread posts in a single forum node.

Again, my goal is to scan all thread posts in a single XenForo forum node (as described above).

Obviously I'm getting something wrong. If anyone knows the URL syntax for scanning all the thread posts in a single forum node, I'd greatly appreciate it!
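
To make the goal concrete, here is a rough Python sketch of the kind of scoped scan I mean (untested against a live forum). It rests on an assumption I haven't confirmed: that XenForo thread pages live at URLs like `https://xenforo.com/community/threads/<title>.<id>/` rather than under the node's own URL, which would explain why a scan limited to the node URL only picks up the thread titles. So the sketch walks the node's listing pages first, collects the threads they link to, then walks every page of each thread and checks the links in the posts. `scan_node`, `thread_root`, `http_fetch` and the `/community/threads/` default are my own hypothetical names, not part of XenForo or any link-checker tool.

```python
import urllib.request
from collections import deque
from html.parser import HTMLParser
from http.client import HTTPException
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.hrefs.extend(value for name, value in attrs if name == "href" and value)


def extract_links(page_url: str, html: str) -> list[str]:
    """Absolute http(s) links on a page, with #fragments dropped (mailto: etc. skipped)."""
    parser = LinkCollector()
    parser.feed(html)
    links = (urldefrag(urljoin(page_url, href))[0] for href in parser.hrefs)
    return [link for link in links if urlparse(link).scheme in ("http", "https")]


def thread_root(link: str, threads_path: str) -> Optional[str]:
    """Map any thread URL (page-2, post links, ...) to the thread's first-page URL.
    ASSUMPTION: threads live at <threads_path><title>.<id>/ (hypothetical layout)."""
    parts = urlparse(link)
    if not parts.path.startswith(threads_path):
        return None
    slug = parts.path[len(threads_path):].split("/", 1)[0]
    return f"{parts.scheme}://{parts.netloc}{threads_path}{slug}/" if slug else None


def scan_node(node_url: str, fetch: Callable[[str], Optional[str]],
              threads_path: str = "/community/threads/") -> list[str]:
    """Check every link on one node's listing pages and in every thread it lists.
    fetch(url) returns the page HTML, or None if the link is broken."""
    cache: dict[str, Optional[str]] = {}

    def get(url: str) -> Optional[str]:
        if url not in cache:  # each URL is requested at most once per scan
            cache[url] = fetch(url)
        return cache[url]

    def crawl(start: str, prefix: str, follow: Callable[[str], bool]) -> None:
        # Breadth-first: pages under `prefix` are parsed, anything else is only checked.
        visited, queue = set(), deque([start])
        while queue:
            url = queue.popleft()
            if url in visited:
                continue
            visited.add(url)
            html = get(url)
            if html is None or not url.startswith(prefix):
                continue
            queue.extend(link for link in extract_links(url, html) if follow(link))

    # Pass 1: the node's listing pages (page-2, page-3, ...); collect thread URLs.
    roots: set[str] = set()

    def listing_link(link: str) -> bool:
        root = thread_root(link, threads_path)
        if root:
            roots.add(root)
            return False  # threads are crawled in pass 2 instead
        return True

    crawl(node_url, node_url, listing_link)

    # Pass 2: every page of every listed thread, checking all links in the posts.
    for root in sorted(roots):
        crawl(root, root, lambda link: True)

    return sorted(url for url, html in cache.items() if html is None)


def http_fetch(url: str) -> Optional[str]:
    """Real fetcher: returns the body, or None on an HTTP error or network failure."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except (OSError, ValueError, HTTPException):
        return None
```

Usage would be something like `broken = scan_node("https://xenforo.com/community/forums/xenforo-pre-sales-questions.5/", http_fetch)`. The fetcher is passed in so the crawl logic can be tried on fake pages without hitting the site; a real run should also pause between requests and respect the site's robots.txt.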

Thanks