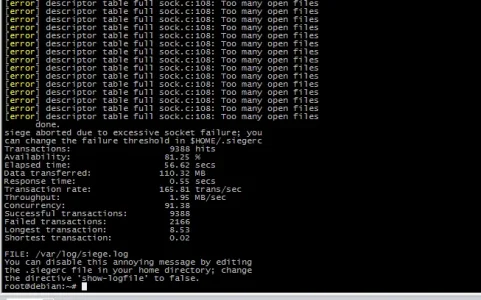

```
# siege -u https://www.axivo.com/forums/ -c 5000 -d 30 -t 1M
Lifting the server siege...      done.

Transactions:                  18042 hits
Availability:                 100.00 %
Elapsed time:                  59.57 secs
Data transferred:             151.95 MB
Response time:                  0.05 secs
Transaction rate:             302.87 trans/sec
Throughput:                     2.55 MB/sec
Concurrency:                   15.06
Successful transactions:       18042
Failed transactions:               0
Longest transaction:            0.38
Shortest transaction:           0.00
```
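The summary figures are not independent: the rates are totals divided by elapsed time, and siege's "Concurrency" is, per its documentation, the total time spent in transactions divided by elapsed time, i.e. the average number of requests in flight (not the 5000 simulated users set with `-c`; with `-d 30` each user also pauses between requests). A small sketch, assuming those definitions, recomputes them from the raw totals above:

```python
# Raw totals copied from the siege summary above.
transactions = 18042   # "Transactions" (hits)
elapsed_s = 59.57      # "Elapsed time" in seconds
data_mb = 151.95       # "Data transferred" in MB
concurrency = 15.06    # "Concurrency" (average requests in flight)

# Transaction rate: completed transactions per second of wall-clock time.
rate = transactions / elapsed_s

# Throughput: data transferred per second of wall-clock time.
throughput = data_mb / elapsed_s

# Little's law (L = lambda * W): average in-flight requests divided by the
# arrival rate gives the mean response time per transaction.
mean_response_s = concurrency / rate

print(f"rate={rate:.2f} trans/sec")
print(f"throughput={throughput:.2f} MB/sec")
print(f"mean response={mean_response_s:.3f} secs")
```

The recomputed values match the reported 302.87 trans/sec, 2.55 MB/sec and the rounded 0.05 secs response time, so the run is internally consistent.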

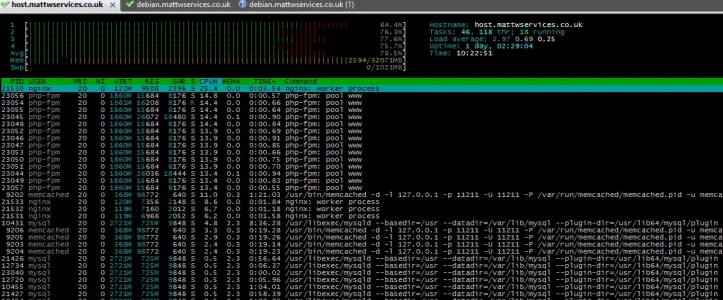

```
# top -M
top - 04:16:49 up  2:28,  2 users,  load average: 0.01, 0.04, 0.00
Tasks: 158 total,   1 running, 157 sleeping,   0 stopped,   0 zombie
Cpu(s): 10.6%us,  2.0%sy,  0.0%ni, 86.9%id,  0.3%wa,  0.0%hi,  0.3%si,  0.0%st
Mem:  7770.230M total, 1323.508M used, 6446.723M free,   30.242M buffers
Swap: 8191.992M total,    0.000k used, 8191.992M free,  312.996M cached

  PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
 9780 nginx     20   0  194m  45m 2528 S 15.3  0.6   0:12.82 nginx
 9779 nginx     20   0  194m  45m 2424 S  9.0  0.6   0:15.33 nginx
 9778 nginx     20   0  194m  45m 2540 S  2.3  0.6   0:16.99 nginx
 1341 named     20   0  390m  40m 3084 S  1.0  0.5   0:14.08 named
 9781 nginx     20   0  194m  45m 2524 S  1.0  0.6   0:10.84 nginx
15686 php-fpm   20   0  995m  20m  13m S  0.7  0.3   0:00.39 php-fpm
```
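In this older (procps 3.2-style) `top` layout, the "used" figure on the `Mem:` line includes kernel buffers and the page cache, which is printed as "cached" on the `Swap:` line. A rough sketch, assuming those semantics, separates the memory actually held by processes from reclaimable cache:

```python
# Values copied from the "Mem:" and "Swap:" lines of the top -M output, in MB.
total_mb = 7770.230
used_mb = 1323.508
free_mb = 6446.723
buffers_mb = 30.242
cached_mb = 312.996  # shown on the Swap line, but it is page cache held in RAM

# Sanity check: total should equal used + free (allowing for display rounding).
assert abs(total_mb - (used_mb + free_mb)) < 0.01

# Memory held by processes, excluding reclaimable buffers and page cache.
app_used_mb = used_mb - buffers_mb - cached_mb

pct_used = 100 * used_mb / total_mb
pct_app = 100 * app_used_mb / total_mb

print(f"used incl. cache: {used_mb:.1f} MB ({pct_used:.1f}%)")
print(f"used by processes: {app_used_mb:.1f} MB ({pct_app:.1f}%)")
```

Under that assumption, roughly 980 MB (about 12.6% of RAM) is in use by processes while serving the siege load, and swap is untouched.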