Max Taxable

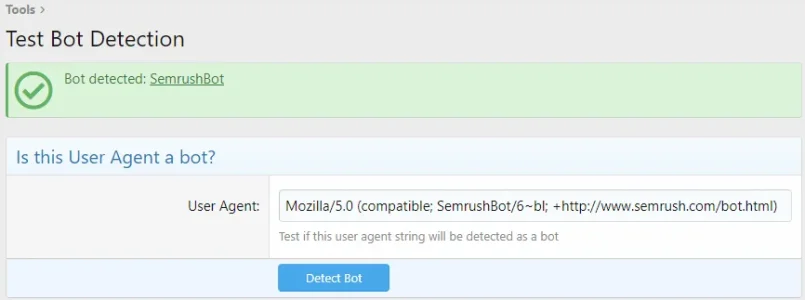

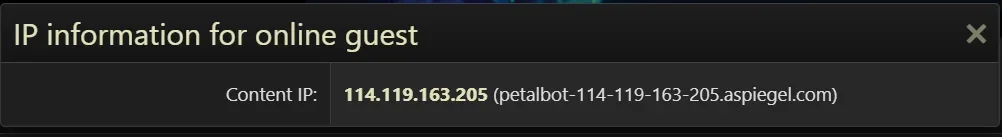

Yeah, I just... pictured a hundred guys copying the .txt files into their robots.txt, thinking that'll work. robots.txt is only honored by crawlers that choose to respect it. The lists can be used to block by user agent at the web server instead. Those two places are just good sources of user-agent info.
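For anyone wondering what "block by user agent at the web server" looks like, here's a minimal sketch as Python WSGI middleware. It assumes a plain-text list with one user-agent substring per line, with blank lines and `#` comments ignored. The file format, the case-insensitive substring matching, and the names `load_blocklist`, `is_blocked` and `UserAgentBlocker` are all my own assumptions for illustration, not anything from those lists. In practice you'd more likely do the same check in your web server's config.

```python
from __future__ import annotations

from typing import Callable, Iterable


def load_blocklist(lines: Iterable[str]) -> list[str]:
    """Parse a .txt list (assumed format): one user-agent substring per line,
    skipping blank lines and '#' comments. Entries are lowercased."""
    patterns = []
    for line in lines:
        entry = line.strip()
        if entry and not entry.startswith("#"):
            patterns.append(entry.lower())
    return patterns


def is_blocked(user_agent: str, patterns: list[str]) -> bool:
    """Case-insensitive substring match, so 'SomeBot/1.0' and 'SomeBot/2.3' both hit."""
    ua = user_agent.lower()
    return any(p in ua for p in patterns)


class UserAgentBlocker:
    """WSGI middleware: answers 403 when the User-Agent header matches the list,
    otherwise passes the request through to the wrapped app."""

    def __init__(self, app: Callable, patterns: list[str]) -> None:
        self.app = app
        self.patterns = patterns

    def __call__(self, environ: dict, start_response: Callable):
        ua = environ.get("HTTP_USER_AGENT", "")
        if is_blocked(ua, self.patterns):
            body = b"Forbidden\n"
            start_response("403 Forbidden", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        return self.app(environ, start_response)


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    def hello_app(environ, start_response):
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [b"hello\n"]

    # "ExampleBot" is a hypothetical entry; load your real list from its .txt file.
    app = UserAgentBlocker(hello_app, load_blocklist(["# sample", "ExampleBot"]))
    make_server("127.0.0.1", 8000, app).serve_forever()
```

Substring matching is used because bot user agents usually embed a name plus a version that changes. Keep in mind the header is whatever the client sends, so this only stops bots that identify themselves honestly.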