Known Bots 6.1.1

- Thread starter Sim

Sim

Well-known member

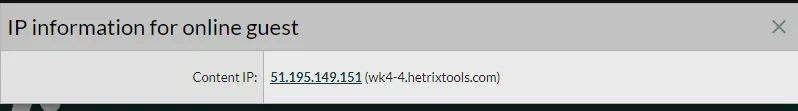

Anyone else having issues with Hetrix Monitoring bot not being detected?

HetrixTools was one of about 100 or so bots which were originally on the list but hadn't been seen in a long time, so got archived (the search key gets archived, but the bot remains listed).

Any of these bots that make an appearance again get added back - Hetrix should start being detected again shortly now that it's made an appearance.

TMC

Active member

Just wanted to confirm that it has now started detecting hetrix. Thanks for the add on!

Sim

Well-known member

Perhaps it was a coincidence ...

Today I updated this add-on. Some time later my server was no longer reachable as normal, and later still it was down completely.

Now we found out that there are just too many bots. Could it be that there is a connection?

What do the log files say?

Any server error logs?

What do the web server logs say during the period while the server was unavailable?
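One way to answer that question from the access log is to count requests per User-Agent for the affected period. The following is only a minimal sketch, assuming the server writes the common "combined" log format; `count_agents`, `LINE_RE` and the sample lines are made up for illustration and are not part of the add-on.

```python
import re
from collections import Counter

# Combined log format ends with: "request" status bytes "referer" "user-agent"
LINE_RE = re.compile(r'"[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"$')

def count_agents(lines):
    """Count requests per User-Agent string; lines that do not parse are skipped."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line.strip())
        if match:
            counts[match.group("agent")] += 1
    return counts

# Invented sample lines (documentation IP ranges, simplified User-Agent strings).
SAMPLE_LINES = [
    '203.0.113.5 - - [01/Jan/2024:10:00:00 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Bytespider)"',
    '203.0.113.6 - - [01/Jan/2024:10:00:01 +0000] "GET /threads/1/ HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; Bytespider)"',
    '198.51.100.7 - - [01/Jan/2024:10:00:02 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]

for agent, hits in count_agents(SAMPLE_LINES).most_common():
    print(hits, agent)
```

On a real server the lines would come from the access log file instead of `SAMPLE_LINES`; the heaviest agents at the top of the list are the first candidates to look at.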

I have no idea about all this, but here are some thoughts; please correct me where I am wrong.

1. There are several hundred bots on my site (according to your add-on). I don't need many of them, because I don't care about visitors from outside Europe. robots.txt may help, or it may not.

2. With this many bots, might it be better to disallow all of them and allow only a few? Is there a shortcut for Google, Bing and the few other bots I really should have? I get 80% of my visitors from Google and 15% from direct URLs. Who cares about the rest? Why should I have Yandex, China, India or whatever bots?

4. All these bots are counted as visitors; maybe Xon's add-on helps.

5. If your add-on counts them, we have more queries, so maybe I should use it only some of the time, not all the time.

6. I have read that for some bots we need to use .htaccess, but I don't know which information on the web is correct and helpful. Things change so fast.

Something like?

Code:

RewriteCond %{HTTP_USER_AGENT} SemrushBot [OR]

RewriteCond %{HTTP_USER_AGENT} AhrefsBot

RewriteRule .* - [R=429]

7. Is there a list of really bad spamming bots that nobody needs? IP lists to use?

8. I have seen Crawl-delay for robots.txt and also the Search Console setting to change the crawl rate, but which values should I use?

9. robots.txt may help tomorrow, but what do I do today?
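The "disallow all, allow some" idea from point 2 can be tried out offline before it goes live. Below is a minimal sketch using Python's standard `urllib.robotparser`; the robots.txt content, the `can_crawl` helper and the example.com URL are assumptions for illustration, and the check only models crawlers that choose to honor robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical allow-list policy: Googlebot and Bingbot may crawl everything,
# every other compliant crawler is asked to stay out.
ROBOTS_TXT = """\
User-agent: Googlebot
User-agent: Bingbot
Disallow:

User-agent: *
Disallow: /
"""

def can_crawl(user_agent, url="https://example.com/threads/1/"):
    """Return True if the policy above lets this user agent fetch the URL."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)

for agent in ("Googlebot", "Bingbot", "AhrefsBot", "SemrushBot"):
    print(agent, can_crawl(agent))
```

An empty `Disallow:` means "nothing is disallowed" for the named bots, while the `*` group covers everyone else. Bots that ignore robots.txt are unaffected by either group and need a server-side or firewall rule instead.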

Found this

Code:

User-agent: AhrefsBot

User-agent: AhrefsSiteAudit

User-agent: adbeat_bot

User-agent: Alexibot

User-agent: AppEngine

User-agent: Aqua_Products

User-agent: archive.org_bot

User-agent: archive

User-agent: asterias

User-agent: b2w/0.1

User-agent: BackDoorBot/1.0

User-agent: BecomeBot

User-agent: BlekkoBot

User-agent: Blexbot

User-agent: BlowFish/1.0

User-agent: Bookmark search tool

User-agent: BotALot

User-agent: BuiltBotTough

User-agent: Bullseye/1.0

User-agent: BunnySlippers

User-agent: CCBot

User-agent: CheeseBot

User-agent: CherryPicker

User-agent: CherryPickerElite/1.0

User-agent: CherryPickerSE/1.0

User-agent: chroot

User-agent: Copernic

User-agent: CopyRightCheck

User-agent: cosmos

User-agent: Crescent

User-agent: Crescent Internet ToolPak HTTP OLE Control v.1.0

User-agent: DittoSpyder

User-agent: dotbot

User-agent: dumbot

User-agent: EmailCollector

User-agent: EmailSiphon

User-agent: EmailWolf

User-agent: Enterprise_Search

User-agent: Enterprise_Search/1.0

User-agent: EroCrawler

User-agent: es

User-agent: exabot

User-agent: ExtractorPro

User-agent: FairAd Client

User-agent: Flaming AttackBot

User-agent: Foobot

User-agent: Gaisbot

User-agent: GetRight/4.2

User-agent: gigabot

User-agent: grub

User-agent: grub-client

User-agent: Go-http-client

User-agent: Harvest/1.5

User-agent: Hatena Antenna

User-agent: hloader

User-agent: http://www.SearchEngineWorld.com bot

User-agent: http://www.WebmasterWorld.com bot

User-agent: httplib

User-agent: humanlinks

User-agent: ia_archiver

User-agent: ia_archiver/1.6

User-agent: InfoNaviRobot

User-agent: Iron33/1.0.2

User-agent: JamesBOT

User-agent: JennyBot

User-agent: Jetbot

User-agent: Jetbot/1.0

User-agent: Jorgee

User-agent: Kenjin Spider

User-agent: Keyword Density/0.9

User-agent: larbin

User-agent: LexiBot

User-agent: libWeb/clsHTTP

User-agent: LinkextractorPro

User-agent: LinkpadBot

User-agent: LinkScan/8.1a Unix

User-agent: LinkWalker

User-agent: LNSpiderguy

User-agent: looksmart

User-agent: lwp-trivial

User-agent: lwp-trivial/1.34

User-agent: Mata Hari

User-agent: Megalodon

User-agent: Microsoft URL Control

User-agent: Microsoft URL Control - 5.01.4511

User-agent: Microsoft URL Control - 6.00.8169

User-agent: MIIxpc

User-agent: MIIxpc/4.2

User-agent: Mister PiX

User-agent: MJ12bot

User-agent: moget

User-agent: moget/2.1

User-agent: mozilla

User-agent: Mozilla

User-agent: mozilla/3

User-agent: mozilla/4

User-agent: Mozilla/4.0 (compatible; BullsEye; Windows 95)

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows 2000)

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows 95)

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows 98)

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows NT)

User-agent: Mozilla/4.0 (compatible; MSIE 4.0; Windows XP)

User-agent: mozilla/5

User-agent: MSIECrawler

User-agent: naver

User-agent: NerdyBot

User-agent: NetAnts

User-agent: NetMechanic

User-agent: NICErsPRO

User-agent: Nutch

User-agent: Offline Explorer

User-agent: Openbot

User-agent: Openfind

User-agent: Openfind data gathere

User-agent: Oracle Ultra Search

User-agent: PerMan

User-agent: ProPowerBot/2.14

User-agent: ProWebWalker

User-agent: psbot

User-agent: Python-urllib

User-agent: QueryN Metasearch

User-agent: Radiation Retriever 1.1

User-agent: RepoMonkey

User-agent: RepoMonkey Bait & Tackle/v1.01

User-agent: RMA

User-agent: rogerbot

User-agent: scooter

User-agent: Screaming Frog SEO Spider

User-agent: searchpreview

User-agent: SEMrushBot

User-agent: SemrushBot

User-agent: SemrushBot-SA

User-agent: SEOkicks-Robot

User-agent: SiteSnagger

User-agent: sootle

User-agent: SpankBot

User-agent: spanner

User-agent: spbot

User-agent: Stanford

User-agent: Stanford Comp Sci

User-agent: Stanford CompClub

User-agent: Stanford CompSciClub

User-agent: Stanford Spiderboys

User-agent: SurveyBot

User-agent: SurveyBot_IgnoreIP

User-agent: suzuran

User-agent: Szukacz/1.4

User-agent: Szukacz/1.4

User-agent: Teleport

User-agent: TeleportPro

User-agent: Telesoft

User-agent: Teoma

User-agent: The Intraformant

User-agent: TheNomad

User-agent: toCrawl/UrlDispatcher

User-agent: True_Robot

User-agent: True_Robot/1.0

User-agent: turingos

User-agent: Typhoeus

User-agent: URL Control

User-agent: URL_Spider_Pro

User-agent: URLy Warning

User-agent: VCI

User-agent: VCI WebViewer VCI WebViewer Win32

User-agent: Web Image Collector

User-agent: WebAuto

User-agent: WebBandit

User-agent: WebBandit/3.50

User-agent: WebCopier

User-agent: WebEnhancer

User-agent: WebmasterWorld Extractor

User-agent: WebmasterWorldForumBot

User-agent: WebSauger

User-agent: Website Quester

User-agent: Webster Pro

User-agent: WebStripper

User-agent: WebVac

User-agent: WebZip

User-agent: WebZip/4.0

User-agent: Wget

User-agent: Wget/1.5.3

User-agent: Wget/1.6

User-agent: WWW-Collector-E

User-agent: Xenu's

User-agent: Xenu's Link Sleuth 1.1c

User-agent: Zeus

User-agent: Zeus 32297 Webster Pro V2.9 Win32

User-agent: Zeus Link Scout

Disallow: /

Some of them probably don't care about robots.txt, and maybe some of them I actually want?

Maybe some of them are big fish that should be killed via .htaccess? But which ones?

Maybe some of them are big fish that robots.txt already takes care of.

Chromaniac

Well-known member

In my case it was Bytedance/Bytespider that was killing my server. I noticed the activity using this add-on and blocked them through Cloudflare, as they do not respect the robots.txt file.

I hope this helps?

Code:

RewriteCond %{HTTP_USER_AGENT} ^.(Bytedance|Bytespider).$ [NC]

RewriteRule .* - [F,L]

I never had such issues before at my usual hosting provider, but this customer is in another country with a different provider, and the server probably just has too little RAM.

The biggest aggressor, by the way, is Apple!

Chromaniac

Well-known member

Yup. Applebot was also pretty much everywhere in the bot list. Since I don't really see any point in their indexing, I blocked it as well, though it might be useful for US/Europe-based sites. I mean, they might or might not launch a proper search engine in the future. For now, I believe the index is used for results shown through Siri and other integrated features. I do have a pretty big robots.txt file, plus a bunch of spiders blocked through the Cloudflare firewall. PetalBot is another one I saw no point in.

Sim

Well-known member

the server probably just has too little RAM

Not enough RAM combined with a poorly configured MySQL is the #1 cause of server outages in my experience. If you check the logs, you'll probably find that the OOM killer is regularly killing off MySQL, forcing it to restart. The server is also likely swapping a lot: check disk IO activity, it's probably far too high.

Best thing you can do for performance is to allocate sufficient RAM and make sure MySQL isn't misconfigured to use too much of it.

Of course, if you're on shared hosting - all bets are off, it could be a lot of different causes.
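To check the OOM suspicion, the kernel log can be scanned for out-of-memory kills. This is a minimal sketch, assuming a Linux kernel log whose messages look like "Out of memory: Killed process <pid> (<name>)" (older kernels write "Kill process"); the exact wording varies between kernel versions, and `oom_victims` and the sample lines are invented for illustration.

```python
import re
from collections import Counter

# Matches both "Killed process" (newer kernels) and "Kill process" (older ones).
OOM_RE = re.compile(r"Out of memory: Kill(?:ed)? process (\d+) \(([^)]+)\)")

def oom_victims(lines):
    """Count how often each process name was killed by the OOM killer."""
    victims = Counter()
    for line in lines:
        match = OOM_RE.search(line)
        if match:
            victims[match.group(2)] += 1
    return victims

# Invented sample lines; real ones would come from the kernel or system log.
SAMPLE_LOG = [
    "Jan  1 03:12:44 host kernel: Out of memory: Killed process 1234 (mysqld) total-vm:2097152kB, anon-rss:943718kB",
    "Jan  1 03:12:45 host systemd[1]: mysql.service: Main process exited, code=killed, status=9/KILL",
    "Jan  2 04:01:10 host kernel: Out of memory: Killed process 2345 (mysqld) total-vm:2097152kB, anon-rss:950000kB",
]

print(oom_victims(SAMPLE_LOG))
```

If `mysqld` shows up repeatedly, that would point at memory rather than bots as the immediate cause of the outage, although heavy bot traffic can still be what pushes memory use over the edge.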

As I wrote, this is all new to me; I have never had any issues in the last ten or more years.

I have enough things to do, so I am furious about this sh*t.

But after looking around, this seems to be a big, big topic; there are many websites, lists and docs about it.

Maybe it is better to just change servers. That saves my nerves and my time.

I try now:

Code:

RewriteCond %{HTTP_USER_AGENT} ^.(Bytedance|Bytespider).$ [NC,OR]

RewriteCond %{HTTP_USER_AGENT} bot[\s_+:,\.\;\/\\\-] [NC,OR]

RewriteCond %{HTTP_USER_AGENT} SemrushBot [OR]

RewriteCond %{HTTP_USER_AGENT} AhrefsBot

RewriteRule .* - [F,L]

I don't know all these details.

It begins with ".", then Bytedance or Bytespider, so I could add |SemrushBot|AhrefsBot.

NC = ignore upper/lower case

Code:

RewriteCond %{HTTP_USER_AGENT} ^.(Bytedance|Bytespider|AhrefsBot|SemrushBot).$ [NC,OR]

RewriteCond %{HTTP_USER_AGENT} bot[\s_+:,\.\;\/\\\-] [NC]

RewriteRule .* - [F,L]

But why ^. and .$? Does that mean

it begins with "." = something?

it ends with "." = something?

Does it also mean something or nothing?

I guess it must be

Code:

^.?(name1|name2).?$

Other examples use

RewriteCond %{HTTP_USER_AGENT} ^Bytedance [NC,OR]

RewriteCond %{HTTP_USER_AGENT} ^Bytespider [NC,OR]

RewriteCond %{HTTP_USER_AGENT} ^AhrefsBot [NC,OR]

RewriteCond %{HTTP_USER_AGENT} ^SemrushBot [NC,OR]

...
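On the regex question: `.` stands for exactly one character of any kind, `.?` for zero or one character, and `.*` for any number of characters including none. A pattern without `^` and `$` already matches anywhere inside the User-Agent string, and `^.*(name1|name2).*$` matches the same strings as the bare `(name1|name2)`. The sketch below replays the patterns from this thread with Python's `re` module, which is assumed here to behave like Apache's regex engine for patterns this simple; `re.IGNORECASE` stands in for `[NC]`, and the User-Agent strings are simplified samples rather than authoritative values.

```python
import re

# Simplified sample User-Agent strings (illustrative, not exhaustive).
BYTESPIDER_UA = "Mozilla/5.0 (compatible; Bytespider; spider-feedback@bytedance.com)"
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Patterns taken from the posts above.
ANCHORED_SINGLE_DOT = r"^.(Bytedance|Bytespider).$"
STARTS_WITH = r"^Bytespider"
UNANCHORED = r"(Bytedance|Bytespider)"
GENERIC_BOT = r"bot[\s_+:,\.\;\/\\\-]"

def would_block(pattern, user_agent):
    """True if a RewriteCond with this pattern and [NC] would match the agent."""
    return re.search(pattern, user_agent, re.IGNORECASE) is not None

print("anchored, single dots:", would_block(ANCHORED_SINGLE_DOT, BYTESPIDER_UA))
print("starts with name:     ", would_block(STARTS_WITH, BYTESPIDER_UA))
print("unanchored:           ", would_block(UNANCHORED, BYTESPIDER_UA))
print("generic 'bot' rule on Googlebot:", would_block(GENERIC_BOT, GOOGLEBOT_UA))
```

With these samples, only the unanchored pattern catches Bytespider, because the bot name sits in the middle of the string. The generic `bot` rule also catches Googlebot, which is probably not wanted on a site that gets most of its visitors from Google.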

Mr. Jinx

Well-known member

Blocking bots sounds like a fight you will never win. There are so many of them, and the situation can change on a daily basis.

Instead, I would rather investigate if there are better hosting options and add some extra protection like free Cloudflare which makes fighting against bots a lot easier.

What can I say: this client booked with a large hosting provider, and somehow I have the feeling they are playing him to make him buy a bigger server.

Because everything was fine for four weeks, and four weeks is exactly how long you can test these servers. And you have to commit to this provider for one, two or three years if you want a better price. Now guess for how long this server has been rented AND paid!?

But these are probably just coincidences (is that the right word?).

If robots.txt and .htaccess work, I am OK with checking the logs once a week (or when things slow down).

Free Cloudflare? I will try to find and understand it ... thank you.

It seems there are hosting providers who deal with bots, and others who don't.

On one server I have never had any problems with bots in the last nine years; it is a big one and, compared to the prices I have found in the last few days, a very affordable one.

Cloudflare was a nice tip, thank you for that.
