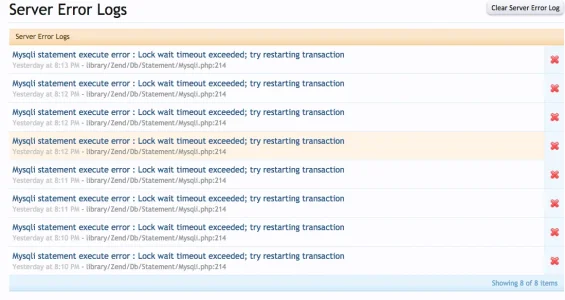

Error Info

Zend_Db_Statement_Mysqli_Exception: Mysqli statement execute error : Lock wait timeout exceeded; try restarting transaction - library/Zend/Db/Statement/Mysqli.php:214

Generated By:

truebluefan, 6 minutes ago

Stack Trace

#0 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/Zend/Db/Statement.php(297): Zend_Db_Statement_Mysqli->_execute(Array)

#1 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/Zend/Db/Adapter/Abstract.php(479): Zend_Db_Statement->execute(Array)

#2 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter/DiscussionMessage.php(928): Zend_Db_Adapter_Abstract->query('?????UPDATE xf_...', 22679)

#3 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter/DiscussionMessage/Post.php(200): XenForo_DataWriter_DiscussionMessage->_updateUserMessageCount(false)

#4 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter/DiscussionMessage.php(560): XenForo_DataWriter_DiscussionMessage_Post->_updateUserMessageCount()

#5 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter.php(1409): XenForo_DataWriter_DiscussionMessage->_postSave()

#6 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter/Discussion.php(477): XenForo_DataWriter->save()

#7 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter/Discussion.php(426): XenForo_DataWriter_Discussion->_saveFirstMessageDw()

#8 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/DataWriter.php(1409): XenForo_DataWriter_Discussion->_postSave()

#9 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/ControllerPublic/Forum.php(745): XenForo_DataWriter->save()

#10 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/FrontController.php(347): XenForo_ControllerPublic_Forum->actionAddThread()

#11 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/library/XenForo/FrontController.php(134): XenForo_FrontController->dispatch(Object(XenForo_RouteMatch))

#12 /home/mschwartz/github/mschwartz/sportstwo-xenforo/htdocs/index.php(13): XenForo_FrontController->run()

#13 {main}

Request State

array(3) {

["url"] => string(62) "http://www.sportstwo.com/forums/calendar-excess.610/add-thread"

["_GET"] => array(0) {

}

["_POST"] => array(10) {

["title"] => string(41) "Formula One Grand Prix of Malaysia Mar 29"

["message_html"] => string(0) ""

["message"] => string(41) "4:00 AM ET

Sepang International Circuit"

["_xfRelativeResolver"] => string(65) "http://www.sportstwo.com/forums/calendar-excess.610/create-thread"

["attachment_hash"] => string(32) "2ec48f061e32c0f9ea3fe423a59354e4"

["watch_thread_state"] => string(1) "1"

["discussion_open"] => string(1) "1"

["_set"] => array(2) {

["discussion_open"] => string(1) "1"

["sticky"] => string(1) "1"

}

["poll"] => array(8) {

["question"] => string(0) ""

["responses"] => array(10) {

[0] => string(0) ""

[1] => string(0) ""

[2] => string(0) ""

[3] => string(0) ""

[4] => string(0) ""

[5] => string(0) ""

[6] => string(0) ""

[7] => string(0) ""

[8] => string(0) ""

[9] => string(0) ""

}

["max_votes_type"] => string(6) "single"

["max_votes_value"] => string(1) "2"

["change_vote"] => string(1) "1"

["view_results_unvoted"] => string(1) "1"

["close_length"] => string(1) "7"

["close_units"] => string(4) "days"

}

["_xfToken"] => string(8) "********"

}

}