Sim

Well-known member

I'm looking at whether further optimisations of my database server are required.

I host 8 XenForo sites (9 actually if you include the staging server) plus about 20 WordPress sites and a few other PHP based sites (HelpDesk and various other tools/utilities).

I have multiple web servers running nginx connecting to a standalone MySQL server.

These are all running on Linode VPS servers of various size. The MySQL server has 8GB of RAM and I run mysqltuner.pl to optimise configuration.

I'm generally happy with the performance of the database server, but I'm wondering if there are further optimisations I could apply.

The key approach I've used to this point for database server performance has been to allocate as much RAM as I can to InnoDB buffers, while still maintaining server stability. On this server that means 4.5G of RAM has been allocated to InnoDB buffers (4.8G total buffers + 200 threads @ 1.1M per thread = 5G total allocated to MySQL).

I tried to go higher, but the sever became unstable.

However, the total size of my databases has grown to the point where I'm no longer able to retain all of the data in memory - although I'm not sure whether that's actually having a significant impact given there would be large amounts of data which simply never get read and so isn't really required to be held in the buffers.

My main challenge is that the next price point up for a Linode VPS is 2x the price for only 1.5x the amount of RAM (12GB). I already have a 2nd separate 8GB MySQL server running for my largest site (although that site is fairly unique in that it has been archived - no posting allowed, so database traffic is 99.9%+ read-only).

I was considering moving to a "high memory" instance @ 16GB RAM, but it only has 1 CPU core. I don't seem to see much in the way of CPU usage - my 8GB Linode has 4 cores, but rarely gets above 30% CPU usage anyway. I'm unsure if moving to a single core machine with more RAM will help or hinder performance.

The other factor I was considering was moving to a read/write setup with two database servers, one for reads and the other for writes - once XF2 is available. Again, I'm not sure if this is going to significantly impact on performance. Would this also offer redundancy (can the read slave be automatically upgraded to master in a XenForo setup if the master went offline) ? Right now I need to take the database server (and hence the websites) offline for a full system backup occasionally to ensure data integrity - I'd like to be able to do backups without taking sites offline.

Given the price/performance ratio, I'm thinking it may be more cost effective to deploy multiple 8GB database servers rather than trying to consolidate on one larger server? But then I won't get the benefit of the read/write setup because it becomes too expensive to have 4 or 6 servers.

I'm also considering splitting the servers based on workload - XenForo databases on one server and WordPress databases on another, so each can be optimised more specifically for the workload they experience. Again, I'm unsure if this will have a significant impact.

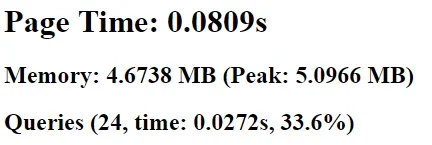

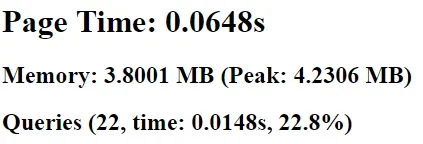

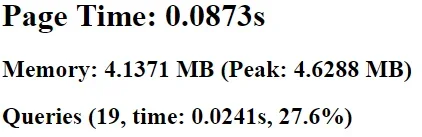

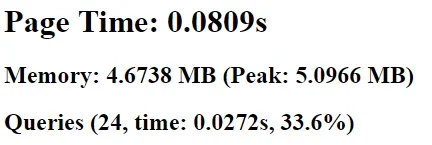

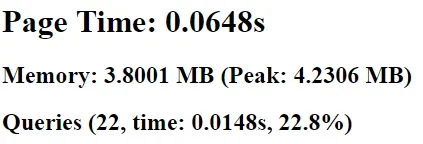

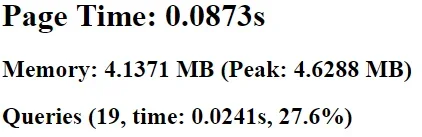

These are the kind of response times I'm currently seeing for forum home page and new thread searches:

... given how little these contribute to the overall page load time (350ms on average for Google crawler to download the HTML), I'm not sure if any more optimisations would be worth it at this point?

I'm not sure I actually have a problem, but either way, I would appreciate some guidance on possible growth strategies from here as my sites get busier and database sizes get larger:

The cost of having the database server go down and require rebuilding or losing up to 24 hours worth of data isn't quite at the level where it compares to the cost of maintaining a highly available setup (although there are reputational costs as well, which are difficult to quantify).

But either way, I can foresee a time in the not-too-distant future where the equation does start to tip towards the cost being justified, so I'd like to start thinking about how to architect things in preparation for that time.

I host 8 XenForo sites (9 actually if you include the staging server) plus about 20 WordPress sites and a few other PHP based sites (HelpDesk and various other tools/utilities).

I have multiple web servers running nginx connecting to a standalone MySQL server.

These are all running on Linode VPS servers of various size. The MySQL server has 8GB of RAM and I run mysqltuner.pl to optimise configuration.

I'm generally happy with the performance of the database server, but I'm wondering if there are further optimisations I could apply.

The key approach I've used to this point for database server performance has been to allocate as much RAM as I can to InnoDB buffers, while still maintaining server stability. On this server that means 4.5G of RAM has been allocated to InnoDB buffers (4.8G total buffers + 200 threads @ 1.1M per thread = 5G total allocated to MySQL).

I tried to go higher, but the sever became unstable.

However, the total size of my databases has grown to the point where I'm no longer able to retain all of the data in memory - although I'm not sure whether that's actually having a significant impact given there would be large amounts of data which simply never get read and so isn't really required to be held in the buffers.

My main challenge is that the next price point up for a Linode VPS is 2x the price for only 1.5x the amount of RAM (12GB). I already have a 2nd separate 8GB MySQL server running for my largest site (although that site is fairly unique in that it has been archived - no posting allowed, so database traffic is 99.9%+ read-only).

I was considering moving to a "high memory" instance @ 16GB RAM, but it only has 1 CPU core. I don't seem to see much in the way of CPU usage - my 8GB Linode has 4 cores, but rarely gets above 30% CPU usage anyway. I'm unsure if moving to a single core machine with more RAM will help or hinder performance.

The other factor I was considering was moving to a read/write setup with two database servers, one for reads and the other for writes - once XF2 is available. Again, I'm not sure if this is going to significantly impact on performance. Would this also offer redundancy (can the read slave be automatically upgraded to master in a XenForo setup if the master went offline) ? Right now I need to take the database server (and hence the websites) offline for a full system backup occasionally to ensure data integrity - I'd like to be able to do backups without taking sites offline.

Given the price/performance ratio, I'm thinking it may be more cost effective to deploy multiple 8GB database servers rather than trying to consolidate on one larger server? But then I won't get the benefit of the read/write setup because it becomes too expensive to have 4 or 6 servers.

I'm also considering splitting the servers based on workload - XenForo databases on one server and WordPress databases on another, so each can be optimised more specifically for the workload they experience. Again, I'm unsure if this will have a significant impact.

These are the kind of response times I'm currently seeing for forum home page and new thread searches:

... given how little these contribute to the overall page load time (350ms on average for Google crawler to download the HTML), I'm not sure if any more optimisations would be worth it at this point?

I'm not sure I actually have a problem, but either way, I would appreciate some guidance on possible growth strategies from here as my sites get busier and database sizes get larger:

- split busy sites onto multiple database servers first before worrying about scaling individual servers? (possibly cheaper overall, but much more work to maintain)

- work on economy of scale and go for a bigger server (more RAM) - scale up (more expensive, but less work)

- scale out with separate read/write servers (but what size?) (is the benefit worth the cost?)

- would love some redundancy so I can backup server without taking sites offline - but how to structure that? (again, is the benefit worth the cost?)

- some combination of the above?

The cost of having the database server go down and require rebuilding or losing up to 24 hours worth of data isn't quite at the level where it compares to the cost of maintaining a highly available setup (although there are reputational costs as well, which are difficult to quantify).

But either way, I can foresee a time in the not-too-distant future where the equation does start to tip towards the cost being justified, so I'd like to start thinking about how to architect things in preparation for that time.