Triops

Well-known member

Thank you again!

We did not have 200GB on the 5th. At that time we had only 20GB; we increased it to 200GB on 11-11.

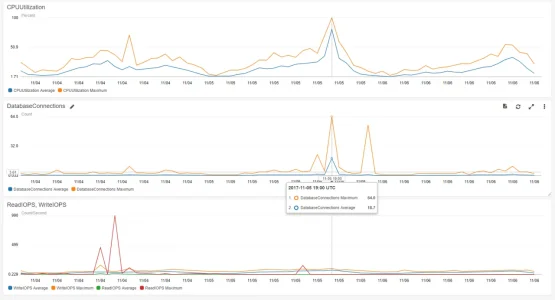

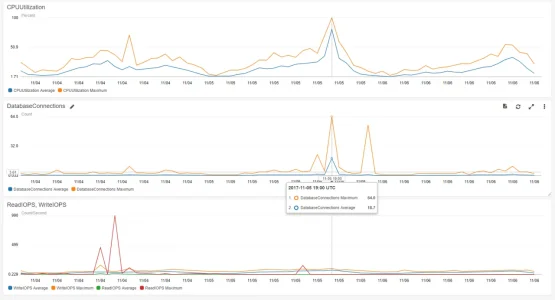

Yes, there is the IOPS peak, but that was on 11-04, the day before, when we switched the RDS instance from t2.small (not t2.micro as said above) to m4.large.

The small red read IOPS peak on the 5th is earlier, at 3pm UTC (around 150 read IOPS). I don't remember exactly; maybe I took an SQL dump. I don't think we really hit the IOPS limit, not even with 20GB at 7-8pm on the 5th.
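Whether 150 read IOPS on a 20GB volume counts as "hitting the limit" depends on the storage type. Assuming the RDS storage is General Purpose SSD (gp2), which is an assumption since the storage type isn't stated above, the sustained baseline is 3 IOPS per GiB with a floor of 100 IOPS, and smaller volumes can burst to 3000 IOPS while burst credits last. A quick sketch of that arithmetic (the helper names are hypothetical):

```python
# Hypothetical helpers: assumes the RDS volume is General Purpose SSD (gp2).
GP2_IOPS_PER_GIB = 3      # gp2 baseline scales with volume size
GP2_MIN_BASELINE = 100    # floor for small volumes

def gp2_baseline_iops(size_gib: int) -> int:
    """Sustained IOPS a gp2 volume of this size delivers without burst credits."""
    return max(GP2_MIN_BASELINE, GP2_IOPS_PER_GIB * size_gib)

def needs_burst(size_gib: int, observed_iops: int) -> bool:
    """True if the observed load is above baseline, i.e. it drains burst credits."""
    return observed_iops > gp2_baseline_iops(size_gib)

for size in (20, 200):
    print(f"{size} GiB -> baseline {gp2_baseline_iops(size)} IOPS, "
          f"150 IOPS needs burst: {needs_burst(size, 150)}")
```

Under that assumption, 20GB gives a 100 IOPS baseline, so a short 150 IOPS spike would be covered by burst credits, while a sustained load above 100 IOPS would eventually drain them. At 200GB the baseline is 600 IOPS.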

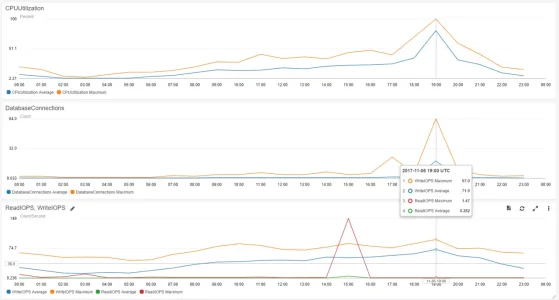

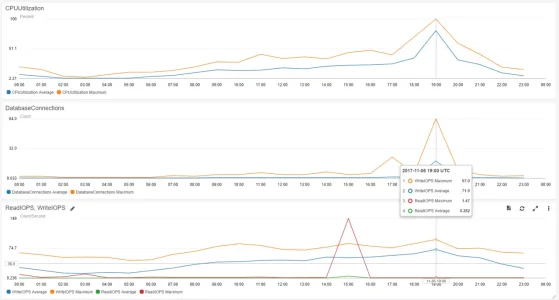

Since then the CPU has often been heavily loaded, even when things are not really critical. But it becomes laggy at around 8pm UTC almost every day, so we are approaching the limit even with 200GB. That's why I feel "the RDS is too small". I somehow fear that tuning parameters and the like won't significantly increase performance?!

Might the DB connections be the problem? But a maximum of 64 connections shouldn't kill the server, should it?
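To put 64 connections in perspective: assuming the MySQL engine with the default RDS parameter group (an assumption; the engine and parameter group aren't stated above), `max_connections` defaults to `{DBInstanceClassMemory/12582880}`. An m4.large has 8 GiB of memory, and `DBInstanceClassMemory` is somewhat less than that because RDS reserves memory for the OS, so the sketch below gives only an upper bound. The helper name is hypothetical.

```python
# Hypothetical helper: assumes MySQL on RDS with the default parameter group,
# where max_connections = {DBInstanceClassMemory/12582880}.
BYTES_PER_CONNECTION_DIVISOR = 12582880

def default_max_connections(instance_memory_bytes: int) -> int:
    """Upper bound on the default max_connections for a given memory size."""
    return instance_memory_bytes // BYTES_PER_CONNECTION_DIVISOR

m4_large_memory = 8 * 1024**3  # nominal memory of an m4.large (8 GiB)
limit = default_max_connections(m4_large_memory)
print(f"default max_connections <= {limit}; 64 connections = {64 / limit:.0%}")
```

Under those assumptions, 64 connections would be roughly a tenth of the default limit, so the connection count alone is unlikely to be the bottleneck; what those connections are doing (slow queries, locks) matters more.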

Do you run your 10k users on XenForo?