Timelord_

Member

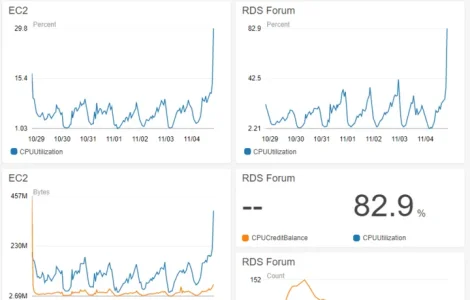

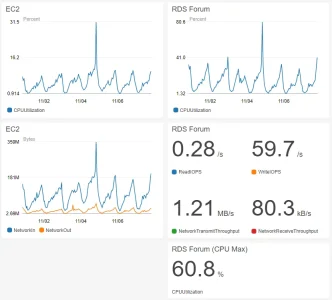

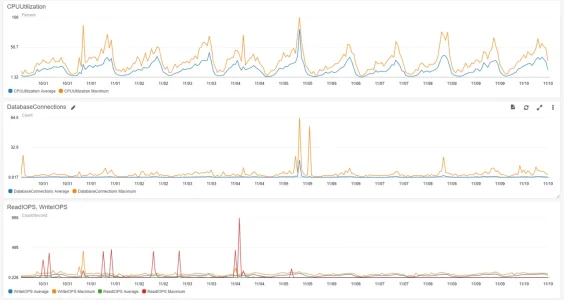

Has anyone successfully hosted a large XenForo install on EC2 across multiple instances? We are currently hosted on a single instance behind an ELB (for free SSL), but we have reached the point where we need to look at adding more EC2 instances.

We are using RDS, so we would just need to script the process of installing XenForo and copying any media to the new instances.

Any thoughts?
