So it's not going to be the "cleanest" solution because XenForo doesn't support "sub-adapters" out of the box. However, if you install my Cloudflare addon (even if you don't use Cloudflare or R2), it tweaks XenForo's abstracted filesystem a little bit to support sub-adapters (for example, you can have an abstracted filesystem adapter for just a folder). It's backward compatible, so it doesn't break anything if you don't use it that way.

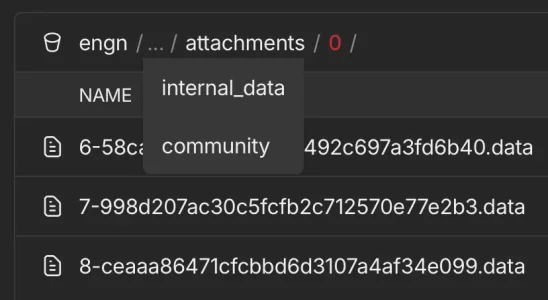

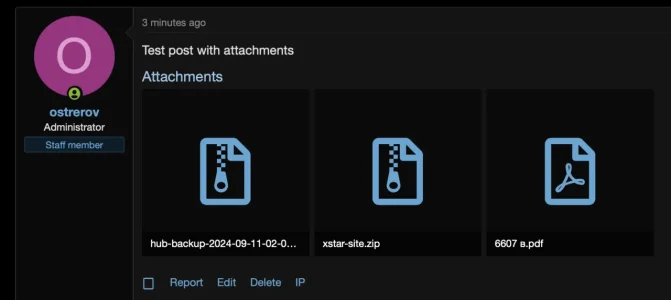

It was done for the exact reason you are running into (where you would want to have internal_data/attachments in something like S3, but not the rest of the internal_data folders).

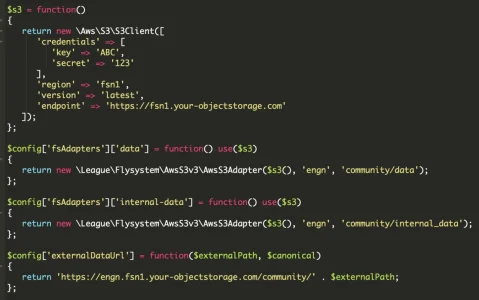

You can see a bit of the format here:

although if you really want to store those files in R2, you can via config.php edit.

I guess the code will be?

PHP:

$config['fsAdapters']['internal-data/image_cache'] = function() {
    return \DigitalPoint\Cloudflare\League\Flysystem\Adapter\R2::getAdapter('internal-data/image_cache', 'cf-bucket-name');
};

...but it should work if you change the last part of your config above to this (assuming you have the Cloudflare addon installed):

PHP:

$config['fsAdapters']['internal-data/attachments'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'bucket_name', 'internal_data');
};
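
For context, here is a rough sketch of how that might sit in a complete src/config.php fragment. It assumes the AWS SDK and the Flysystem S3 adapter are already loadable in your install, and that `$s3` is the usual closure returning an `\Aws\S3\S3Client`. The key, secret, region, and bucket name are placeholders, not values from this thread.

```php
// src/config.php (fragment). Credentials, region and bucket name are placeholders.

// Closure that builds the S3 client only when an adapter actually needs it.
$s3 = function()
{
    return new \Aws\S3\S3Client([
        'credentials' => [
            'key' => 'YOUR_ACCESS_KEY',
            'secret' => 'YOUR_SECRET_KEY',
        ],
        'region' => 'us-east-1',
        'version' => 'latest',
    ]);
};

// Sub-adapter for just the attachments folder
// (relies on the sub-adapter support the Cloudflare addon adds).
$config['fsAdapters']['internal-data/attachments'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'bucket_name', 'internal_data');
};

// No $config['fsAdapters']['internal-data'] entry is defined here, so the
// rest of internal_data should stay on the local filesystem.
```

The point of leaving out a top-level `internal-data` adapter is that only the folder with its own entry gets redirected. Test it on a copy of your site first, since existing attachments would still need to be copied into the bucket.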