Using DigitalOcean Spaces or Amazon S3 for file storage

- Thread starter Chris D

skyscraper

Member

I used Amazon on another platform, and although it was hard to tell, whenever anybody uploaded a photo my site lagged. That could have been the image resizing taking up CPU as well. I scrapped that project and decided to pick up XenForo, focusing more on content rather than trying to be another Facebook. I don't necessarily blame Amazon; I was still a newbie with the bucket service. But thanks, Rhodium, I will try Backblaze.

Chromaniac

Well-known member

Backblaze is likely to be slower than Amazon S3, in my experience.

shimspedy

Well-known member

PHP:

```php
// src/config.php: store data and internal_data in S3 instead of on local disk
$s3 = function()
{
    return new \Aws\S3\S3Client([
        'credentials' => [
            'key' => 'xxxxxxxxxxxx',
            'secret' => 'xxxxxxxxxxxxx'
        ],
        'region' => 'us-east-1',
        'version' => 'latest',
        'endpoint' => 'https://s3.us-east-1.amazonaws.com'
    ]);
};

// Public files (data/) are written to the bucket under the "data" prefix...
$config['fsAdapters']['data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'xxxxxx', 'data');
};

// ...and browsers load them from this URL
$config['externalDataUrl'] = function($externalPath, $canonical)
{
    return 'https://xxxx.s3.us-east-1.amazonaws.com/data/' . $externalPath;
};

// Private files (internal_data/) are written under the "internal_data" prefix
$config['fsAdapters']['internal-data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'xxx', 'internal_data');
};
```

Has anyone got this to work with Amazon S3? I just moved the data and internal_data folders, but my images are still broken.

shimspedy

Well-known member

Could you help out? I can't get the old data to show on my site.

Amazing, easy install. I just did it in my test environment before finally switching to XF 2.1 this weekend.

One question: I would like to put my own domain, via Cloudflare, in front of the S3 bucket so that the files from the data folder are delivered from my domain.

Is this possible?

Chromaniac

Well-known member

Yes. IIRC, you create a CNAME of your choice and point it at the bucket URL, then replace the URL in the config file to match that subdomain. It works very well, as you can then set Cloudflare to cache this path using page rules.
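For the custom-domain approach, the `externalDataUrl` closure from the config in this thread would change roughly as follows. This is a sketch assuming a hypothetical subdomain `cdn.yourdomain.tld` whose CNAME points at the bucket endpoint, with the bucket named to match that hostname (S3 requires this when a bucket is addressed through a CNAME).

```php
// Hypothetical hostname: cdn.yourdomain.tld is assumed to be a CNAME to the bucket endpoint.
$config['externalDataUrl'] = function($externalPath, $canonical)
{
    // Browsers now request public files from your own subdomain
    // instead of the *.amazonaws.com URL.
    return 'https://cdn.yourdomain.tld/data/' . $externalPath;
};
```

Only the public URL changes; the `fsAdapters` entries that write the files stay as they are.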

Chromaniac

Well-known member

Code:

```php
$config['externalDataUrl'] = function($externalPath, $canonical)
{
    return 'https://BUCKETNAME.s3.ap-southeast-1.amazonaws.com/data/' . $externalPath;
};
```

I am currently using B2 (look into it!), but IIRC this is what needs to be done.

So basically you would create a CNAME for cdn.yourdomain.tld that points to BUCKETNAME.s3.ap-southeast-1.amazonaws.com, and then replace the URL in the code above with https://cdn.yourdomain.tld/data/. (Correct the S3 datacenter part for your own region!)

Hoffi

Well-known member

For S3, you need to create a bucket whose name matches the domain the files should be accessible from.

Let's say the data folder should be accessible via cdn.whatever.tld; then the bucket also needs to be named cdn.whatever.tld. That works for me.

I just split internal data into its own bucket, and now I am going to tighten the security. I really like this.
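A sketch of this two-bucket layout, reusing the `$s3` client closure from the config posted earlier in the thread. `cdn.whatever.tld` is the public bucket named after its hostname, as described above; `xf-internal-private` is a hypothetical name for the private internal-data bucket.

```php
// Assumes $s3 is the S3Client closure already defined in src/config.php.

// Public files: bucket named after the hostname they are served from
$config['fsAdapters']['data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'cdn.whatever.tld', 'data');
};

$config['externalDataUrl'] = function($externalPath, $canonical)
{
    return 'https://cdn.whatever.tld/data/' . $externalPath;
};

// Private files: a separate, non-public bucket (hypothetical name)
$config['fsAdapters']['internal-data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'xf-internal-private', 'internal_data');
};
```

Keeping the buckets separate means that making the first one publicly readable cannot expose anything stored in the second.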

Chromaniac

Well-known member

Yup. I had to make the bucket public as well to make it work.
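Making a bucket public is commonly done with a bucket policy. The sketch below assumes the AWS SDK for PHP v3 is installed via Composer and applies a policy that allows anonymous `s3:GetObject` only under the `data/` prefix, so an `internal_data/` prefix in the same bucket stays private. `BUCKETNAME`, the autoloader path and the credentials are placeholders, and the bucket's Block Public Access settings must allow public policies for the call to succeed.

```php
<?php
// Sketch: grant public read access to data/ only.
// Assumptions: aws/aws-sdk-php v3 via Composer; BUCKETNAME and credentials are placeholders.
use Aws\S3\S3Client;

require __DIR__ . '/vendor/autoload.php';

$bucket = 'BUCKETNAME'; // hypothetical

$client = new S3Client([
    'credentials' => ['key' => 'xxxxxxxxxxxx', 'secret' => 'xxxxxxxxxxxxx'],
    'region' => 'us-east-1',
    'version' => 'latest',
]);

// Anyone may GET objects under data/; internal_data/ is not covered, so it stays private.
$policy = [
    'Version' => '2012-10-17',
    'Statement' => [[
        'Sid' => 'PublicReadForDataFolder',
        'Effect' => 'Allow',
        'Principal' => '*',
        'Action' => 's3:GetObject',
        'Resource' => "arn:aws:s3:::{$bucket}/data/*",
    ]],
];

$client->putBucketPolicy([
    'Bucket' => $bucket,
    'Policy' => json_encode($policy, JSON_UNESCAPED_SLASHES),
]);

echo "Public-read policy applied to {$bucket}/data/*\n";
```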

Hoffi

Well-known member

I used two buckets: one with my own domain for the data folder, and another for the internal data folder.

The public one is really small, but I moved 3 GB of data into the internal one.

Works like a charm now.

Can I now remove the settings for the internal data folder from my config and delete all the content from the folder, or is there something that still needs to be there? I assume I can remove everything.

Hoffi

Well-known member

You need to copy the old files to the bucket.

I can get newly uploaded files to work, but the old files such as avatars and attachments don't work.
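Copying the existing files can be done with any S3 tool; the sketch below does it in PHP with the same Flysystem adapter class the config uses. It assumes Composer's autoloader provides `aws/aws-sdk-php` v3 and `league/flysystem-aws-s3-v3` 1.x (the Flysystem 1 API); the local path, `BUCKETNAME` and credentials are placeholders. Files already present in the bucket are skipped, so it can be re-run safely.

```php
<?php
// Sketch: copy existing local data/ files into S3 so old avatars and attachments resolve.
// Assumptions: Flysystem 1.x + AWS SDK v3 via Composer; paths, bucket and keys are placeholders.
use Aws\S3\S3Client;
use League\Flysystem\Adapter\Local;
use League\Flysystem\AwsS3v3\AwsS3Adapter;
use League\Flysystem\Filesystem;

require __DIR__ . '/vendor/autoload.php';

$client = new S3Client([
    'credentials' => ['key' => 'xxxxxxxxxxxx', 'secret' => 'xxxxxxxxxxxxx'],
    'region' => 'us-east-1',
    'version' => 'latest',
]);

// Same bucket and "data" prefix as the fsAdapters['data'] entry in src/config.php
$local = new Filesystem(new Local('/path/to/xenforo/data'));
$remote = new Filesystem(new AwsS3Adapter($client, 'BUCKETNAME', 'data'));

$copied = 0;
foreach ($local->listContents('', true) as $item) {
    // Skip directories and anything already uploaded
    if ($item['type'] !== 'file' || $remote->has($item['path'])) {
        continue;
    }
    $stream = $local->readStream($item['path']);
    $remote->writeStream($item['path'], $stream);
    if (is_resource($stream)) {
        fclose($stream);
    }
    $copied++;
}

echo "Copied {$copied} file(s)\n";
```

For internal_data, the same loop would be run with the internal_data path, that bucket's name and the `internal_data` prefix.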

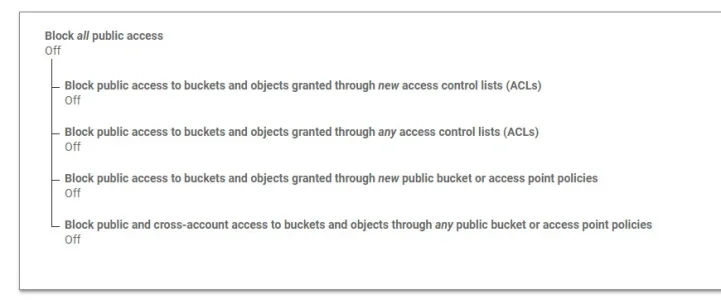

I have it working now on one of my smaller sites. Just did it last night. At first I was getting permission errors. Once I made the bucket public, it worked.

No, that's not a good idea at all.

First, we cannot guarantee that any particular add-on developer is correctly using the abstracted file system, so you may be deleting files that wouldn't be served from S3 and therefore deleting the only copies of those files.

Second, and probably more importantly, internal_data contains some files that should remain stored locally, such as code_cache, which continues to be, and is required to be, stored locally for XF to run.

It may be safe to assume that specific known XF directories can be removed, e.g. data/attachments, data/avatars and internal_data/attachments, and the same goes for any official XF add-ons.

But either way, I'd still recommend not deleting anything; instead, move the files to a completely different location first. If anything goes wrong, you can figure out which files were affected and why, and move them back.
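Following that advice, a small PHP helper can move (rather than delete) the directories named above into a backup location, leaving `internal_data/code_cache` and everything else untouched. The function name `moveToBackup` and the paths in the usage line are hypothetical; the backup root should sit on the same filesystem, since PHP's `rename()` of a directory across devices can fail.

```php
<?php
// Sketch: move (not delete) local directories now served from S3,
// so they can be moved back if anything turns out to be missing.
// Only the directories passed in are touched; code_cache is never listed.
function moveToBackup(string $xfRoot, string $backupRoot, array $relativeDirs): array
{
    $moved = [];
    foreach ($relativeDirs as $rel) {
        $src = $xfRoot . '/' . $rel;
        $dst = $backupRoot . '/' . $rel;
        if (!is_dir($src)) {
            continue; // nothing to move for this path
        }
        if (file_exists($dst)) {
            throw new RuntimeException("Backup target already exists: {$dst}");
        }
        $parent = dirname($dst);
        if (!is_dir($parent) && !mkdir($parent, 0755, true)) {
            throw new RuntimeException("Could not create {$parent}");
        }
        // Moves the whole directory in one step (same filesystem assumed)
        if (!rename($src, $dst)) {
            throw new RuntimeException("Could not move {$src} to {$dst}");
        }
        $moved[] = $rel;
    }
    return $moved;
}
```

Usage (hypothetical paths): `moveToBackup('/var/www/xenforo', '/var/www/xf-moved-files', ['data/attachments', 'data/avatars', 'internal_data/attachments']);`. If something breaks, `rename()` the affected directories back to their original location.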

sbj

Well-known member

But what is the purpose of this add-on if we cannot delete our attachment files?

The only reason to use a service like Amazon S3 is that it is much cheaper and we don't have the same capacity on our hosted servers. But if I cannot free up disk space on my server, why use this at all?

I am asking genuinely. I have been interested in this setup for a long time, but it could cause a lot of problems for forums that depend on attachments, and I am not confident enough because of that.