XF 1.5 Submitting sitemaps does not work any longer

- Thread starter fredrikse

Is the web address supposed to start with ssl://? I've never seen that before.

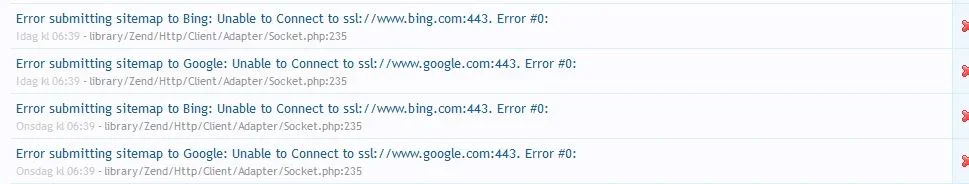

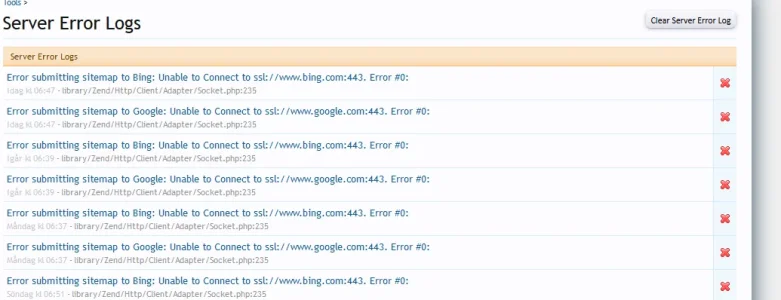

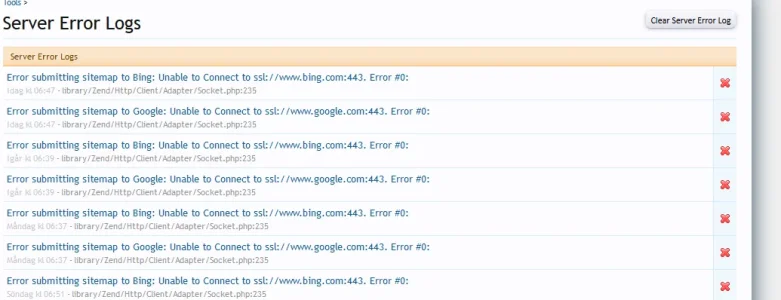

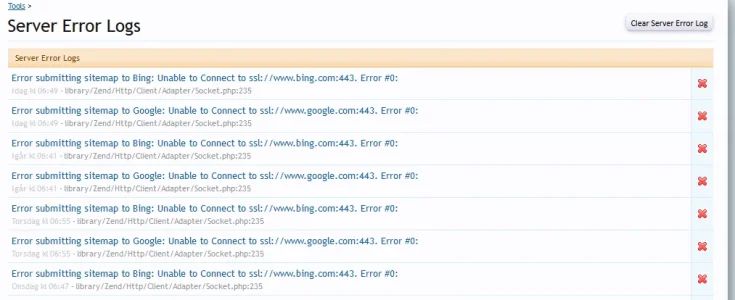

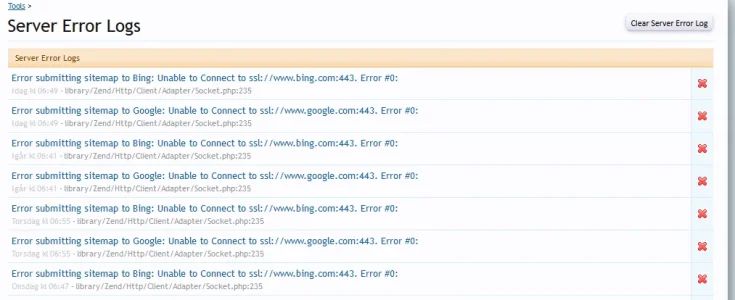

Zend_Http_Client_Adapter_Exception: Error submitting sitemap to Google: Unable to Connect to ssl://www.google.com:443. Error #0: - library/Zend/Http/Client/Adapter/Socket.php:235

Yes, that's correct.

Unfortunately, for the test to be fair, your web host would most likely need to run it from PHP itself, using sockets. It's important to run the test as the same user PHP runs as, and as a real PHP process, because restrictions can be applied per process or per user.
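
A quick way to see which SAPI and OS user the forum's PHP actually runs as is a small script requested through the web server. This is only a sketch: the helper name `phpProcessInfo` is hypothetical, it assumes PHP 7 or newer, and it can only report the user when the optional posix extension is available.

```php
<?php
// Hypothetical helper (assumes PHP 7+): report which SAPI and OS user this
// PHP process runs as. Open it in a browser on the forum's host so it runs
// under mod_php/PHP-FPM like XenForo, not under command-line PHP.
function phpProcessInfo(): array
{
    $info = [
        'sapi'        => PHP_SAPI,   // e.g. "fpm-fcgi", "apache2handler" or "cli"
        'php_version' => PHP_VERSION,
        'uid'         => null,
        'user'        => null,
    ];

    // get_current_user() is avoided on purpose: it returns the owner of the
    // script file, not the user the process runs as. The posix extension is
    // optional, so the lookup is skipped when it is missing.
    if (function_exists('posix_geteuid')) {
        $info['uid'] = posix_geteuid();
        $pw = posix_getpwuid($info['uid']);
        $info['user'] = $pw !== false ? $pw['name'] : null;
    }

    return $info;
}

header('Content-Type: text/plain');
print_r(phpProcessInfo());
```

If the `sapi` value shows "cli", the script was not run the way the forum runs, and the result says little about XenForo's environment.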

Picking up this thread again. I've been working with my service provider to try and pinpoint the error.

They ran a script and for Google it returned a 200 OK message:

PHP:

```php
<?php
// Create a cURL handle
$ch = curl_init();

// Set the URL
$url = "https://www.google.com";
echo "URL: " . $url . "<br>";
curl_setopt($ch, CURLOPT_URL, $url);

// Return the transfer as a string instead of printing it
curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

// $output contains the response body (or false on failure)
$output = curl_exec($ch);

// var_dump() prints directly and returns nothing, so call it on its own
echo "Output: <br>";
var_dump($output);

echo "CURLINFO_HTTP_CODE: " . curl_getinfo($ch, CURLINFO_HTTP_CODE);

// Close the cURL handle to free up system resources
curl_close($ch);
?>
```

But I still get these errors:

Any ideas where to go from here?

Unfortunately, that isn't the code path being triggered here. Based on the error, the request is made through PHP sockets rather than cURL. The code in question is from the Zend Framework, in library/Zend/Http/Client/Adapter/Socket.php. There is more code before this that configures things like the stream context, but this is the line that fails to connect:

Code:

```php
$this->socket = @stream_socket_client($host . ':' . $port,
    $errno,
    $errstr,
    (int) $this->config['timeout'],
    $flags,
    $context);
```

It may be down to things like the SSL certificates not being set up properly in PHP itself.
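
To reproduce that call outside XenForo, a small script requested through the web server can open the same kind of `ssl://` stream with `stream_socket_client()`. This is a sketch under assumptions: it assumes PHP 7+, the helper name `testSslSocket` is hypothetical, and the peer-verification context options and 10-second timeout are illustrative choices, not the exact configuration Zend/XenForo passes.

```php
<?php
// Sketch (assumes PHP 7+): open an SSL stream via stream_socket_client()
// with an "ssl://" address, similar to the Zend socket adapter call above.
// Context options and timeout are assumptions, not Zend's exact settings.
function testSslSocket(string $host, int $port, int $timeout = 10): array
{
    // Verify the server certificate, as a normal HTTPS client would.
    $context = stream_context_create([
        'ssl' => [
            'verify_peer'      => true,
            'verify_peer_name' => true,
        ],
    ]);

    $errno = 0;
    $errstr = '';
    error_clear_last();
    $socket = @stream_socket_client(
        'ssl://' . $host . ':' . $port,
        $errno,
        $errstr,
        $timeout,
        STREAM_CLIENT_CONNECT,
        $context
    );

    if ($socket === false) {
        // An empty "Error #0" (as in the XenForo log) gives little detail;
        // the suppressed PHP warning often holds the real reason, for
        // example a failed certificate verification.
        return [
            'ok'      => false,
            'errno'   => $errno,
            'errstr'  => $errstr,
            'warning' => error_get_last()['message'] ?? '',
        ];
    }

    fclose($socket);
    return ['ok' => true, 'errno' => 0, 'errstr' => '', 'warning' => ''];
}

header('Content-Type: text/plain');
$result = testSslSocket('www.google.com', 443);
if ($result['ok']) {
    echo "Connected to ssl://www.google.com:443\n";
} else {
    echo "Error #{$result['errno']}: {$result['errstr']}\n";
    echo "PHP warning: {$result['warning']}\n";
}
```

Unlike the cURL test, this goes through PHP's own stream/OpenSSL layer, so a failure here while cURL succeeds would point at PHP's SSL setup rather than the network.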

Hi,

At the beginning of November I switched to SSL on my XenForo website. At first glance everything was working fine, but then I started seeing more and more entries in the error log.

I kept getting errors related to submitting sitemaps to various search engines. For the past month or so I've been working with my service provider to sort this out (https://xenforo.com/community/threads/submitting-sitemaps-does-not-work-any-longer.155699/). It still hasn't been sorted out.

Today I was going to submit the sitemap manually in Google Search Console. In the overview I saw a dramatic drop in clicks and impressions. Can somebody explain to me what this means? Is it related to the switch to SSL?

When I look at the threads sitemap file itself, I see this:

Each URL starts with HTTP when it should be HTTPS. My service provider offers Let's Encrypt certificates for free, and the installation process includes an option to redirect all HTTP traffic to HTTPS.

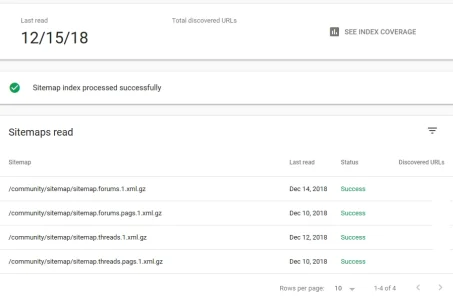

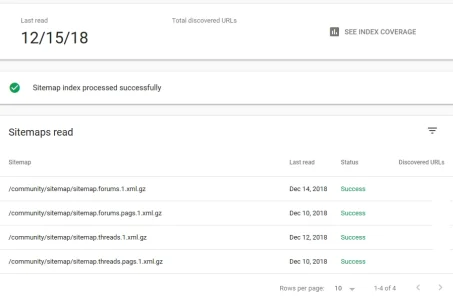

It also seems like Google can read the sitemaps generated by XenForo:

Any guidance on this would be very much appreciated. It feels like I'm not getting the traffic I used to get before I switched to SSL.

The service provider tried to access Google with wget from the web server hosting my forum, and it worked. Where do I go from here?

I am sorry to have to say this, but:

Your service provider is incompetent.

An HTTPS request with wget from the command line runs in a completely different environment from curl/socket operations in PHP within the webserver (mod_php) or PHP-FPM.

Jesus Christ, a month to investigate an issue like this is insane; normally it should be resolved within minutes.

I'd expect this to be an issue with certificates, an outdated OpenSSL version or firewall settings.

To check whether it is a firewall issue, I'd test whether a raw socket connection without SSL to www.google.com on port 443 can be opened from a PHP script executed by the webserver.

If this succeeds, go on to check PHP's OpenSSL configuration.
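
The two checks above could look roughly like this when saved as a PHP file and opened through the web server. It is a sketch assuming PHP 7+; `canOpenTcp` and `opensslReport` are hypothetical helper names, and the report only lists the OpenSSL version and the CA certificate locations PHP is configured with, which is where missing or outdated certificates tend to show up.

```php
<?php
// Sketch (assumes PHP 7+). Run it through the webserver, not the CLI,
// so it hits the same PHP process/user restrictions as the forum.

// Step 1: plain TCP connection without SSL. A failure here points at a
// firewall, DNS or outbound-port restriction for the PHP process.
function canOpenTcp(string $host, int $port, int $timeout = 10): bool
{
    $errno = 0;
    $errstr = '';
    $socket = @stream_socket_client("tcp://{$host}:{$port}", $errno, $errstr, $timeout);
    if ($socket === false) {
        echo "TCP connection to {$host}:{$port} failed: #{$errno} {$errstr}\n";
        return false;
    }
    fclose($socket);
    return true;
}

// Step 2: summarise PHP's OpenSSL setup: library version and the CA
// certificate locations it may use to verify servers.
function opensslReport(): array
{
    if (!extension_loaded('openssl')) {
        return ['loaded' => false];
    }
    $loc = openssl_get_cert_locations();
    $caFile = $loc['default_cert_file'] ?? '';
    return [
        'loaded'            => true,
        'version'           => OPENSSL_VERSION_TEXT,
        'ini_cafile'        => $loc['ini_cafile'] ?? '',  // openssl.cafile in php.ini
        'ini_capath'        => $loc['ini_capath'] ?? '',  // openssl.capath in php.ini
        'default_cert_file' => $caFile,
        // @: open_basedir may block this check and emit a warning.
        'default_cert_file_readable' => $caFile !== '' && @is_readable($caFile),
        'default_cert_dir'  => $loc['default_cert_dir'] ?? '',
    ];
}

header('Content-Type: text/plain');
if (canOpenTcp('www.google.com', 443)) {
    echo "TCP connection OK, now checking the OpenSSL setup:\n";
    print_r(opensslReport());
}
```

An empty `ini_cafile` together with an unreadable `default_cert_file` would be one plausible reason for PHP failing certificate verification while wget, which uses the system's own setup, succeeds.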

This is what my robots.txt looks like:

```
User-agent: *
Disallow: /account/
Disallow: /find-new/
Disallow: /help/
Disallow: /goto/
Disallow: /login/
Disallow: /lost-password/
Disallow: /misc/style/
Disallow: /online/
Disallow: /posts/
Disallow: /recent-activity/
Disallow: /register/
Disallow: /search/
Disallow: /admin.php
Disallow: /index.php?account/
Disallow: /index.php?find-new/
Disallow: /index.php?help/
Disallow: /index.php?goto/
Disallow: /index.php?login/
Disallow: /index.php?lost-password/
Disallow: /index.php?misc/style/
Disallow: /index.php?online/
Disallow: /index.php?posts/
Disallow: /index.php?recent-activity/
Disallow: /index.php?register/
Disallow: /index.php?search/
Disallow: /admin.php
Allow: /
Sitemap: https://www.mydomain.com/sitemap/sitemap.xml.gz
```

In another thread I saw that the sitemap URL nowadays is /sitemap/sitemap.php. Should I change the robots.txt to that?

If this robots.txt is for a XenForo website, the correct Sitemap entry would be https://www.mydomain.com/sitemap.php

Perfect. And that's the URL I will submit to Google Search Console as well?

XenForo pings Google and other search engines whenever a new sitemap has been generated; this allows them to grab updates "immediately".

Otherwise they would have to re-check sitemaps at regular intervals (which they also do) to find updates, though that may take longer than with pings.
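
A ping is typically just an HTTP request to the search engine with the sitemap address passed as a query parameter, which is why XenForo needs an outbound HTTPS connection to www.google.com:443. The sketch below only builds such a URL; the `https://www.google.com/ping?sitemap=` endpoint is an assumption based on Google's historical ping interface (Google has since announced its retirement), `buildSitemapPingUrl` is a hypothetical name, and XenForo's own code may differ.

```php
<?php
// Sketch: build a sitemap ping URL. The endpoint is an assumption based on
// Google's historical ping interface; XenForo's implementation may differ.
function buildSitemapPingUrl(string $endpoint, string $sitemapUrl): string
{
    // The sitemap address travels as a query parameter, so encode it fully.
    return $endpoint . '?sitemap=' . rawurlencode($sitemapUrl);
}

echo buildSitemapPingUrl('https://www.google.com/ping', 'https://www.mydomain.com/sitemap.php'), "\n";
// prints https://www.google.com/ping?sitemap=https%3A%2F%2Fwww.mydomain.com%2Fsitemap.php
```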
