And even then it will block many legit visitors too for sure.

It's certainly not "for sure", but I do appreciate your concerns... don't use it if you're worried. Have you found that FBHP has accidentally detected a human as a bot? StopBotters goes a little further to make sure this never happens (multiple bot triggers have to occur before StopBotters/StopProxies ever records a spam bot). I haven't had a case where StopBotters has detected a bot but the FBHP mechanism somehow didn't, and I expect I never will (unless FBHP is bypassed).
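To illustrate the "multiple triggers" idea, here is a minimal sketch. It is not the actual StopBotters/StopProxies code: the trigger names and the threshold of 2 are made up for the example. The point is only that a single suspicious signal is never enough to record a bot.

```python
# Hypothetical sketch: the trigger names and the threshold are assumptions,
# not the real StopBotters/FBHP signals.
MIN_TRIGGERS = 2  # assumed: more than one signal must fire


def should_record_as_bot(triggers, min_triggers=MIN_TRIGGERS):
    """Return True only when at least `min_triggers` signals fired.

    `triggers` maps a signal name to whether it fired for this request.
    """
    fired = sum(1 for hit in triggers.values() if hit)
    return fired >= min_triggers
```

With this shape, a real user whose password manager trips one signal is not recorded, while a request that trips several at once is.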

You are right about IP addresses re-entering the pool, but as mentioned, all the IP addresses that are blocked are ones that have been detected as active spam bots within the last few weeks.

The concern about script kiddies banning an IP address isn't very valid. The techniques used to detect bots are heavily optimised for detecting XRumer (this is what hits and spams most forums the vast majority of the time).

XRumer is quite a hefty resource

People usually run it from dedicated servers

It's quite costly (~$600 last time I checked)

Most XRumer users use a dedicated box as a proxy server, or buy a list of proxies (sometimes they use free proxies, but find these are already banned on most forums)... These proxies are usually paid for a month at a time (some sites offer daily proxies, but the proxy owner keeps them for a lot longer). They won't be using their own IP addresses. So these IP addresses are out of the domain of your normal users for a while. Eventually these proxies will go back into the pool, but 3 weeks is a reasonable window to keep forum users on variable IP addresses from being accidentally detected as a bot (even if a proxy is detected as a spammer, the spammer / proxy provider will usually have ownership of that proxy for at least a month).
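The expiry idea can be sketched like this. It's only an illustration under my own assumptions (an in-memory dict, a hypothetical `ExpiringBlocklist` class, and the 3-week figure from above), not how the StopBotters database is actually built:

```python
from datetime import datetime, timedelta

# Assumed window, taken from the "3 weeks" figure in the post above.
BLOCK_WINDOW = timedelta(weeks=3)


class ExpiringBlocklist:
    """Hypothetical in-memory blocklist whose entries lapse after a window."""

    def __init__(self, window=BLOCK_WINDOW):
        self.window = window
        self._last_seen = {}  # ip -> datetime of the most recent bot detection

    def record(self, ip, when):
        """Remember that `ip` was detected as a bot at time `when`."""
        self._last_seen[ip] = when

    def is_blocked(self, ip, now):
        """True if `ip` was detected as a bot within the window before `now`."""
        seen = self._last_seen.get(ip)
        if seen is None:
            return False
        if now - seen > self.window:
            # The address has probably gone back into the pool, so forget it.
            del self._last_seen[ip]
            return False
        return True
```

For example, an address recorded on 1 January is still blocked 20 days later, but is let through again after 22 days unless it has been detected again in the meantime.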

If the spammer is running a basic script via a browser, FBHP goes out of its way not to detect it as a bot (real people do use browser plugins to auto-complete forms, or browser plugins for password managers). Users that are using browsers are very unlikely to ever be detected as a bot, regardless of what browser plugin they are using. A combination of events has to be triggered for a visitor to be detected as a spam bot, and this only happens with an outside application (such as XRumer). People running scripts via browsers won't be detected as spam bots... and to be honest, I don't really care about them (they are not firing 1000 requests every few seconds at hundreds of thousands of forums overnight). In fact, compared to XRumer users, they are very rare.

StopBotters / StopProxies does avoid errors introduced by humans falsely reporting IP addresses as bots (maliciously or accidentally). I've seen this over and over on most anti-spam APIs; the cause is human reporting, or incorrect use of their reporting mechanism. That cannot happen with StopBotters/StopProxies: nobody has access to it, and nobody can manually report forum users to it.

It's also a closed network: nobody apart from XenForo forums can query or use that database. The reason is that the effectiveness of many APIs has started to dwindle, and will continue to slip as more XRumer users use the open networks to know when to change their settings, or use things such as Xblack.txt. This allows the spam bots to go unnoticed for much longer.

By removing the information from the bot users, making the methods unobtainable, and not making the data public, we put XenForo users that use StopBotters in a much stronger position.

But... if you cannot send user data to a third party, then obviously no API can be used in your case (all APIs need some information to be able to detect whether the user is a bot or not). The lack of information on the StopBotters/StopProxies site will possibly stay that way for a while, so if this is something you can't live with for your forum users, then don't use it.

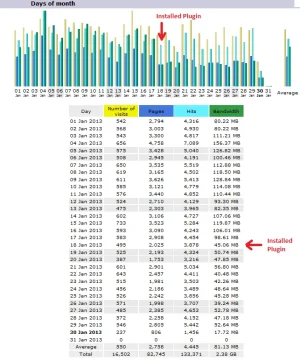

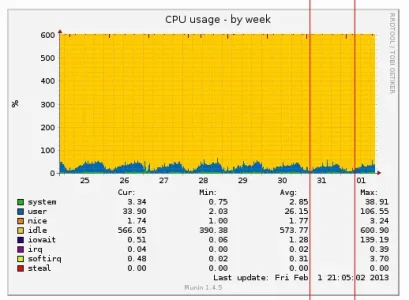

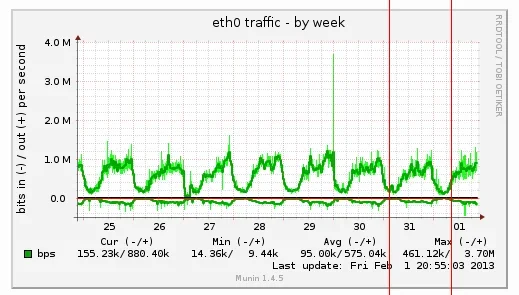

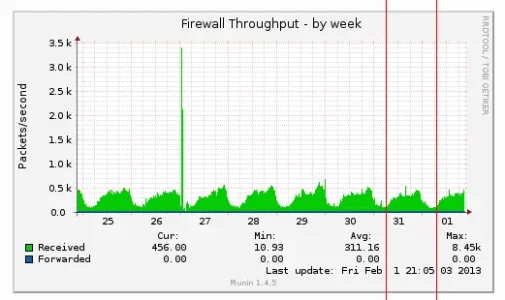

But... as a final note, this mod (StopBotResources) is not for all forums. It's to be used for small forums (usually on shared hosts) that are finding spam bots are taking up a considerable amount of resources... I myself was in this situation. It is a life saver for those forums that just can't continue due to the amount of resources spam takes up (I know of sites that have closed down just due to spam attempting to hit them, even if the spam wasn't successful). It doesn't sound like you are in that situation, so it's unlikely this plugin will benefit you considerably.

I don't like to take the role of "devils advocate" here

Don't worry, I can see where you are coming from, but I know the other side... and everything is being done to avoid false positives... it's designed with this in mind. It's currently very easy to detect bots, but harder to make sure you never accidentally detect a human as a bot (this is something I keep repeating in PMs... so making sure false positives don't occur is the priority).