webbouk

Well-known member

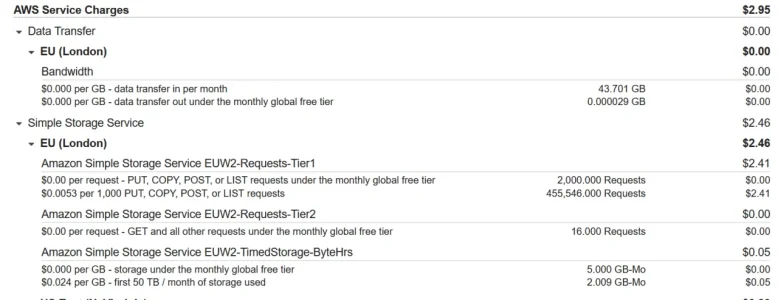

I'm currently running two nightly backups, both using JetBackup: one backs up all cPanel accounts to the server itself, and the other sends the same backup off-server to Amazon AWS as insurance should the server ever fail.

Both backups are set to keep daily copies for seven days and then overwrite the oldest, leaving rolling seven-day backups.

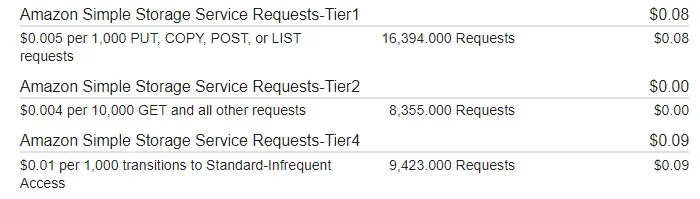

However, the AWS free tier only allows 2,000 Put, Copy, Post or List requests to Amazon S3, and I exceeded that in less than two days, so I'm now starting to accrue charges.
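To show how quickly a request allowance like that runs out, here is a rough Python sketch. The 2,000 figure is the one quoted above; the nightly request count of 1,200 is purely hypothetical, since the real number depends on how many objects JetBackup uploads and lists per run.

```python
FREE_TIER_REQUESTS = 2_000  # Put/Copy/Post/List allowance quoted above


def nights_until_exceeded(requests_per_night: int,
                          allowance: int = FREE_TIER_REQUESTS) -> int:
    """Return the backup night on which cumulative requests first exceed the allowance."""
    if requests_per_night <= 0:
        raise ValueError("requests_per_night must be positive")
    # After n nights the total is n * requests_per_night; we want the
    # smallest n for which that total is strictly greater than the allowance.
    return allowance // requests_per_night + 1


if __name__ == "__main__":
    # Hypothetical load: 1,200 requests per nightly backup run.
    print(nights_until_exceeded(1_200))
```

With any nightly count above 1,000 requests, the allowance is gone by the second night, which matches what I'm seeing.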

What other options are available for large website/forum backups 'off server', preferably free or cheap?
