Winterstride

Member

Hi, I barely know what I'm doing.

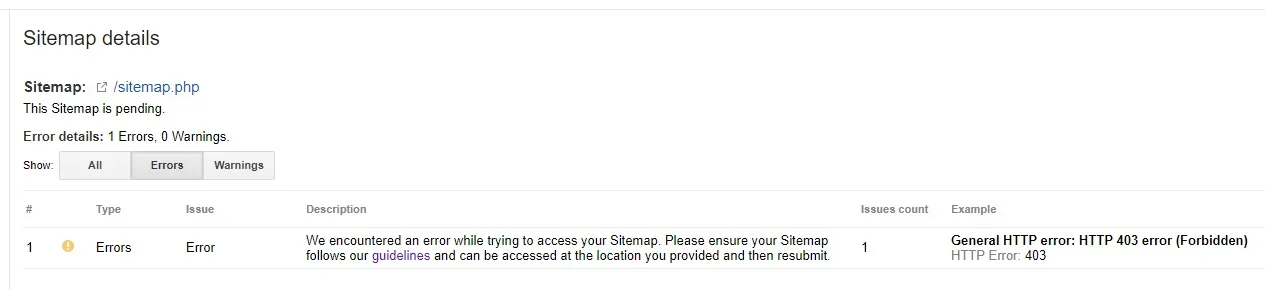

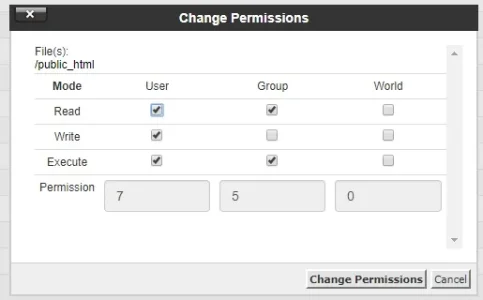

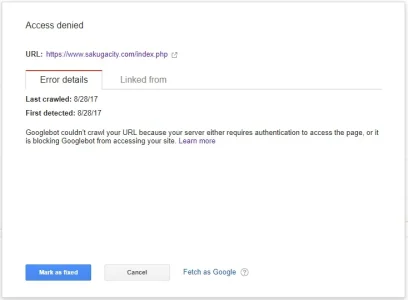

Our website stopped getting crawlers at some point and no longer seems to be indexed all that well on the Googles. We realized that we didn't have something called an SSL Certificate, which my admins tell me is very important to have for this sorta thing, so we got it and... still nothing!

From my research, it seems we may just be in the "wait it out" phase, and Google may get on top of it sometime down the line. But I thought, hey, in the meantime, why not ask around and see what else may be contributing to our lack of Google indexing? Couldn't hurt, right?

Our site is here, if you need to take a look: www.sakugacity.com

Thank you very much for your time.
