GrnEyedDvl

I will spoiler some images at the bottom of the post.

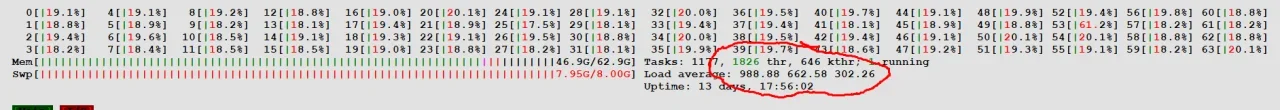

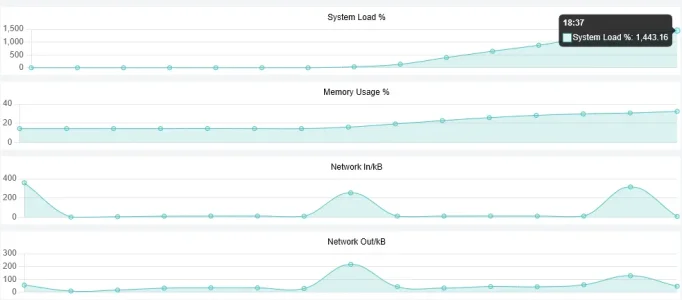

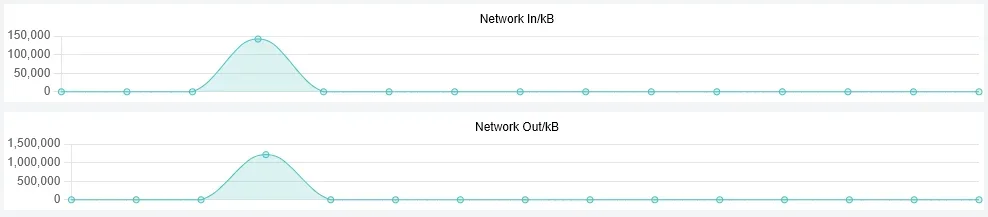

I wanted to share what happened overnight so people have some idea of what to expect and can come up with ways to handle it. I run a dedicated Ubuntu server (64 cores, a ton of RAM, nginx), and starting at about 9:30 PM US Central time we began getting hit with 100,000 or more requests per minute. We are already behind Cloudflare and have its bot and scrape protection turned on. When someone told me the site was timing out, I jumped on and found the server load at nearly 1000 and climbing.

I immediately put Cloudflare in Under Attack mode, which slowed things down a little before the traffic picked right back up. Server load eventually peaked just under 1200, at which point I simply killed nginx and MySQL. Most of the traffic (I will come back to this in a second) was getting through Cloudflare because the bots were disguising their User-Agent strings, changing them every few minutes to look like normal users, and they totally ignored robots.txt.

I saw thousands of IPs, mostly from Brazil but some from China/Hong Kong and Singapore as well. As near as I can tell, roughly 70% of this traffic went through the CF protection and roughly 30% went directly to my IP. I am not 100% sure of that, because I think a TON of log entries were still in the buffer and never got written before I killed nginx, and I generally do not log every single access anyway. Either stream by itself would have been a major issue; combined, the server just could not handle it.
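A split like that 70/30 can be estimated from access logs by checking each request's source address against Cloudflare's published ranges. The Python below is a minimal sketch, assuming the log records the TCP peer address (no real_ip rewriting), so proxied requests show a Cloudflare edge address while direct hits show the attacker's own address. CF_RANGES is only an illustrative subset; Cloudflare publishes the full, current list at https://www.cloudflare.com/ips/. The function names are hypothetical.

```python
import ipaddress

# Illustrative subset of Cloudflare's published ranges; use the full current list in practice.
CF_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "173.245.48.0/20", "103.21.244.0/22", "104.16.0.0/13", "172.64.0.0/13",
        "2400:cb00::/32", "2606:4700::/32",
    )
]


def via_cloudflare(addr: str) -> bool:
    """True if addr falls inside one of the Cloudflare ranges (IPv4 or IPv6)."""
    ip = ipaddress.ip_address(addr)
    # An IPv4 address is never "in" an IPv6 network (and vice versa), so mixing is safe.
    return any(ip in net for net in CF_RANGES)


def cloudflare_share(addresses) -> float:
    """Fraction of requests that arrived through Cloudflare rather than directly."""
    addresses = list(addresses)
    if not addresses:
        return 0.0
    return sum(via_cloudflare(a) for a in addresses) / len(addresses)


if __name__ == "__main__":
    sample = ["173.245.48.10", "104.16.1.1", "203.0.113.5", "2606:4700::1"]
    print(f"{cloudflare_share(sample):.0%} via Cloudflare")
```

Anything that is not a Cloudflare address in that count is traffic bypassing the proxy, which is exactly what the firewall change below shuts off.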

After blocking the entire world outside the US with a CF rule so I could get web services back up and start looking at things, I noticed the User-Agent changes on identical IPs, and that some requests were not coming through the Cloudflare proxy but were hitting my server directly. That is a problem.
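Spotting User-Agent rotation on identical IPs can be automated. A rough Python sketch, assuming nginx's default "combined" log format and that the first field is the real client address (a direct hit, or nginx's real_ip module configured); behind Cloudflare without real_ip it would group by Cloudflare edge address instead. The min_agents threshold of 3 is an arbitrary illustrative value, and rotating_agents is a hypothetical helper, not part of any tool mentioned in this post.

```python
import re
from collections import defaultdict

# nginx's default "combined" format: client address first, User-Agent as the last quoted field.
COMBINED = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]*\] "[^"]*" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def rotating_agents(lines, min_agents=3):
    """Map each IP seen with at least min_agents distinct User-Agents to that count."""
    agents = defaultdict(set)
    for line in lines:
        match = COMBINED.match(line)
        if match:  # silently skip lines in any other format
            agents[match["ip"]].add(match["ua"])
    return {ip: len(uas) for ip, uas in agents.items() if len(uas) >= min_agents}
```

Passing an open log file works because iterating a file yields lines; sorting the resulting dict by its values puts the most aggressive rotators first.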

I found a script that updates the UFW firewall rules with Cloudflare IP addresses. Basically, you shut down all services (web, database, etc.) so you can work, quickly disable UFW, and reset all the rules with the ufw reset command. Then allow SSH from your IP and enable the firewall again. Now you have a firewall that will not allow anything else in at all.

Clone the script's git repository, then edit the file. By default it allows all CF IP addresses to access all ports, which is a bit insane. There are a few options in there: one to allow port 80, one to allow port 443, and one to allow both. Comment all of them out and then uncomment the option for port 443. That is really all CF needs to pass to your server, as long as Cloudflare's SSL/TLS mode is Full or Full (strict); in Flexible mode Cloudflare connects to your origin over port 80.
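The script itself is not reproduced here. As a rough sketch of the same lockdown sequence, the Python below only builds the ufw commands (reset, SSH from your own address, enable, then port 443 from each Cloudflare range) so you can review them before running anything. The function name, the 198.51.100.23 placeholder home address, and the short range list are illustrative assumptions; the --force flags skip ufw's confirmation prompts.

```python
import ipaddress


def lockdown_plan(home_ip: str, cf_ranges, port: int = 443) -> list[str]:
    """Return ufw commands mirroring the steps above; nothing is executed."""
    home = ipaddress.ip_address(home_ip)  # fail early on a typo in your own address
    commands = [
        "ufw --force reset",  # disables ufw and drops every existing rule
        f"ufw allow from {home} to any port 22 proto tcp",  # keep SSH reachable from home
        "ufw --force enable",  # with ufw's usual default policy, other inbound traffic is denied
    ]
    for cidr in cf_ranges:
        net = ipaddress.ip_network(cidr.strip())  # raises ValueError on a malformed range
        commands.append(f"ufw allow from {net} to any port {port} comment 'Cloudflare IP'")
    return commands


if __name__ == "__main__":
    for cmd in lockdown_plan("198.51.100.23", ["173.245.48.0/20", "2400:cb00::/32"]):
        print(cmd)
```

Printing the plan first and running it by hand makes it harder for a typo to lock you out of SSH.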

Run the CF IP script and you will end up with a UFW ruleset like this (the REDACTED part is my home IP address). Then you can add back anything you need for FTP, email, or cPanel and restrict it to wherever it needs to come from. Finally, set up a cron job to run the CF IP script once or twice a day so you catch it when Cloudflare updates its IP list.

```
     To                         Action      From
     --                         ------      ----
[ 1] 22/tcp                     ALLOW IN    REDACTED
[ 2] Anywhere                   ALLOW IN    REDACTED
[ 3] 443                        ALLOW IN    173.245.48.0/20    # Cloudflare IP
[ 4] 443                        ALLOW IN    103.21.244.0/22    # Cloudflare IP
[ 5] 443                        ALLOW IN    103.22.200.0/22    # Cloudflare IP
[ 6] 443                        ALLOW IN    103.31.4.0/22      # Cloudflare IP
[ 7] 443                        ALLOW IN    141.101.64.0/18    # Cloudflare IP
[ 8] 443                        ALLOW IN    108.162.192.0/18   # Cloudflare IP
[ 9] 443                        ALLOW IN    190.93.240.0/20    # Cloudflare IP
[10] 443                        ALLOW IN    188.114.96.0/20    # Cloudflare IP
[11] 443                        ALLOW IN    197.234.240.0/22   # Cloudflare IP
[12] 443                        ALLOW IN    198.41.128.0/17    # Cloudflare IP
[13] 443                        ALLOW IN    162.158.0.0/15     # Cloudflare IP
[14] 443                        ALLOW IN    104.16.0.0/13      # Cloudflare IP
[15] 443                        ALLOW IN    104.24.0.0/14      # Cloudflare IP
[16] 443                        ALLOW IN    172.64.0.0/13      # Cloudflare IP
[17] 443                        ALLOW IN    131.0.72.0/22      # Cloudflare IP
[18] 443                        ALLOW IN    2400:cb00::/32     # Cloudflare IP
[19] 443                        ALLOW IN    2606:4700::/32     # Cloudflare IP
[20] 443                        ALLOW IN    2803:f800::/32     # Cloudflare IP
[21] 443                        ALLOW IN    2405:b500::/32     # Cloudflare IP
[22] 443                        ALLOW IN    2405:8100::/32     # Cloudflare IP
[23] 443                        ALLOW IN    2a06:98c0::/29     # Cloudflare IP
[24] 443                        ALLOW IN    2c0f:f248::/32     # Cloudflare IP
[25] 443 (v6)                   DENY IN     Anywhere (v6)
```

That solves one problem: direct access that bypasses CF. For the requests that do make it through CF, I finally got around to setting up rate limiting in nginx.

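In /etc/nginx/nginx.conf, inside the http block:

```nginx
# Track clients by IP in a 10 MB shared zone; each may average 10 requests per second
limit_req_zone $binary_remote_addr zone=mylimit:10m rate=10r/s;
```

This keys the limit on $binary_remote_addr, so behind Cloudflare it only sees real visitors if nginx's real_ip module is configured (set_real_ip_from for Cloudflare's ranges plus real_ip_header CF-Connecting-IP); otherwise every proxied request carries a Cloudflare edge address, and everyone coming through the same edge node shares one limit.

Then, inside either your /etc/nginx/conf.d/default file or your /etc/nginx/sites-available/your.site.vhost file, add the limit to the location block. This is the location block suggested in the XenForo manual. I gave it a burst of 15, meaning each address can go up to 15 requests over the 10 r/s rate before nginx starts rejecting requests; with nodelay, those extra requests are served immediately instead of being queued.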

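```nginx
location / {
    # Apply the mylimit zone; up to 15 requests over the rate are served at once,
    # anything beyond that is rejected (503 by default)
    limit_req zone=mylimit burst=15 nodelay;
    index index.php index.html index.htm;
    # Serve existing files/directories, otherwise hand the request to XenForo's index.php
    try_files $uri $uri/ /index.php?$uri&$args;
}
```

And here are a couple of shots of how bad this was. After I killed nginx and the load started dropping, I grabbed a screenshot. I also have a shot from the ISPConfig monitor that shows data transfer over a 5-minute window; that big jump makes normal traffic look like zero.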
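To get a feel for what rate=10r/s with burst=15 nodelay does to a single address, here is a small Python model of nginx's leaky-bucket accounting. It is a simplified sketch, not nginx's code: it keeps one excess counter per key in milli-requests, drains it at the configured rate, and rejects a request once the counter would exceed the burst (nginx answers those with 503 unless limit_req_status says otherwise). Delays are ignored, which matches nodelay; the LimitReq class name is hypothetical.

```python
class LimitReq:
    """Simplified per-key leaky bucket modelled on nginx limit_req with nodelay."""

    def __init__(self, rate_per_s: int, burst: int) -> None:
        self.rate = rate_per_s * 1000  # milli-requests drained per second
        self.burst = burst * 1000  # extra milli-requests tolerated above the rate
        self.state: dict[str, tuple[int, int]] = {}  # key -> (excess, last accepted ms)

    def allow(self, key: str, now_ms: int) -> bool:
        if key not in self.state:  # the first request from a key is always accepted
            self.state[key] = (0, now_ms)
            return True
        excess, last = self.state[key]
        elapsed = max(0, now_ms - last)
        # Drain what the rate allows since the last accepted request, then add this one.
        excess = max(0, excess - self.rate * elapsed // 1000) + 1000
        if excess > self.burst:
            return False  # over rate + burst: rejected, stored state left unchanged
        self.state[key] = (excess, max(last, now_ms))
        return True


if __name__ == "__main__":
    limiter = LimitReq(rate_per_s=10, burst=15)
    burst_ok = sum(limiter.allow("203.0.113.7", 0) for _ in range(30))
    later_ok = sum(limiter.allow("203.0.113.7", 1000) for _ in range(30))
    print(burst_ok, later_ok)  # 16 10
```

With these settings a client firing 30 requests at once gets 16 through (the first plus 15 burst), and after one quiet second it can send 10 more, while a visitor making a steady 5 requests per second is never rejected.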

Hope this helps someone out.