LaxmiSathy

Hello,

I recently migrated my vBulletin board to XenForo.

vBulletin is installed at /public_html/forums, and XenForo is installed at public_html/community.

I have a few questions about robots.txt:

1) Should I include

Disallow: /forums/

to tell Google not to crawl the /forums pages?
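One way to see exactly what a rule like `Disallow: /forums/` covers is Python's standard-library `urllib.robotparser`. This is a minimal sketch: the host `example.com` and both URLs are placeholders, not the real site. Python's parser applies the first matching rule in a group, while Google documents most-specific-match precedence and `*`/`$` wildcards; for plain prefix rules like the ones in this post, both give the same answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents; "example.com" below is a placeholder host.
ROBOTS_TXT = """\
User-agent: *
Disallow: /forums/
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A hypothetical old vBulletin URL and a hypothetical new XenForo URL.
for url in (
    "https://example.com/forums/showthread.php?t=123",
    "https://example.com/community/threads/welcome.1/",
):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{url} -> {verdict}")
```

The first URL is blocked because its path starts with `/forums/`; the second is allowed. Keep in mind that a crawler honouring this rule never requests the old `/forums/` URLs at all, so it would also never see any redirects set up on them.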

2) I have added

Disallow: /community/members/

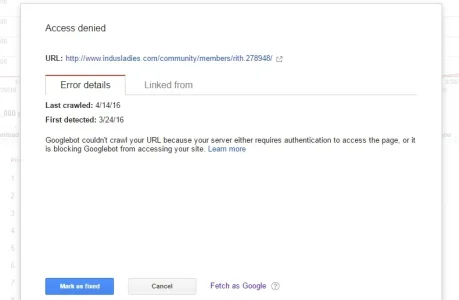

to block all the member pages, but I am getting "Access Denied" crawl errors for those pages.

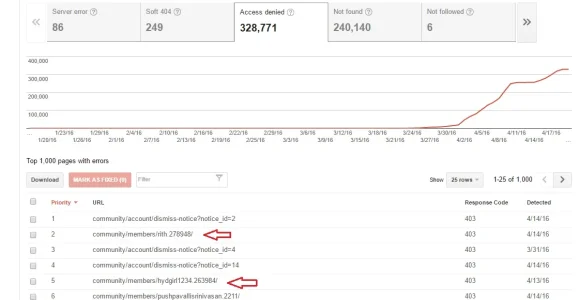

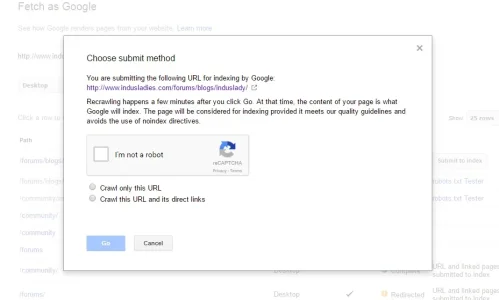

I have submitted the updated robots.txt and also ran "Fetch as Google", but Google still shows 300,000+ member pages with "Access Denied" crawl errors.

Is there anything else I should do?

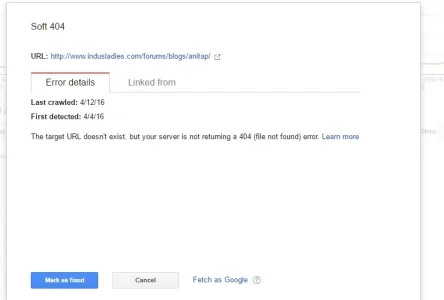

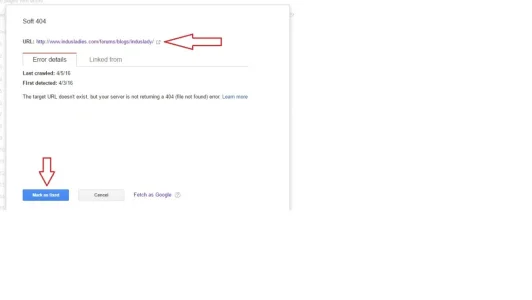
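To confirm which URLs `Disallow: /community/members/` actually matches, the same standard-library parser can be used. A sketch only: `example.com` is a placeholder, and the member and thread URLs are hypothetical, assuming XenForo friendly URLs under `/community/`. It checks the robots.txt side only and says nothing about the HTTP status the server returns for those pages, which is a separate thing to look at.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with the member rule; "example.com" is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /community/members/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs mapped to the expected can_fetch() result.
checks = {
    "https://example.com/community/members/anitap.123/": False,  # profile page: blocked
    "https://example.com/community/members": True,  # no trailing slash: prefix does not match
    "https://example.com/community/threads/welcome.1/": True,  # ordinary thread: allowed
}

for url, expected in checks.items():
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{url} -> {'allowed' if allowed else 'blocked'} (expected {expected})")
```

Rules are prefix matches on the path, so the trailing slash matters: the bare `/community/members` URL is not covered by this rule.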

3) I also used to have vBulletin blogs, with a few blog pages such as

/forums/blogs/anitap

which no longer exist because I imported all of the blog content into XenForo threads. Should I also disallow these URLs in robots.txt?
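Because robots.txt rules match by path prefix, `/forums/blogs/anitap` already falls under `/forums/`. The sketch below compares two hypothetical robots.txt files against that blog path; `example.com` and the helper name `is_allowed` are placeholders for illustration.

```python
from urllib.robotparser import RobotFileParser

BLOG_URL = "https://example.com/forums/blogs/anitap"  # placeholder host


def is_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Hypothetical helper: parse robots.txt text and check a single URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


ROBOTS_FORUMS_RULE = "User-agent: *\nDisallow: /forums/\n"
ROBOTS_MEMBERS_ONLY = "User-agent: *\nDisallow: /community/members/\n"

print(is_allowed(ROBOTS_FORUMS_RULE, BLOG_URL))   # False: /forums/ already covers the blog path
print(is_allowed(ROBOTS_MEMBERS_ONLY, BLOG_URL))  # True: no rule matches /forums/blogs/
```

If `Disallow: /forums/` from question 1 is in place, a separate rule for the blog URLs would add nothing; without it, they are not covered.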
