
XF 2.2 How to back up your forum

- Thread starter 80sDude


M@rc

Well-known member

> I remember backing up vBulletin with MySQL back in the day, but I'm wondering whether that is also the method for XenForo, and if so, can somebody refresh my memory on how it's done? Thanks

It depends on your web host. If your server comes with cPanel, it's just a click of a button under the Backups icon. If the server doesn't have a control panel, you'll need to run the mysqldump command.
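For anyone who does have shell (SSH) access, here is a minimal sketch of what "run the mysqldump command" can look like. The user name, database name and output file are placeholders; on XenForo 2.x the real database credentials are in `src/config.php`. `--single-transaction` takes a consistent snapshot of InnoDB tables without locking the live forum, and the dump is gzipped on the fly to save space.

```shell
#!/usr/bin/env bash
# Minimal sketch: one-off dump of a XenForo database with mysqldump.
# DB user, DB name and output path are placeholders; take the real
# values from $config['db'] in src/config.php.
set -o pipefail

# dump_name PREFIX DATE -> e.g. forum-2024-05-01.sql.gz
dump_name() {
  printf '%s-%s.sql.gz\n' "$1" "$2"
}

# backup_db USER DB OUTFILE
backup_db() {
  local user="$1" db="$2" out="$3"
  # --single-transaction: consistent InnoDB snapshot without table locks.
  # -p with no value attached makes mysqldump prompt for the password
  # instead of exposing it on the command line.
  mysqldump --single-transaction --default-character-set=utf8mb4 \
    -u "$user" -p "$db" | gzip > "$out"
}
```

Usage, with hypothetical names: `backup_db forumuser forumdb "$(dump_name forum "$(date +%F)")"`, then download the resulting `.sql.gz` file.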

There are also add-ons you can download; have a search in the Resource Manager for them.

MartyFeldman

Member

How would a non-IT person start from scratch please?

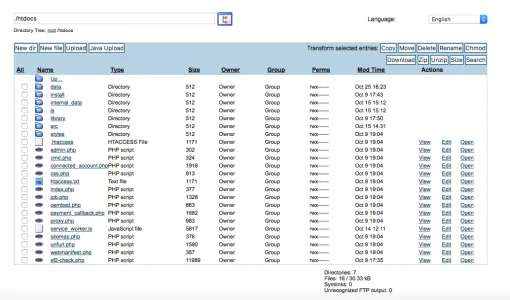

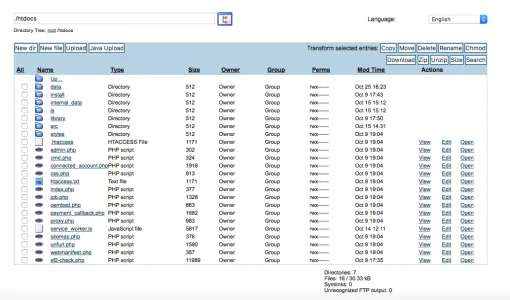

My host doesn't have cPanel but does permit FTP access albeit a very basic form. This is what we see:

What do we back up? All of it?

Had a look at some of the add-ons available but they're executable files and the Host doesn't have php exec function enabled and won't - its a shared hosting environment.

As for MySQLdump to deal with the database well that requires writing some code into something or other and not being IT techies (we need nice idiot proof GUI interfaces) no one here is brave enough to do that for fear of FUBARing what is a busy forum.


You could consider downloading an FTP client, which will make things a bit easier (Cyberduck, FileZilla...). For the MySQL part, you could always use MySQL Workbench; it will also help you visualize your data.


MartyFeldman

Member

Thanks

In theory you only need the data and internal_data directories, and possibly the style directories if you have uploaded custom logos, etc.

All of the core files for XF and add-ons can be obtained again from your account, the RM, or third-party sites.

However, it's much easier to just download the entire contents of the directory where XF is installed.

In the case of your screenshot above, that would be everything in the htdocs directory.
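If the host does offer a shell (SSH), packing the files into one archive on the server first is usually much quicker than downloading thousands of small files over FTP. A minimal sketch, assuming `tar` is available on the server; the install path, archive name and the `archive_forum` helper are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: archive a XenForo install, or only selected directories.
# Install path and archive name are placeholders. Keep OUT_FILE outside
# SRC_DIR, or tar may try to include its own output.

# archive_forum SRC_DIR OUT_FILE [SUBDIR...]
# No SUBDIRs: archive everything under SRC_DIR (the whole install).
# With SUBDIRs: archive only those, e.g. data internal_data styles.
archive_forum() {
  local src="$1" out="$2"
  shift 2
  if [ "$#" -eq 0 ]; then
    set -- .
  fi
  # -C enters SRC_DIR first so paths in the archive are relative;
  # -z compresses the archive with gzip.
  tar -czf "$out" -C "$src" "$@"
}
```

For example, `archive_forum "$HOME/htdocs" "$HOME/forum-files.tar.gz"` for everything, or `archive_forum "$HOME/htdocs" "$HOME/forum-data.tar.gz" data internal_data` for just the data directories.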


Mr Lucky

Well-known member

You can also export/import databases via phpMyAdmin (you may need the host to tweak things to increase the import quota, though).


Mr Lucky

Well-known member

> I wouldn't trust phpMyAdmin for anything over about 50MB.

Hence I mentioned the host. Mattw has tweaked ours so that imports over 50MB work fine.

But I'd use JetBackup in preference to cPanel or phpMyAdmin, so I time any updates etc. to coincide with the JetBackup times.
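Where SSH access is available, another way around the phpMyAdmin upload limit is to restore from the command line, streaming the dump straight into the `mysql` client so no web upload is involved. A sketch, assuming a gzip-compressed dump and an existing (normally empty) target database; the names and the `restore_db` helper are placeholders:

```shell
#!/usr/bin/env bash
# Sketch: restore a gzipped SQL dump with the mysql command-line client,
# bypassing phpMyAdmin's upload size limit. User, database and file
# names are placeholders; the target database must already exist.
set -o pipefail

# restore_db USER DB DUMP_FILE
restore_db() {
  local user="$1" db="$2" dump="$3"
  # gunzip -c streams the decompressed SQL to stdout; -p with no value
  # attached makes mysql prompt for the password on the terminal.
  gunzip -c "$dump" | mysql --default-character-set=utf8mb4 -u "$user" -p "$db"
}
```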

Rhody

Well-known member

Don't forget, there are two different areas that need backing up: the public_html files and the SQL database.

I set a cron job to run at 3am and 3pm that backs up the SQL database in a 7-day rotation, reusing each day's file 7 days later.

For example:

```
# In a crontab, % must be written \%; %a is the weekday (Mon..Sun), giving the 7-day rotation.
mysqldump --opt --default-character-set=utf8mb4 -uUSERNAME -pPASSWORD DATABASE_NAME > /home/ACCOUNTNAME/backup/XYZFORUM-bkup-$(date +\%a).sql
```

I have another cron job that creates a daily archive of the html folder.

For example:

```
# -c creates a plain (uncompressed) tar archive; add -z for gzip compression.
tar -cf /home/ACCOUNTNAME/backup/XYZFORUM-BU.tar /home/ACCOUNTNAME/public_html/
```

A script on my home PC connects via FTP just after 3am and 3pm to download those archives, so there is an off-site copy as well as the one that lives hidden on the server. I keep 7 days of the tar as well, but do that locally with a script that renames the files each day.

In addition, the hosting company does a JetBackup off-site backup (but I don't really trust that one so much, and it doesn't always seem to run daily). It's a 'last resort' for me, but it's nice to have if I ever wanted to go back more than 7 days (HIGHLY unlikely).

So there are 3 backups available in an emergency. The important one, of the SQL database only, runs every 12 hours.

Good luck. Backups are super important, IMO.

On 9/11, one of the towers fell on my ISP at the time. It was months before I could access my data on their server again, and before they were back up. (No one died, thankfully.) I had backups, and was up the next day at a new ISP.

Years later, someone at another ISP's data center typed a command in error that wiped out their entire data center. It took more than a week to restore everything from an off-site last-resort backup they had (because it used so much bandwidth). I had my own backups, and was back in a few hours.

For these reasons, I strongly feel that getting backups off-site is important, too.

Mike
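A rough sketch of the home-PC side Mike describes: fetch the server's archive over FTP, then save it under the weekday name so each copy is overwritten seven days later. It assumes `curl` with FTP support and the FTP login stored in `~/.netrc` (keeping the password off the command line); the host, remote path and helper names are hypothetical, not Mike's actual script.

```shell
#!/usr/bin/env bash
# Sketch for the home PC: fetch the server's archive over FTP and keep
# a 7-day rotation by saving each copy under the weekday name.
# Host, remote path and file names are hypothetical; the FTP login is
# assumed to be in ~/.netrc.

# rotated_name FILE DAY: XYZFORUM-BU.tar + Mon -> XYZFORUM-BU-Mon.tar
rotated_name() {
  local base ext
  base=$(basename "$1")
  ext="${base##*.}"
  printf '%s-%s.%s\n' "${base%.*}" "$2" "$ext"
}

# fetch_and_rotate HOST REMOTE_PATH DEST_DIR [DAY]
# REMOTE_PATH is relative to the FTP login directory.
# DAY defaults to today's abbreviated weekday (locale-dependent).
fetch_and_rotate() {
  local host="$1" remote="$2" dest="$3" day="${4:-$(date +%a)}"
  local target
  target="$dest/$(rotated_name "$remote" "$day")"
  mkdir -p "$dest" || return 1
  # Download to a temporary name first so a failed transfer (curl exits
  # non-zero) never overwrites last week's good copy.
  curl --netrc --silent --show-error \
    -o "$target.part" "ftp://$host/$remote" &&
    mv -f -- "$target.part" "$target"
}
```

A script run by cron shortly after the server-side jobs could then call, for example, `fetch_and_rotate ftp.example.com backup/XYZFORUM-BU.tar "$HOME/forum-backups"`.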


Deleted member 184953

Guest

Hello,

Sorry for my very, very newbie question, but how can I access and back up my SQL database, which is hosted on a server, from my computer?

Some said to connect with PowerShell, but how? I've looked for help on Google, but every website on the subject starts with sample command lines and nobody explains what to do before that.

I don't understand anything...


You need to log in to the server using the host name or IP address, a user name, and a password.

That is only possible via SSH (using PuTTY, PowerShell, etc.) if that functionality is enabled on the server.

Most shared hosting does not allow SSH.

Contact your host and they will be able to assist.
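Where the host does allow SSH, the dump can run on the server and stream straight back to your own computer in one command. A sketch, assuming an OpenSSH client locally (recent Windows PowerShell includes `ssh`) and a `~/.my.cnf` on the server holding the database `user=` and `password=` under a `[mysqldump]` section, readable only by you (`chmod 600`); the SSH target, database name and `remote_dump` helper are placeholders:

```shell
#!/usr/bin/env bash
# Sketch: dump a remote database over SSH into a local gzip file.
# SSH target and database name are placeholders; the MySQL login is
# assumed to live in ~/.my.cnf on the server, so no password is typed.

# remote_dump SSH_TARGET DB OUTFILE   (SSH_TARGET like user@example.com)
remote_dump() {
  local target="$1" db="$2" out="$3"
  # The quoted command runs on the server; only the gzip output crosses
  # the network. printf %q escapes the database name for the remote shell.
  ssh "$target" \
    "mysqldump --single-transaction --default-character-set=utf8mb4 $(printf '%q' "$db") | gzip" \
    > "$out"
}
```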


MartyFeldman

Member

Just for completeness (as I hate it when a forum thread stops partway through without reaching a conclusion and sharing the knowledge), and for the benefit of any other non-IT person reading this thread in the future, I believe I managed it thanks to the advice above and to a couple of users who PM'd me as well.

1. I downloaded FileZilla and installed it on a local machine. I then used FileZilla to connect the local machine via FTP to the web server and exported (aka copied) everything in the htdocs directory on the hosted server to the local machine.

2. I downloaded MySQL Workbench and, as above, connected to the hosted server where my MySQL DB sits and exported the database to the local machine.

A manual operation, admittedly, but at least I feel more comfortable now.

The local machine runs automatic backups to a remote disk, so I also have a backup of my backup.

In future I need to learn how to automate the above manual processes rather than starting them by hand each evening before leaving, so some research is required into creating cron entries.
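For the file side on an FTP-only host, one way to replace the manual FileZilla step with something cron can run on the local machine is `lftp`'s `mirror` command; with `--only-newer` it skips files that haven't changed since the last run. A sketch, assuming lftp is installed locally and can take the FTP login from `~/.netrc`; the host, directories and `mirror_site` helper are hypothetical, and paths are assumed to contain no spaces:

```shell
#!/usr/bin/env bash
# Sketch: mirror the forum's web directory from an FTP-only host.
# Host and directory names are hypothetical; lftp is assumed to find
# the login in ~/.netrc, and paths are assumed to contain no spaces.

# mirror_site HOST REMOTE_DIR LOCAL_DIR
mirror_site() {
  local host="$1" remote="$2" local_dir="$3"
  mkdir -p "$local_dir" || return 1
  # -c runs the command string and exits; mirror copies remote -> local,
  # and --only-newer downloads only files newer than the local copies.
  lftp -c "open $host; mirror --only-newer $remote $local_dir"
}
```

A nightly crontab entry such as `0 23 * * * /path/to/pull-forum.sh`, where that hypothetical script calls `mirror_site ftp.example.com htdocs "$HOME/forum-mirror"`, would then do the copying unattended.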

A point to note for anyone else: on checking the DB backup once it had completed, it appears to have captured everything, but I did notice quite a few "Access Denied - PROCESS ... something or other about permissions" messages in the log during the transfer. It would seem I don't have the requisite permission to access and copy some things in the DB, so I need to work out what's happening there, as it was the DB owner's credentials being used to access the DB.
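Those PROCESS messages most likely come from a change in MySQL 5.7.31 and 8.0.21, after which mysqldump needs the PROCESS privilege to dump tablespace information; shared-hosting database users usually don't have it. MySQL Workbench's export runs mysqldump underneath, and the usual workaround is the `--no-tablespaces` option (Workbench may expose it among the export's advanced options). Since Workbench could reach the database remotely, the same export can also be run, and later scheduled, from the local machine's command line. A sketch; the host, database, option-file path and `dump_remote` helper are placeholders, and the option file is assumed to contain a `[client]` section with `user=` and `password=`:

```shell
#!/usr/bin/env bash
# Sketch: dump a remote MySQL database from the local machine.
# Host/database names are placeholders. The option file holds a
# [client] section with user= and password= (chmod 600 it).
set -o pipefail

# dump_remote OPTION_FILE DB_HOST DB OUTFILE
dump_remote() {
  local opts="$1" host="$2" db="$3" out="$4"
  # --defaults-extra-file must be the first option on the command line.
  # --no-tablespaces skips the tablespace dump that needs PROCESS.
  mysqldump --defaults-extra-file="$opts" -h "$host" \
    --single-transaction --no-tablespaces \
    --default-character-set=utf8mb4 "$db" | gzip > "$out"
}
```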


80sDude

Well-known member

Regarding the paths in your cron examples: is that where you have your backups stored? For instance, what exactly is /home/ACCOUNTNAME? Thanks

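`/home/ACCOUNTNAME` in those examples is presumably just the hosting account's home directory, with ACCOUNTNAME standing in for your account user name (an assumption about that setup, not confirmed in the thread). On many cPanel hosts public_html sits inside it, so a `backup` folder next to public_html can't be reached from the web. A small sketch for finding and creating it, assuming shell access; `backup_dir` and `ensure_backup_dir` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: locate and create the backup folder outside the web root.
# On many cPanel hosts $HOME is /home/<account>, next to public_html.

# backup_dir prints $HOME/backup (fails if HOME is unset).
backup_dir() {
  printf '%s/backup\n' "${HOME:?HOME is not set}"
}

# ensure_backup_dir creates the folder, accessible only by the owner.
ensure_backup_dir() {
  local dir
  dir=$(backup_dir) || return 1
  mkdir -p "$dir" && chmod 700 "$dir"
}
```

Running `echo "$HOME"` after logging in shows the real path; cron normally sets HOME to the same directory for your jobs.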
