XF 2.1 Google reports "soft 404" for attachment URLs

- Thread starter dethfire

jgaulard

Active member

Good insight and that sounds like a reasonable explanation. XF should not use a lightbox for a direct image resource.

I would absolutely love it if Xenforo had an option to turn off the lightbox and simply link directly to any image that's uploaded. That would save me from having to block each and every /attachments/ link with robots.txt.

dethfire

Well-known member

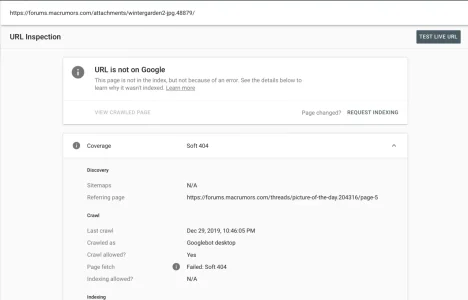

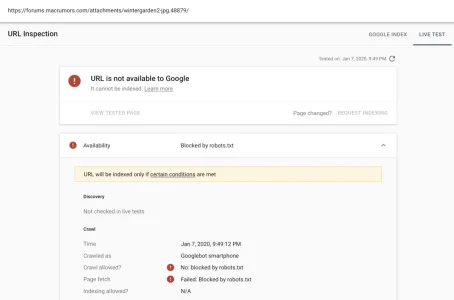

hmmm I don't get that, just a Failed: Soft 404

I don't think the Lightbox explains it. When you use Inspect google for that direct url, it comes back with a Robots block error for me, even though they aren't robots blocked.

arn

Well-known member

hmmm I don't get that, just a Failed: Soft 404

Do the live test button on the top right.

jgaulard

Active member

Good insight and that sounds like a reasonable explanation. XF should not use a lightbox for a direct image resource.

I guess my question for you would be, have all of the Soft 404s had any negative repercussions on your search engine rankings? If it's true that these image "pages" are actually pages, they would certainly be considered thin. According to pretty much everyone, thin pages are bad for rankings. I would be curious to get your perspective on this. Do you suspect that these pages have dampened your search engine position at all?

dethfire

Well-known member

Do the live test button on the top right.

I did and it's fine for me, no blocking

I guess my question for you would be, have all of the Soft 404s had any negative repercussions on your search engine rankings? If it's true that these image "pages" are actually pages, they would certainly be considered thin. According to pretty much everyone, thin pages are bad for rankings. I would be curious to get your perspective on this. Do you suspect that these pages have dampened your search engine position at all?

Good question, hard for me to tell. All I know is Google thinks it's an issue. Seems like a good idea to believe them. I agree they could be considered thin pages. Maybe the question is how to allow Google to crawl and index your image files, but not these lightbox pages. Is there a way?

jgaulard

Active member

Good question, hard for me to tell. All I know is Google thinks it's an issue. Seems like a good idea to believe them. I agree they could be considered thin pages. Maybe the question is how to allow Google to crawl and index your image files, but not these lightbox pages. Is there a way?

If you were to block the /attachments/ directory in robots.txt, that would block Google from crawling the image pages. Since the source of the image file is visible in the page code and since the actual physical images are stored in the /data/attachments/ directory, blocking the /attachments/ directory would have no effect on the indexing of the images themselves. They are two completely different directories. I have the /attachments/ directory blocked on my site and the images are still being indexed by Google. They're actually indexed quite well. They generally rely on the title of the page when someone does a search for something similar.
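As a rough illustration of why the two directories are treated separately, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content assumes the forum is installed at the site root, and the attachment page URL `/attachments/image-jpg.24/` is a made-up example, not a path taken from anyone's site. Python's parser is not Googlebot, but both match `Disallow` rules against the start of the URL path.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a forum installed at the site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /attachments/
"""


def allowed(path: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may crawl `path` under ROBOTS_TXT."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, path)


if __name__ == "__main__":
    # Example paths are assumptions for illustration only.
    for path in ("/attachments/image-jpg.24/", "/data/attachments/24/image.jpg"):
        print(path, "->", "allowed" if allowed(path) else "blocked")
```

The rule only matches paths that begin with `/attachments/`, so a file under `/data/attachments/` is not covered by it. If the forum lives in a subdirectory such as `/forum/`, the rule would need that prefix as well.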

Now, here's the thing: the reason I asked whether your search engine rankings have suffered at all is that it's probably not a great idea to fix something that isn't broken. Although, if these pages have been crawled and indexed since the inception of your website, you would really have no reference point for your rankings. They wouldn't have just "dropped" out of nowhere. They may have never risen to their full potential because of those pages. We simply don't know.

I don't want to be the one to recommend that you go ahead and block the /attachments/ directory, which in turn would cause another section of the Google Search Console to flare up. Instead of having "Soft 404" errors, you'd have "Blocked by robots.txt" errors. Which one is worse is the big question. As I mentioned in a previous post, from my personal experience, I've seen blocked pages drop out of the index after three months, which would be a good thing. So all those "Blocked by robots.txt" errors would eventually disappear. This entire discussion really needs to be fleshed out, as it's actually quite important. I blocked my /attachments/ directory about a month and a half ago as opposed to using the Xenforo permissions to block crawling, so I'll have more input for you in a few months. I can report back and let you know if these image pages drop out of the index at all. I'd hate for you to block 85,000 pages all at once for no reason.

dethfire

Well-known member

Since the source of the image file is visible in the page code and since the actual physical images are stored in the /data/attachments/ directory, blocking the /attachments/ directory would have no effect on the indexing of the images themselves. They are two completely different directories. I have the /attachments/ directory blocked on my site and the images are still being indexed by Google.

I don't understand this because when I copy an image address or look at the src in devtools it's not /data/, it's /attachments/. So how is Google finding this /data/ folder?

I don't want to be the one to recommend that you go ahead and block the /attachments/ directory, which in turn would cause another section of the Google Search Console to flare up. Instead of having "Soft 404" errors, you'd have "Blocked by robots.txt" errors. Which one is worse is the big question. As I mentioned in a previous post, from my personal experience, I've seen blocked pages drop out of the index after three months, which would be a good thing.

Also it would help with crawl budget. Google wouldn't be wasting their time crawling these pages. I either need to block or noindex these lightbox pages, but I can't find anything in templates.

jgaulard

Active member

I don't understand this because when I copy an image address or look at the src in devtools it's not /data/, it's /attachments/. So how is Google finding this /data/ folder?

This depends on which version of the image is showing in a post. If you inspect the thumbnail image, you'll see the /data/attachments/ directory. If the images are showing at full size in the post, you'll only see the /attachments/ directory.
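One way to check which kind of image a post is serving is to pull the `src` values out of the rendered HTML and look at the directory. The sketch below uses only Python's standard `html.parser`. The sample markup and the `/forum/attachments/image-jpg.24/` URL are assumptions for illustration, and the labels are informal names, not XenForo terminology.

```python
from html.parser import HTMLParser


class ImgSrcCollector(HTMLParser):
    """Collect the src attribute of every <img> tag in a document."""

    def __init__(self):
        super().__init__()
        self.sources = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def classify(src: str) -> str:
    """Label an image URL by the directory it is served from."""
    # Check /data/attachments/ first, because it also contains "/attachments/".
    if "/data/attachments/" in src:
        return "thumbnail file"
    if "/attachments/" in src:
        return "attachment route"
    return "other"


def classify_images(html: str):
    """Return (src, label) pairs for every image found in `html`."""
    collector = ImgSrcCollector()
    collector.feed(html)
    return [(src, classify(src)) for src in collector.sources]


if __name__ == "__main__":
    # Hypothetical post markup: one thumbnail and one full-size image.
    sample = (
        '<img src="/forum/data/attachments/24/image.jpg" alt="image.jpg" />'
        '<img src="/forum/attachments/image-jpg.24/" alt="image.jpg" />'
    )
    for src, label in classify_images(sample):
        print(label, src)
```

Running this against the saved HTML of a thread gives a quick count of how many images would be affected by a rule on `/attachments/`.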

Also it would help with crawl budget. Google wouldn't be wasting their time crawling these pages. I either need to block or noindex these lightbox pages, but I can't find anything in templates.

I've actually updated my entire outlook on this topic. Here's what I did to solve everything.

First, I keep as many uploaded images as I can as thumbnails. These are the ones that link to the lightbox. Then, I set the View Attachments, View Member Profiles, View Profile Posts, and View Member Lists permissions to No (in User Group Permissions) for Unregistered/Unconfirmed visitors to the website. This way, when Googlebot tries to access those pages as an unregistered site visitor, it gets a 403 Forbidden response. Those pages will fall out of the index over time.
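To confirm that the permission change took effect, it can help to request an attachment page without any login cookies and look at the status code, which is roughly what a crawler sees. This is a minimal sketch with Python's standard `urllib`. The function names, the user-agent string, and the example URL are all hypothetical, and the set of "denied" codes is an assumption about what counts as hidden from guests.

```python
import urllib.error
import urllib.request


def guest_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code an anonymous, cookie-less client receives."""
    request = urllib.request.Request(
        url, headers={"User-Agent": "guest-status-check"}  # made-up agent name
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx; the code is what we are after.
        return error.code


def denies_guests(status: int) -> bool:
    """True if the status means the page is not served to guests."""
    return status in (401, 403, 404, 410)


if __name__ == "__main__":
    # Replace with a real attachment page URL from your own forum.
    url = "https://example.com/attachments/image-jpg.24/"
    status = guest_status(url)
    print(url, status, "denied" if denies_guests(status) else "served")
```

A 200 here would suggest the guest permission is still set to allow viewing, or that a cache is serving the old page.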

The thing is, I don't want to be internally linking to thousands and thousands of essentially dead pages in my website. To deal with these internal links, I purchased two very awesome add-ons that remove them:

[cXF] Remove attachments link

[cXF] Remove username link

With these add-ons in place and the permissions set the way I have them set, the attachment pages as well as member pages don't exist to the search engine crawlers (they're not linked to anymore). The only thing that needs to happen now is for the pages that have already been seen to fall out of the index over the next few months. Again, I have a warning for you. Having a bunch of pages linked to and then not linked to all of a sudden will make your site traffic fall. It'll come back in spades after a while though, especially if what you removed are thin/junk pages in the eyes of Google.

PS - I don't block any of these pages in robots.txt anymore. I keep them accessible so Google can see the 403 response code and remove the pages from the index.

dethfire

Well-known member

This depends on which version of the image is showing in a post. If you inspect the thumbnail image, you'll see the /data/attachments/ directory. If the images are showing at full size in the post, you'll only see the /attachments/ directory.

This is an issue because the vast majority are full images. I need to try some SQL statements to change all thumbnails to full.

The thing is, I don't want to be internally linking to thousands and thousands of essentially dead pages in my website. To deal with these internal links, I purchased two very awesome add-ons that remove them:

I have done a lot of this manually

With these add-ons in place and the permissions set the way I have them set, the attachment pages as well as member pages don't exist to the search engine crawlers (they're not linked to anymore). The only thing that needs to happen now is for the pages that have already been seen to fall out of the index over the next few months.

Won't this block Google from indexing images too, though? I just need to edit that lightbox framework and add a noindex. It must be in the JavaScript.

jgaulard

Active member

This is an issue because the vast majority are full images. I need to try some SQL statements to change all thumbnails to full.

You'll need to experiment with the code, but this is what you'll ultimately want:

<img src="/forum/data/attachments/24/image.jpg" alt="image.jpg" />

Straight up image reference code that shows the image. Since the images are being referenced from the /data/attachments/ directory, they should be available to the search engines for indexing.

Also, regarding noindex code, I've never seen including that code on a web page have a positive effect on ranking. I know this goes against the grain of the entire SEO community, but what I claim is simply from personal experience. The way I see it is that Google still indexes the page with noindex on it, but doesn't show it in search results. That's it. Perhaps it will benefit someone who's suffering from duplicate content or something, but even that is debatable. The only effective method for increasing/restoring the ranking of a website that's suffering from thin content is to remove the content altogether so it presents a 404 header response, or to completely hide it from the search engines (not even link to it) and allow it to fall out of the index over time.

Internally linking to a page that's blocked by robots.txt is no good. The page still exists and a blocked page is considered a thin page. Linking to a page that needs authorization to access is no good as well. Doing that is akin to linking to a 404 page. Horrible for rankings.

popowich

Active member

I upgraded from 1.5 to 2.2.x about 2 months ago and have had a steady increase in "soft 404" errors as reported by Google Search Console. All of the links listed bring up valid pages; they're not broken or restricted by guest permissions. Is there a resolution for this issue? I've been running vanilla Xenforo with the vBulletin redirects add-on since that time.
