snoopy5

Hi,

I have a couple of different forums. All run XF 1.5.21, all are more than 4 years old, and all have the same setup on the same server. On every one of them I created a sitemap and its cron job in the same way, and submitted the sitemap.php link to Google Search years ago.

But two of them differ dramatically in how well they get indexed by Google Search. They do get indexed, but only around 15-20% of all submitted pages. All the other sites are at something like 98%.

According to Google, the test with the sitemap.php is o.k.

Now I looked into this, and when I type the path to the sitemap.php file into my browser, those 2 "problem kids" are displayed differently from the well-indexed sites. See screenshots.

What could be the reason that they look so different? Is there any setting I got wrong?
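Since the browser view only shows how the response is rendered, it might help to compare what sitemap.php actually returns on a good site and on a bad one. Below is a minimal sketch, assuming Python 3 and only the standard library. `fetch_sitemap` and `summarize_sitemap` are hypothetical helper names I made up for illustration, they are not part of XenForo. It reports whether the body is gzip-compressed, whether the root element is a `<urlset>` or a `<sitemapindex>`, and how many entries it contains.

```python
import gzip
import urllib.request
import xml.etree.ElementTree as ET


def fetch_sitemap(url: str) -> tuple[str, bytes]:
    """Download a sitemap and return (Content-Type header, raw body)."""
    with urllib.request.urlopen(url, timeout=30) as response:
        content_type = response.headers.get("Content-Type", "")
        return content_type, response.read()


def summarize_sitemap(body: bytes) -> dict:
    """Report root element, entry count and compression of a sitemap body."""
    # gzip data always starts with the two magic bytes 0x1f 0x8b.
    compressed = body[:2] == b"\x1f\x8b"
    if compressed:
        body = gzip.decompress(body)
    root = ET.fromstring(body)
    # Drop the XML namespace prefix: "{http://...}urlset" -> "urlset".
    kind = root.tag.rsplit("}", 1)[-1]
    # A <urlset> holds <url> children, a <sitemapindex> holds <sitemap> children.
    return {"kind": kind, "entries": len(root), "gzip": compressed}
```

Calling `summarize_sitemap(fetch_sitemap(url)[1])` for one good and one bad forum, with `url` set to each forum's own sitemap.php address, and comparing the two results together with the returned Content-Type would show whether the difference is in the data itself or only in how the browser displays it.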

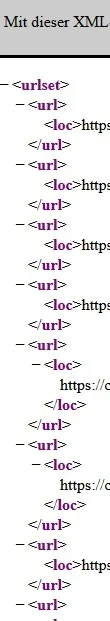

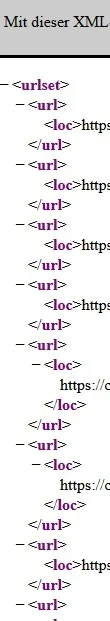

The good ones:

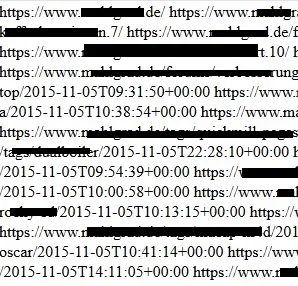

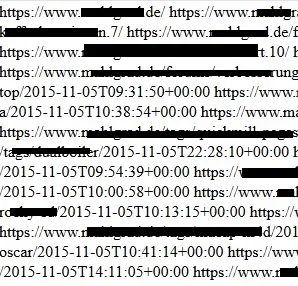

The bad ones:
