You could try this:

Step 1: Open the PAGE_CONTAINER template

• Log in to your XenForo admin control panel.

• Navigate to Appearance > Templates (XenForo style).

• Open the PAGE_CONTAINER template. It is the main container for your site and includes the header, footer, and other global elements on every page.

Step 2: Add the conditional canonical tag

• In the PAGE_CONTAINER template, locate the `<head>` section.

• Add the following code just above the closing `</head>` tag. It outputs XenForo's own canonical URL when one is defined and otherwise falls back to a URL built from the current request:

Code:

    <xf:if is="{$xf.canonicalUrl}">
        <link rel="canonical" href="{$xf.canonicalUrl}" />
    <xf:else />
        <link rel="canonical" href="{$xf.options.boardUrl}{$xf.request.getRequestUri()}" />
    </xf:if>

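• The code first checks whether XenForo has already defined a canonical URL in `{$xf.canonicalUrl}` and, if so, outputs it unchanged.

• The snippet as written does not check `{$page.meta.canonical}`; if you also want to honor a canonical URL defined in the page meta, that requires an extra branch.

• If no canonical URL is defined, the `<xf:else />` branch builds one from the board URL (`{$xf.options.boardUrl}`) followed by the current request URI.

• The same logic applies to XFRM (XenForo Resource Manager) resource pages, since PAGE_CONTAINER wraps them as well.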
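The branch logic of the snippet can be modeled in a few lines of Python to show how the fallback URL is assembled. This is only a sketch: `resolve_canonical` and its parameters are hypothetical stand-ins for `{$xf.canonicalUrl}`, `{$xf.options.boardUrl}` and `{$xf.request.getRequestUri()}`, and it assumes the request URI is passed through as-is, including any query string.

```python
def resolve_canonical(canonical_url, board_url, request_uri):
    """Hypothetical model of the template's <xf:if> / <xf:else /> logic.

    canonical_url stands in for {$xf.canonicalUrl},
    board_url for {$xf.options.boardUrl},
    request_uri for {$xf.request.getRequestUri()} (assumed raw, query string included).
    """
    # <xf:if is="{$xf.canonicalUrl}">: any non-empty value is used unchanged
    if canonical_url:
        return canonical_url
    # <xf:else />: the template simply concatenates the two strings
    return board_url + request_uri


if __name__ == "__main__":
    # XenForo already supplied a canonical URL, so it takes precedence
    print(resolve_canonical("https://www.your-site.com/threads/demo.1/",
                            "https://www.your-site.com", "/threads/demo.1/page-2"))
    # No canonical URL: the fallback keeps the whole request URI
    print(resolve_canonical("", "https://www.your-site.com", "/threads/demo.1/?order=date"))
```

Because the fallback is plain string concatenation, a board URL ending in a slash or a request URI carrying sorting or tracking parameters is copied straight into the canonical tag; whether that matters depends on your URL setup.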

Step 3: Save and verify

• Click Save to apply your edit.

• Test several pages of your site to make sure the canonical URL is present. Open each page's source code in Firefox, or in Google Chrome by adding the following prefix in front of the page URL (before https://www.):

Code:

    view-source:

Example of a source-code URL:

Code:

    view-source:https://www.your-site.com

Search for the line starting with:

Code:

    <link rel="canonical" href=

This line is the canonical tag output by the template code: either XenForo's own canonical URL or the fallback built from the current request.
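Checking the source by hand gets tedious across many pages. The Python sketch below automates the same check using only the standard library: it downloads each URL given on the command line, collects the `href` of every `<link rel="canonical">` tag, and flags pages with no canonical tag or with more than one (which would suggest that both the template code and an add-on are emitting a tag). The script name, user-agent string and status labels are arbitrary choices for illustration.

```python
import sys
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <link ... /> tags also arrive here, because the default
        # handle_startendtag() calls handle_starttag().
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values and a missing value is None
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.hrefs.append(attributes["href"])


def canonical_hrefs(html):
    """Return every canonical href found in an HTML document."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.hrefs


def fetch(url):
    """Download a page and decode it using the charset the server reports."""
    req = Request(url, headers={"User-Agent": "canonical-check/0.1"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


if __name__ == "__main__":
    for url in sys.argv[1:]:
        hrefs = canonical_hrefs(fetch(url))
        if len(hrefs) == 1:
            status = "OK"
        elif not hrefs:
            status = "MISSING"
        else:
            status = "DUPLICATE"
        print(f"{status:9} {url} -> {hrefs}")
```

Run it as, for example, `python check_canonical.py https://www.your-site.com/` followed by any other page URLs you want to test.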

I tested this script myself and it works well: it adds a canonical URL to posts and resources that do not have one, and it does not add one where a canonical URL has already been set manually with an add-on such as [Xen-Soluce] SEO Optimization 2.7.0 Fix 3 or similar.

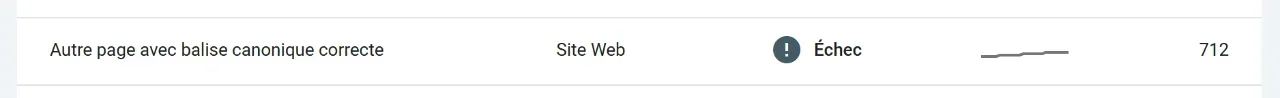

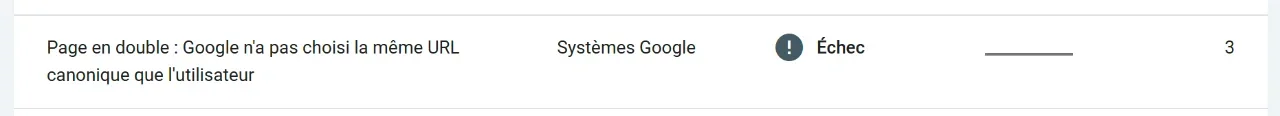

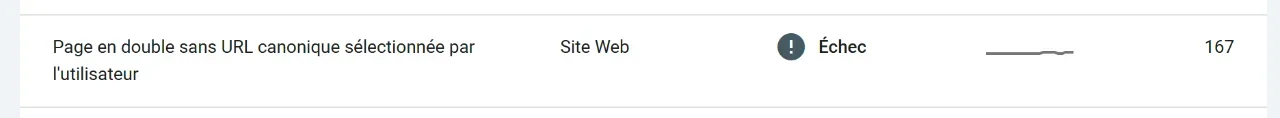

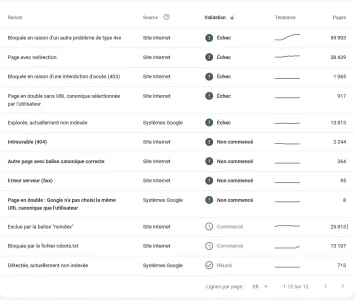

After adding the canonical tag, monitor your site's search performance to confirm that the change has a positive effect. Tools such as Google Search Console let you check which canonical URL Google has selected for each indexed page.