Ok. You disagree with me. So let's run some quick benchmarks and find out who is right.

Test setup: Ubuntu 25.04 with PHP 8.4, Apache and Nginx both on default settings, running on a Dell Micro with a Core i5-14500T.

Nginx is using PHP-FPM, Apache is using mod_php

Hyperthreading is turned off.

I'm using the wrk load-testing tool with 14 threads, one per core since hyperthreading is off.

helloworld.html is a file with the text 'hello world' in it.

helloworld.php contains the same text, 'hello world', but its .php extension forces the request to go through PHP.

I'm testing at 100 and 500 concurrent connections because that is roughly the maximum that 90% or more of XenForo sites will ever see.
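If you want to rerun the same matrix on your own box, here is a rough Python sketch that calls wrk with the same thread and connection counts and pulls out the "Requests/sec:" figure. It assumes wrk is on your PATH and that both files are served at http://localhost/; no `-d` is passed, so wrk's default duration applies.

```python
import re
import subprocess

# Assumed test matrix, mirroring the runs below.
FILES = ["helloworld.php", "helloworld.html"]
CONCURRENCY = [100, 500]
THREADS = 14

def parse_requests_per_sec(output: str) -> float:
    """Extract the 'Requests/sec:' figure from wrk's summary output."""
    match = re.search(r"Requests/sec:\s+([\d.]+)", output)
    if match is None:
        raise ValueError("no Requests/sec line in wrk output")
    return float(match.group(1))

def run_wrk(url: str, connections: int, threads: int = THREADS) -> float:
    """Run one wrk test and return its requests/sec."""
    result = subprocess.run(
        ["wrk", "-t", str(threads), "-c", str(connections), url],
        capture_output=True, text=True, check=True,
    )
    return parse_requests_per_sec(result.stdout)

if __name__ == "__main__":
    for c in CONCURRENCY:
        for name in FILES:
            url = f"http://localhost/{name}"
            print(f"{url} -c {c} -t {THREADS}: {run_wrk(url, c):.2f} req/s")
```

Run it once against each webserver; only the server behind localhost changes between runs.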

Apache, 500 concurrency

`wrk http://localhost/helloworld.php -c 500 -t 14`

Requests/sec: 186048.81

`wrk http://localhost/helloworld.html -c 500 -t 14`

Requests/sec: 212756.80

Nginx, 500 concurrency

`wrk http://localhost/helloworld.php -c 500 -t 14`

Requests/sec: 57139.04

`wrk http://localhost/helloworld.html -c 500 -t 14`

Requests/sec: 419169.29

Apache, 100 concurrency

`wrk http://localhost/helloworld.php -c 100 -t 14`

Requests/sec: 173069.76

`wrk http://localhost/helloworld.html -c 100 -t 14`

Requests/sec: 219708.11

Nginx, 100 concurrency

`wrk http://localhost/helloworld.php -c 100 -t 14`

Requests/sec: 73375.09

`wrk http://localhost/helloworld.html -c 100 -t 14`

Requests/sec: 414821.27

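We can definitely say the overhead of running PHP is smaller with Apache: mod_php handled about 2.4 times as many PHP requests per second as PHP-FPM at 100 connections, and about 3.3 times as many at 500.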

In this test the performance gap is much larger than I initially quoted. In real-world apps I typically see PHP-FPM about 20% slower on a per-request basis.

That indicates that it's not only PHP's initialization that is slower via PHP-FPM, but the rest of the interfacing with the webserver as well.

Why is it so different? Here's my theory.

PHP-FPM: has to manage a pool of worker processes, and communicates with the webserver over a Unix socket using the FastCGI protocol.

mod_php: PHP runs inside the Apache process and lives and dies with it, so it exchanges data with the webserver through in-process calls, a lower level than Unix sockets.

So yeah, PHP-FPM has at least two added sources of overhead (process management and socket communication), so these numbers come as no surprise.
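To get a feel for the socket part of that overhead, here is a rough Python sketch. It is not PHP and not FastCGI; it only compares handing a request to a handler with a direct in-process call (the mod_php shape) against sending it over a Unix socket to a worker and waiting for the reply (the PHP-FPM shape). `handle()` is a hypothetical stand-in for the PHP work, and the worker is a thread rather than a separate process, so treat the absolute numbers as illustrative only.

```python
import socket
import threading
import time

N = 5_000                 # simulated requests per approach
PAYLOAD = b"hello world"  # same body as the benchmark files

def handle(request: bytes) -> bytes:
    """Hypothetical stand-in for the PHP work: echo the request back."""
    return request

def in_process(n: int) -> float:
    """mod_php-style: the 'webserver' calls the handler directly."""
    start = time.perf_counter()
    for _ in range(n):
        handle(PAYLOAD)
    return time.perf_counter() - start

def recv_exact(sock: socket.socket, size: int) -> bytes:
    """Read exactly `size` bytes from a stream socket."""
    buf = b""
    while len(buf) < size:
        chunk = sock.recv(size - len(buf))
        if not chunk:
            raise ConnectionError("socket closed early")
        buf += chunk
    return buf

def worker(conn: socket.socket, n: int) -> None:
    """FPM-style worker: read each request from the socket, write the reply."""
    for _ in range(n):
        conn.sendall(handle(recv_exact(conn, len(PAYLOAD))))

def over_unix_socket(n: int) -> float:
    """PHP-FPM-style: every request crosses a Unix socket and comes back."""
    server_side, worker_side = socket.socketpair()  # AF_UNIX where available
    t = threading.Thread(target=worker, args=(worker_side, n))
    t.start()
    start = time.perf_counter()
    for _ in range(n):
        server_side.sendall(PAYLOAD)
        recv_exact(server_side, len(PAYLOAD))
    elapsed = time.perf_counter() - start
    t.join()
    server_side.close()
    worker_side.close()
    return elapsed

if __name__ == "__main__":
    for label, fn in (("in-process", in_process), ("unix socket", over_unix_socket)):
        print(f"{label:>12}: {fn(N) / N * 1e6:.2f} us per request")
```

The gap between the two lines is the kind of per-request round-trip cost the PHP-FPM path pays and the in-process path avoids; it says nothing about process management, which is the other source of overhead.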

And as we both expected, Nginx is much faster at serving static files: nearly twice Apache's requests per second at both concurrency levels.
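For anyone who wants to check the ratios, here is a quick Python calculation using only the requests/sec figures from the runs above: mod_php vs PHP-FPM for the PHP file, and Nginx vs Apache for the static file.

```python
# Requests/sec as reported in the wrk runs above.
RESULTS = {
    ("apache", 500): {"php": 186048.81, "html": 212756.80},
    ("nginx", 500): {"php": 57139.04, "html": 419169.29},
    ("apache", 100): {"php": 173069.76, "html": 219708.11},
    ("nginx", 100): {"php": 73375.09, "html": 414821.27},
}

def compare(concurrency: int) -> tuple[float, float]:
    """Return (mod_php / PHP-FPM on PHP, Nginx / Apache on static HTML)."""
    apache = RESULTS[("apache", concurrency)]
    nginx = RESULTS[("nginx", concurrency)]
    return apache["php"] / nginx["php"], nginx["html"] / apache["html"]

if __name__ == "__main__":
    for c in (100, 500):
        php, static = compare(c)
        print(f"-c {c}: mod_php/PHP-FPM {php:.2f}x, Nginx/Apache static {static:.2f}x")
```

That works out to 2.36x and 3.26x in Apache's favour for PHP (100 and 500 connections), and 1.89x and 1.97x in Nginx's favour for the static file.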

I'm also not seeing a memory usage spike when running either benchmark, so I can't observe the higher memory usage you're talking about. Maybe the requests are completing too fast.

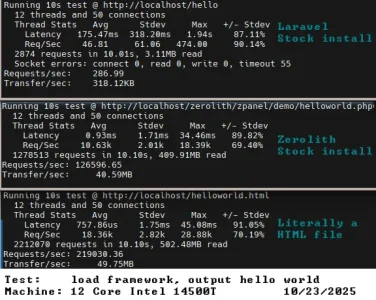

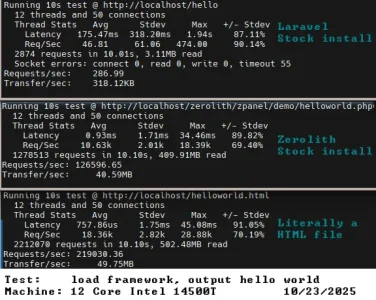
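If the spike is short-lived, a sampler that polls every 100 ms while wrk is running might catch it. Below is a minimal Linux-only sketch that sums the resident memory (VmRSS) of matching processes from /proc and reports the peak over a sampling window. The process names `apache2`, `nginx` and `php-fpm8.4` are assumptions about the Ubuntu packages, so check yours with `ps`. Summing RSS over-counts pages shared between worker processes, so treat the result as an upper bound.

```python
import os
import time

# Assumed Ubuntu process names; verify with `ps -e`.
SERVER_NAMES = {"apache2", "nginx", "php-fpm8.4"}

def total_rss_kib(names: set[str]) -> int:
    """Sum VmRSS (KiB) over processes whose Name in /proc/<pid>/status is in `names`."""
    total = 0
    for pid in os.listdir("/proc"):
        if not pid.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            with open(f"/proc/{pid}/status") as f:
                fields = dict(line.split(":", 1) for line in f if ":" in line)
        except OSError:
            continue  # the process exited while we were scanning
        if fields.get("Name", "").strip() in names and "VmRSS" in fields:
            total += int(fields["VmRSS"].split()[0])  # value looks like "12345 kB"
    return total

def peak_rss_kib(names: set[str], seconds: float, interval: float = 0.1) -> int:
    """Poll total_rss_kib() for `seconds` and return the highest value seen."""
    peak = 0
    deadline = time.monotonic() + seconds
    while time.monotonic() < deadline:
        peak = max(peak, total_rss_kib(names))
        time.sleep(interval)
    return peak

if __name__ == "__main__":
    # Start wrk in another terminal, then run this for the length of the test.
    print(f"peak RSS: {peak_rss_kib(SERVER_NAMES, seconds=15) / 1024:.1f} MiB")
```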

For fun, here is another test that basically boots up a PHP framework and outputs hello world.

Zerolith is our high-performance competitor to Laravel.

Laravel is the most popular PHP framework.

This is to demonstrate that if your application is slow, even if you ran a webserver written in assembly language, it'd still be slow. No webserver can outrun a slow app.