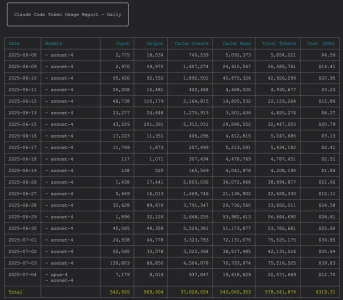

Yes, I resisted for a while, but I kept running into usage limits, especially when combining Claude and Gemini 2.5 via MCP, which increased usage even further. The subscription works out well, though, given its fixed cost versus paying Claude's per-token API pricing. Just look at my ccusage output above for the period before July 4th: it had already paid for itself on Claude Pro ($20/month + GST), and it even came out ahead in value compared to the Claude Max $100/month plan.

Yeah. I'm going to consolidate and review my hosting costs and server deals to see if I can fit the Claude Max budget of $100 + $10 GST. I've also been training Claude Code on XenForo and Centmin Mod LEMP stack data for future projects, tools and features.

I'm also preparing Centmin Mod to handle local server AI/LLM software and tech stacks, both for myself and for users.