frm

Well-known member

All add-ons are disabled.

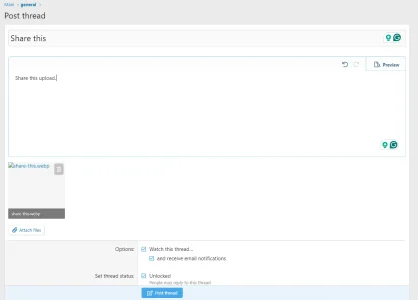

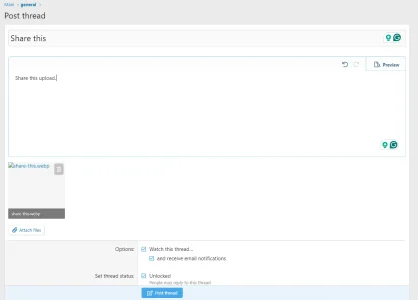

Upload attachment.

No preview for the upload.

Post thread.

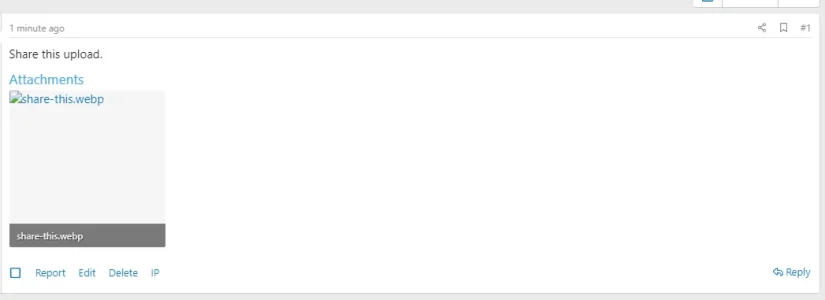

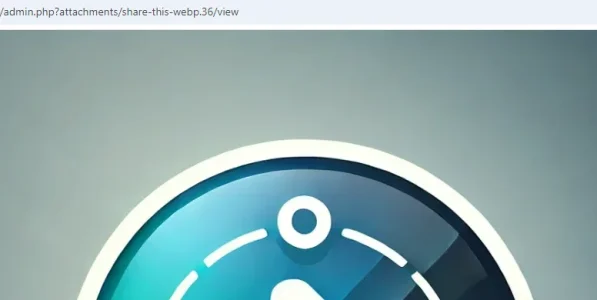

Click on the attachment.

Is this an XF bug, or an object storage problem where Vultr isn't supported?

Config seems to work:

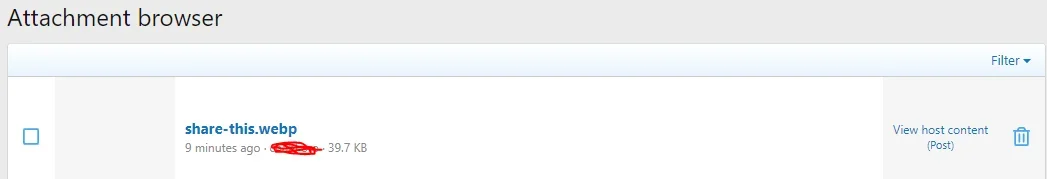

The bucket filled up, but I'm unsure what share-this.webp would be named there, so I can't confirm that it's coming from the bucket and not the server.

But it's not showing on upload or as an attachment.

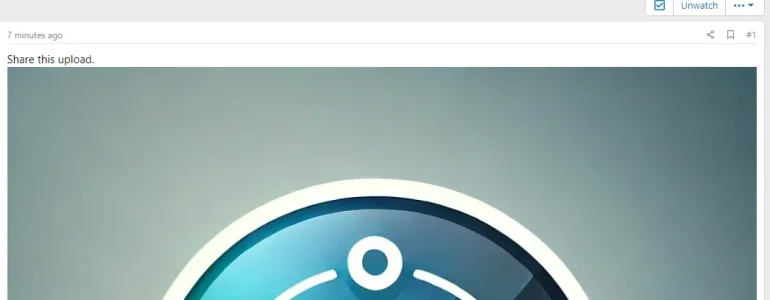

If I edit the post and insert the full image into the post, it shows up.

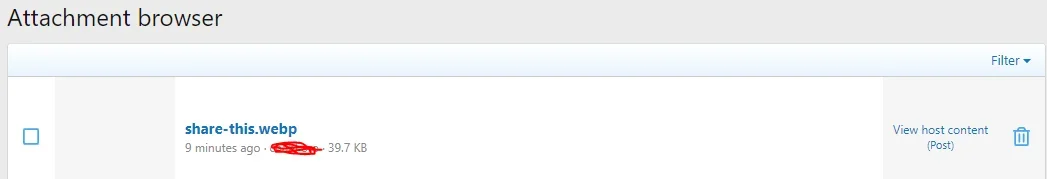

It's also blank in ACP > Content > Attachments.

Except when I click share-this.webp; then the attachment shows up.

Edit: You also can't set avatars. After the filename appears on upload, it disappears and the field says "Choose file" again.

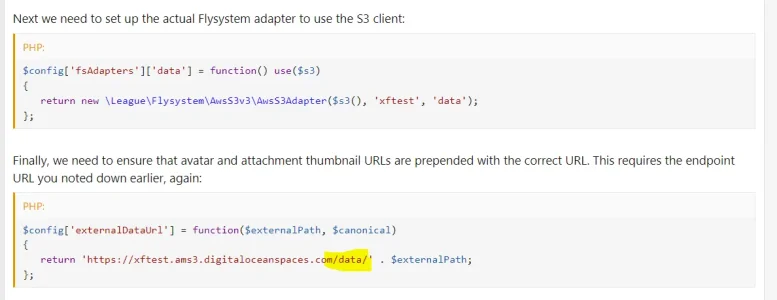

Config seems to work:

```php
$s3 = function()
{
    return new \Aws\S3\S3Client([
        'credentials' => [
            'key' => 'XXX',
            'secret' => 'YYY'
        ],
        'region' => 'ams1',
        'version' => 'latest',
        'endpoint' => 'https://ams1.vultrobjects.com'
    ]);
};

$config['fsAdapters']['data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'dev-XXX', 'data');
};

$config['externalDataUrl'] = function($externalPath, $canonical)
{
    return 'https://XXX.ams1.vultrobjects.com' . $externalPath;
};

$config['fsAdapters']['internal-data'] = function() use($s3)
{
    return new \League\Flysystem\AwsS3v3\AwsS3Adapter($s3(), 'dev-XXX', 'internal_data');
};
```
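Thumbnails and avatars live under the `data` adapter, so their URLs should come from the `externalDataUrl` closure. One quick check is to call that closure by hand and look at the URL it builds. This is only a sketch: it assumes XenForo passes `$externalPath` relative to the data directory with no leading slash, the sample path is made up, and `$withPrefix` is a hypothetical variant for comparison rather than a confirmed fix.

```php
<?php
// The closure exactly as posted in the config above.
$asPosted = function ($externalPath, $canonical) {
    return 'https://XXX.ams1.vultrobjects.com' . $externalPath;
};

// Hypothetical variant: adds the 'data' prefix that the 'data' adapter
// writes under, and guards against a doubled or missing slash.
$withPrefix = function ($externalPath, $canonical) {
    return 'https://XXX.ams1.vultrobjects.com/data/' . ltrim($externalPath, '/');
};

// Made-up sample path (assumption: relative, no leading slash).
$sample = 'attachments/0/1-0123456789abcdef.jpg';

echo $asPosted($sample, false), PHP_EOL;
// https://XXX.ams1.vultrobjects.comattachments/0/1-0123456789abcdef.jpg

echo $withPrefix($sample, false), PHP_EOL;
// https://XXX.ams1.vultrobjects.com/data/attachments/0/1-0123456789abcdef.jpg
```

If the first URL matches what the browser's network tab requests for the missing thumbnail, the closure is the place to look. The full attachment still loading would fit that, assuming it is streamed by XenForo from `internal-data` and never goes through this URL.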
