I just saw a page in Google Search Console that was not indexed. When I inspected it, it showed that Google is trying to index it from two sitemaps.

In our Yoast SEO support ticket today, they said that this can create a problem called "sitemap collision":

"When we say sitemap collision, we simply mean that you avoid using sitemaps that might include the same URLs. In your case, we understood that your sitemaps are different and do not contain same links, therefore, no further actions needed. Having similar sitemaps might confuse Google crawling each time the duplicate links for indexing purposes."

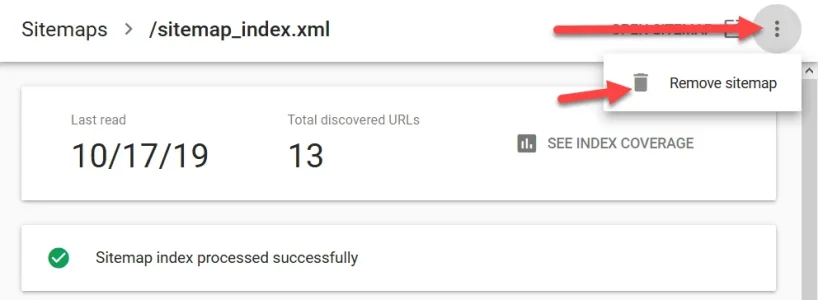

How do we get down to one sitemap?

Thank you.

https://www.xyz.com/community/sitemap.php

https://www.xyz.com/community/sitemap.xml
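
To check whether the two sitemaps above actually list the same URLs (the "collision" Yoast describes), something like the following could be used. This is only a minimal sketch: it assumes both files are plain `urlset` sitemaps in the standard sitemaps.org namespace, and the function names (`extract_locs`, `shared_urls`, `fetch`) are made up for illustration. If either file is a sitemap index, its `<loc>` entries point to child sitemaps rather than pages, and those would need to be fetched and compared as well.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Standard sitemaps.org namespace; assumed to be used by both sitemaps.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def extract_locs(xml_data):
    """Return the set of <loc> values found in one sitemap document."""
    root = ET.fromstring(xml_data)
    locs = set()
    for loc in root.iter(SITEMAP_NS + "loc"):
        if loc.text and loc.text.strip():
            locs.add(loc.text.strip())
    return locs


def shared_urls(xml_a, xml_b):
    """Return the URLs that appear in both sitemap documents."""
    return extract_locs(xml_a) & extract_locs(xml_b)


def fetch(url):
    """Download a sitemap and return its raw bytes."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.read()


# Example usage (requires network access):
# dupes = shared_urls(
#     fetch("https://www.xyz.com/community/sitemap.php"),
#     fetch("https://www.xyz.com/community/sitemap.xml"),
# )
# print(len(dupes), "URLs are listed in both sitemaps")
```

An empty result would match what Yoast said about our sitemaps not containing the same links. A non-empty one would show exactly which pages are listed twice.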
