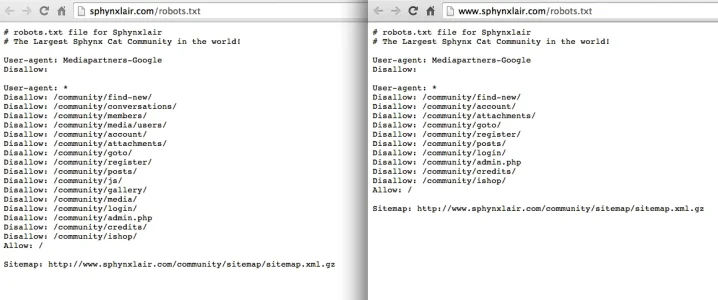

When I visit the URL, it's the same for me. I just found something odd, though. My site's 301 redirect is on point, so how can I be getting two different robots.txt files? On my server there is only one file, "robots.txt". I imagine it's a www vs. non-www issue?


Hard refresh each URL on your side (Ctrl+F5, or Cmd+Shift+R on a Mac) so the browser skips its cached copy.
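If you want to take the browser cache out of the picture entirely, a small script can request robots.txt from each host variant without following redirects and show what each one returns. This is only a rough sketch: the function names (`host_variants`, `digest`, `fetch_robots`, `report`) are made up for illustration, and it assumes the site answers on both the bare and the www hostname.

```python
import hashlib
import http.client


def host_variants(domain):
    """Return the bare and www forms of a hostname, e.g. example.com and www.example.com."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [bare, "www." + bare]


def digest(body):
    """Short fingerprint of a response body, so two files are easy to compare by eye."""
    return hashlib.sha256(body).hexdigest()[:12]


def fetch_robots(scheme, host, timeout=10.0):
    """GET /robots.txt from one host. http.client does not follow redirects,
    so a 301 shows up as a 301 with its Location header."""
    if scheme == "https":
        conn = http.client.HTTPSConnection(host, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(host, timeout=timeout)
    try:
        # Ask intermediate caches for a fresh copy; the User-Agent value is arbitrary.
        conn.request(
            "GET",
            "/robots.txt",
            headers={"Cache-Control": "no-cache", "User-Agent": "robots-check"},
        )
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location"), resp.read()
    finally:
        conn.close()


def report(domain):
    """Print status, redirect target, and body fingerprint for every scheme/host pair."""
    for scheme in ("http", "https"):
        for host in host_variants(domain):
            status, location, body = fetch_robots(scheme, host)
            print(f"{scheme}://{host}/robots.txt  status={status}  "
                  f"location={location}  sha256={digest(body)}")
```

Calling `report("example.com")` with your own domain in place of the placeholder prints one line per variant. If the 301 is set up the way you expect, three of the four lines should show a 301 pointing at the canonical URL, and only the canonical one should return 200. Two variants both returning 200 with different fingerprints would suggest the two hostnames are served from different document roots or a different cache layer.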