tommydamic68

I'm trying to get some ideas of what others are currently using for robots.txt on their XenForo sites.

Here is mine:

Here is mine:

Code:

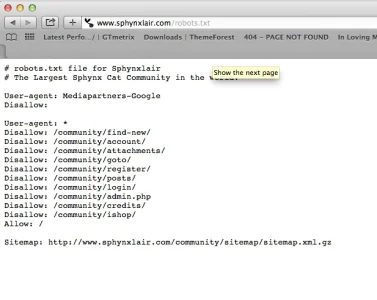

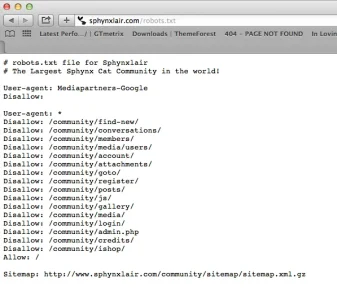

```text
# robots.txt file for Sphynxlair
# The Largest Sphynx Cat Community in the world!

User-agent: Mediapartners-Google*
Disallow:

User-agent: *
Disallow: /community/find-new/
Disallow: /community/account/
Disallow: /community/attachments/
Disallow: /community/goto/
Disallow: /community/register/
Disallow: /community/posts/
Disallow: /community/login/
Disallow: /community/admin.php
Disallow: /community/ajax/
Disallow: /community/misc/contact/
Disallow: /community/data/
Disallow: /community/forums/-/
Disallow: /community/forums/tweets/
Disallow: /community/conversations/
Disallow: /community/events/birthdays/
Disallow: /community/events/monthly/
Disallow: /community/events/weekly/
Disallow: /community/help/
Disallow: /community/internal_data/
Disallow: /community/js/
Disallow: /community/library/
Disallow: /community/search/
Disallow: /community/styles/
Disallow: /community/lost-password/
Disallow: /community/online/
Disallow: /credits/
Allow: /

Sitemap: http://www.sphynxlair.com/community/sitemap/sitemap.xml.gz
```
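
If you want to sanity-check a file like this before uploading it, Python's standard-library `urllib.robotparser` can parse it offline. This is a minimal sketch, assuming Python 3.8+ (needed for `site_maps()`); the crawler name `ExampleBot` and the sample thread path are made up purely for illustration.

```python
import urllib.robotparser

# The rules from the post, embedded as a string so the check runs offline
# (nothing is fetched from the live site).
ROBOTS_TXT = """\
# robots.txt file for Sphynxlair
# The Largest Sphynx Cat Community in the world!

User-agent: Mediapartners-Google*
Disallow:

User-agent: *
Disallow: /community/find-new/
Disallow: /community/account/
Disallow: /community/attachments/
Disallow: /community/goto/
Disallow: /community/register/
Disallow: /community/posts/
Disallow: /community/login/
Disallow: /community/admin.php
Disallow: /community/ajax/
Disallow: /community/misc/contact/
Disallow: /community/data/
Disallow: /community/forums/-/
Disallow: /community/forums/tweets/
Disallow: /community/conversations/
Disallow: /community/events/birthdays/
Disallow: /community/events/monthly/
Disallow: /community/events/weekly/
Disallow: /community/help/
Disallow: /community/internal_data/
Disallow: /community/js/
Disallow: /community/library/
Disallow: /community/search/
Disallow: /community/styles/
Disallow: /community/lost-password/
Disallow: /community/online/
Disallow: /credits/
Allow: /

Sitemap: http://www.sphynxlair.com/community/sitemap/sitemap.xml.gz
"""

SITE = "http://www.sphynxlair.com"
# Hypothetical crawler name: any bot without its own group falls back
# to the "User-agent: *" group.
BOT = "ExampleBot"


def build_parser(text=ROBOTS_TXT):
    """Parse robots.txt text with the standard-library parser."""
    rp = urllib.robotparser.RobotFileParser()
    rp.parse(text.splitlines())
    return rp


def is_allowed(rp, path, agent=BOT):
    """Return True if `agent` may fetch `path` on SITE under the parsed rules."""
    return rp.can_fetch(agent, SITE + path)


if __name__ == "__main__":
    rp = build_parser()
    # Hypothetical sample paths, picked only to exercise a few of the rules.
    for path in ["/", "/community/threads/example.1/",
                 "/community/posts/123/", "/community/admin.php", "/credits/"]:
        print("allow" if is_allowed(rp, path) else "block", path)
    print("sitemaps:", rp.site_maps())  # site_maps() needs Python 3.8+
```

Python's parser is simpler than Google's: it applies the first matching rule in file order and matches `User-agent` values as substrings, so edge cases (such as the `Mediapartners-Google*` group) may not be evaluated the way Google's own crawlers evaluate them. Treat the output as a quick check, not a guarantee of how a particular search engine will behave.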
