craigiri

Well-known member

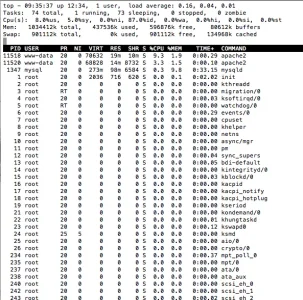

My 1 GB Debian VPS (running XF and WP - a light-traffic site with a small db) crashes once every couple of weeks. It seems arbitrary, as the load, traffic, and CPU use are never heavy. Basically it hangs and shows an incredibly heavy load until mysql is shut down manually and then restarted. But it's not (IMHO) mysql running out of memory - just that the whole system seems to panic and overload.

The OOM message shows that Apache is the process that triggers it:

"apache2 invoked oom-killer"

This happened before I doubled the RAM - and I also use caches for WordPress and flush RAM once in a while. In other words, I never see the system eating up available memory - the error seems to come out of nowhere.
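Since the memory spike is never visible when I look, one way to see what actually happened is to pull the OOM events out of the kernel log after the fact. Below is a rough Python sketch of that idea. It assumes the kernel log lines contain the usual "invoked oom-killer" and "Killed process PID (name)" wording, and the log path is passed on the command line (for example /var/log/kern.log, which may differ on your system). The function name `summarize_oom` is just a name picked for this illustration.

```python
import re
import sys

# Patterns for the two kernel messages of interest. The process that
# *invokes* the OOM killer is often not the one that gets killed.
INVOKED = re.compile(r"(\S+) invoked oom-killer")
KILLED = re.compile(r"Killed process (\d+) \(([^)]+)\)")


def summarize_oom(lines):
    """Return a list of (event, process_name) tuples in log order."""
    events = []
    for line in lines:
        match = INVOKED.search(line)
        if match:
            events.append(("invoked", match.group(1)))
            continue
        match = KILLED.search(line)
        if match:
            events.append(("killed", match.group(2)))
    return events


if __name__ == "__main__":
    # Usage: python oom_summary.py /path/to/kernel.log
    if len(sys.argv) != 2:
        sys.exit("usage: oom_summary.py LOGFILE")
    with open(sys.argv[1], errors="replace") as handle:
        for event, name in summarize_oom(handle):
            print(f"{event}: {name}")
```

If the "invoked" and "killed" names differ (say apache2 invokes it and mysqld is killed), that only says which process asked for memory at the wrong moment, not which one was using the most.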

Looking around the web, I saw some folks mention that the stock Linux memory overcommit settings could be at fault:

http://www.hskupin.info/2010/06/17/how-to-fix-the-oom-killer-crashe-under-linux/comment-page-1/
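For anyone who wants to check what their own box is set to, here is a small Python sketch, not a definitive diagnostic. It reads the `vm.overcommit_memory` mode from /proc/sys/vm/overcommit_memory and compares `CommitLimit` with `Committed_AS` from /proc/meminfo. The helper names (`parse_meminfo`, `commit_headroom_kb`) are made up for this example, and note that the kernel only enforces `CommitLimit` in mode 2.

```python
# Meaning of the three vm.overcommit_memory values.
OVERCOMMIT_MODES = {
    0: "heuristic overcommit (kernel default)",
    1: "always overcommit, never refuse an allocation",
    2: "strict accounting, refuse allocations beyond CommitLimit",
}


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer values."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def commit_headroom_kb(meminfo):
    """CommitLimit minus Committed_AS in kB; negative means overcommitted."""
    return meminfo["CommitLimit"] - meminfo["Committed_AS"]


if __name__ == "__main__":
    with open("/proc/sys/vm/overcommit_memory") as handle:
        mode = int(handle.read().strip())
    with open("/proc/meminfo") as handle:
        info = parse_meminfo(handle.read())
    print("overcommit mode:", mode, "-", OVERCOMMIT_MODES.get(mode, "unknown"))
    print("commit headroom (kB):", commit_headroom_kb(info))
```

A negative headroom in mode 0 or 1 just means processes have been promised more memory than the limit would allow under strict accounting. It is not an error by itself, but it is the situation in which the OOM killer can fire if those promises are all called in at once.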

Anyone else have experience with this syndrome? It seems that if it were default behavior in Linux, it would be happening to lots of people!
