Chris D submitted a new resource:

Using DigitalOcean Spaces or Amazon S3 for file storage in XF 2.x - The same concepts can be applied to other adapters too.

Why this guide?

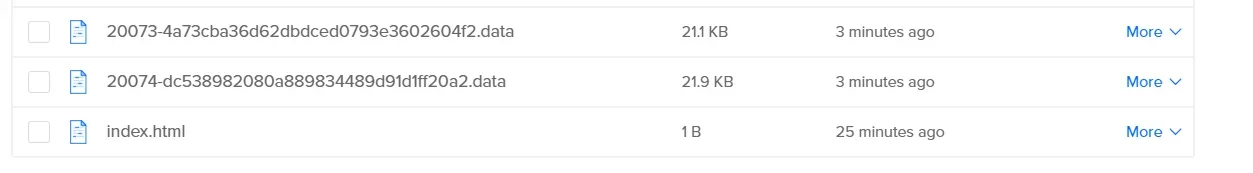

Since XenForo 2.0.0 we have supported remote file storage using Flysystem, a file system abstraction library. It is called an abstracted file system because it adds a layer of abstraction between the code and the underlying file system. In practice, it provides a consistent API for performing file system operations, so that whether the file system is a local disk-based file system or a distributed and remotely accessible...

Read more about this resource...