
UK Online Safety Regulations and impact on Forums

- Thread starter: lazy llama

- Tags: regulation

eva2000

Well-known member

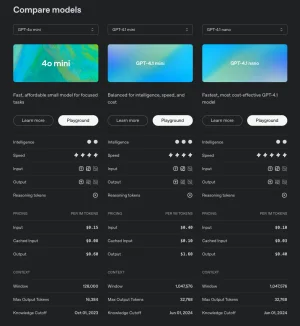

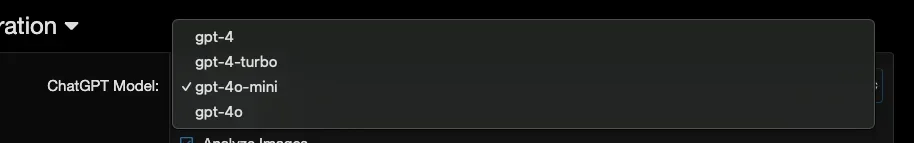

> I've also asked if the new 4.1 models can be included, as 4.1 nano is even cheaper:

Which model is being used right now?

MattW

Well-known member

> If you want an off-the-shelf solution
>
> is probably easiest
>
> Ask to user confirmation before open link
>
> - truonglv
> - confirmation link, link checker, link confirmation
> - Add-ons [2.x]

Thank you

I've yet to find time to sit and read everything, but are you not allowed to operate a site that isn't low risk? Do all sites have to actually be low or negligible risk?

> I've also asked if the new 4.1 models can be included, as 4.1 nano is even cheaper:

But maybe less intelligent than the 4.1 mini? (Looking at the description.) And 4o mini is cheaper than the 4.1 mini.


MattW

Well-known member

> But maybe less intelligent than the 4.1 mini? (Looking at the description) And 4o mini is cheaper than the 4.1 mini.

4.1 nano should be about the same as 4o mini, but cheaper and faster.

> From what I can see, it seems liability stops with the video embedded on your website. I would just moderate all videos posted and not worry about what might happen if a user follows it.
>
> Embedding a YouTube video means you're sharing content from another platform. If the embedded video is safe and you don't control subsequent content (like recommended videos), your direct liability is limited.
>
> What I would do is vet every submitted video, maybe automatically moderate things with YouTube embeds and then, if that video is safe, I would use JavaScript to interrupt when a user leaves my domain with a warning: "You are now leaving the hamster forum, please be safe, any content found from here on is not under our direct control".
>
> Or something like that.

You've raised a point there: after an embedded YouTube video finishes playing, it does indeed come up with recommended further videos to watch, encouraging people to click on them (or potentially showing unsuitable ones). The warning is a good idea and this addon has been linked by @chillibear.
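The leaving-the-site warning described above comes down to one decision: does a given link point off the forum's own domain? The post proposes doing the interruption in JavaScript; the sketch below only illustrates the host comparison behind it, written in Python for brevity. The domain `forum.example.com` and the function name `is_external` are assumptions for illustration, not part of XenForo or of any add-on mentioned in this thread.

```python
from urllib.parse import urlparse

SITE_HOST = "forum.example.com"  # hypothetical forum domain


def is_external(url: str, site_host: str = SITE_HOST) -> bool:
    """Return True when following `url` would take the reader off `site_host`."""
    host = urlparse(url).hostname
    if host is None:
        # Relative links such as "/threads/123" have no host and stay on the site.
        return False
    host = host.lower()
    site_host = site_host.lower()
    # Treat the site itself and its subdomains as internal.
    return not (host == site_host or host.endswith("." + site_host))


if __name__ == "__main__":
    for link in ("https://www.youtube.com/watch?v=abc", "/threads/123"):
        action = "show leaving-site warning" if is_external(link) else "follow directly"
        print(link, "->", action)
```

A check like this cannot see what YouTube recommends once an embedded video ends; it only covers clicks on links that the forum itself renders.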

As my site would apparently be medium risk for CSAM hyperlinks (unless it had hyperlink scanning), this section applies. What exactly does it mean by "unless it is currently not technically feasible to achieve this outcome"? Does it mean you must have enough moderation in place to take something down swiftly, but if you can't then you don't have to?! Seems a bit noddy.

"Having a content moderation function that allows for the swift take down of illegal content

ICU C2

This measure sets out that providers should have systems and processes designed to swiftly take down illegal content and/or illegal content proxy of which the provider is aware (part of its ‘content moderation function’), unless it is currently not technically feasible to achieve this outcome."

sport_billy

Member

How have you worked it out as 'medium risk', may I ask? This is my issue with these risk statements: they become a subjective judgement call by the owner doing the risk assessment...

If you have CSAM scanning and send all links to manual approval, for instance, and have no history (thank goodness) of that content ever being posted, then surely the risk would be lower than medium.

Nothing can ever be risk free, for sure. But it is a judgement call, isn’t it?

I wish Ofcom would give clearer guidance, e.g.

CSAM scanning on site

Report system in use with strong modding policy = Medium risk.

So there is a benchmark to gauge our efforts against.

Some examples of risk assessments would be useful, but they seem to wrap all guidance up in repetitive jargon, so unless you are legally savvy, most volunteer-run forums will all just be doing their own thing and hoping for the best.

I would expect no two risk assessments to even follow the same format.

I also noticed the children’s code of practice is still the draft copy on Ofcom’s website https://www.ofcom.org.uk/siteassets...ctice-for-user-to-user-services-.pdf?v=395674 . So I presume site owners need to refer to that and the illegal content codes of practice to find out the codes for their mitigation measures https://www.ofcom.org.uk/siteassets...for-user-to-user-services-24-feb.pdf?v=391889 until the final version is available. It would have been helpful to have all final guidance in place, along with the online toolkit.

The way it comes up as medium risk is if it's a site that's accessible to children. This is from my notes, taken from the guidance that came up on my digital toolkit thing:

"It says you can only be low risk if there are comprehensive systems and processes in place that ensure that CSAM URLs are very unlikely to be shared, e.g. automated URL detection, or manual systems and processes that can be used to check ALL text or hyperlinks shared."

So it is medium risk UNLESS you can check every single hyperlink. So that to me means either sending every post with a link in for manual moderation (which would make use of the forum not so nice for people and a lot of moderation work), or have some software that scans all hyperlinks. Then it could be called low risk.

However, if you don't, it's then medium risk. But that doesn't seem to make any difference to the recommended measures that the toolkit suggests at the end. It just means you've assessed as medium risk (which in itself sounds like a finger wagging to do something about it).

If there were no child users (ie no possibility of child users) then it could come under low risk via the other criteria mentioned.

However I am looking into development of an addon that does scan all hyperlinks (by someone else). As ever though, these things cost.

I completely agree we need the children's risk toolkit!

From what I've read so far though, it just seems to double down on the basic risk assessment if you've already accepted there may be child users. But it's possible the recommended mitigations are more.

The bit I am stuck on is YouTube videos. Even if all links are scanned for illegal harms, that doesn't assess the video content. Historically that's never been an issue: they are perfectly safe videos, and I guess I'd just have to rely on user reports for that. But a further issue is that they link off site (discussed earlier), and even if a video is just watched on site as an embed, at the end it comes up with suggested videos from YouTube. Likewise with links to Instagram posts. I don't really want to block all those. They wouldn't be harmful URLs, but I'm wondering if that will come up under a child risk assessment.

In an ideal world I'd have age verification software (even if for under-18s) and also scanning of hyperlinks, to just get on with life! But there are significant costs involved. My preference is the email age check from verifymy: not obtrusive, won't put people off. Although if 25% fail on that, they'd be asked to scan ID, and would probably give up and go. But with a £2000 set-up fee, that is just way over anything a non-profit could usually spend.

Edit - plus the cost of programming API integration.


Another query I have: can short URLs be blocked or sent for manual moderation, separately from other URLs?
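As a rough illustration of what treating short URLs separately could mean, the sketch below picks out links whose host is a known shortener and routes the post differently from posts with ordinary links. It is plain Python, not a XenForo feature or add-on API. The shortener list, the `policy` values and the function names are all assumptions made for the example.

```python
import re
from urllib.parse import urlparse

# Assumed, non-exhaustive list of URL shortener hosts.
SHORTENER_HOSTS = {"bit.ly", "tinyurl.com", "t.co", "goo.gl", "is.gd"}

# Deliberately simple pattern: an http(s) URL runs until whitespace or a quote.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+", re.IGNORECASE)


def shortener_links(text, shorteners=SHORTENER_HOSTS):
    """Return the links in `text` whose host is a known URL shortener."""
    found = []
    for url in URL_PATTERN.findall(text):
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[4:]
        if host in shorteners:
            found.append(url)
    return found


def route_post(text, policy="moderate"):
    """Return `policy` ("moderate" or "block") if the post has a short URL, else "publish"."""
    return policy if shortener_links(text) else "publish"


if __name__ == "__main__":
    print(route_post("Look at https://bit.ly/abc123"))
    print(route_post("Look at https://www.youtube.com/watch?v=x"))
```

A fixed list like this only catches shorteners someone has thought to add, so it would need maintaining over time.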

And also - is it possible for ANY report to send a post for manual moderation? Eg if a member reports a post and a moderator doesn't see it for a while. So that if the report button is pressed, the post automatically goes for manual moderation? Although I guess that makes all users semi moderators .......


chillibear

Well-known member

> Eg if a member reports a post and a moderator doesn't see it for a while. So that if the report button is pressed, the post automatically goes for manual moderation?

You'll probably want to look at

Allow your userbase to moderate messages by reporting them.

- Xon

- moderation

- Add-ons [2.x]

Mr Lucky

Well-known member

This is very good, it will allow your risk assessment to say you have a lot of moderation in many time zones.

Thanks very much. So depending on how you set it up, any user with a certain number of points can send a report and it'll automatically go for manual moderation (ie be removed from the thread), is that right? It sounds very good.

The only issue would be that it was so incredibly rare for anything to need reporting that people will forget how to do it. Unless it happens automatically when they report something.

I've also set up emailing staff if a report is made, and set a VIP sound alert for email from myself on the forum, so no need for a text as long as it makes a beep. Just can't find the right kind of beep.

Honestly, you're a star finding solutions to everything.

chillibear

Well-known member

> Thanks very much. So depending on how you set it up, any user with a certain number of points can send a report and it'll automatically go for manual moderation (ie be removed from the thread), is that right? It sounds very good.

In essence yes. You can refine how many "points" certain groups contribute to the moderation threshold, so you can weight your older, more trusted users if you like.

> This is very good, it will allow your risk assessment to say you have a lot of moderation in many time zones.

In essence this is why I use it. Whilst on the larger forum I am involved with we do have moderators around the world, it just serves as an extra layer, so that something bad enough to be reported several times is at least pulled until someone can take a look. To be honest it's only tripped a few times so far, and that's generally been from two moderators reporting something before checking to see if it'd already been reported! Still seemed like a sensible investment.

> The only issue would be that it was so incredibly rare for anything to need reporting that people will forget how to do it. Unless it happens automatically when they report something.

Sorry, not sure what you mean here. There isn't anything extra for the users to do: they just report a post as normal and go on their merry way.

Ah thank you. So it's just like a normal report then and the post disappears and goes for manual moderation then. I'd rather it did that than soft delete.

chillibear

Well-known member

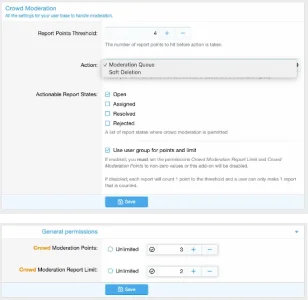

You can choose either moderation or soft-delete. I'd love a more advanced system, but then again there are a few changes I'd make to reports. Here are the options for Crowd Moderation:

So in my case the permissions shown are for users that have been on the site a good long time (probably 15+ years, I forget), so if they shout I pay attention and even allow them to "shout" twice (ie if they report a post twice), which would actually be enough to have something pulled (2 x 3 points = 6, which is over the 4 point threshold). Younger users are a little less heavily weighted.


Ok, so you set individual users a number of points in permissions? So if I set 4 for a group of trusted users, it would just automatically go straight to manual reports, is that right? And the report limit is to stop a rogue member reporting everything on the site? Surely a post only needs reporting once to go for manual moderation? Or is the 2 the number of times they can report something in a 24 hour period or something?

chillibear

Well-known member

> Ok so you set individual users a number of points in permissions? So if I set 4 for a group of trusted users, it would just automatically go straight to manual reports, is that right?

Yes. If you had the same threshold (4) and a user group where the Moderation Points value was 4, then a member of that group would only need to report a post once for it to go into moderation.

> And the report limit is to stop a rogue member reporting everything in site? ...[SNIP]... Or is the 2 the number of times they can report something in a 24 hour period or something?

Yes. Users can potentially report a post as many times as they like in XenForo. So to prevent abuse of this add-on you could have general users in the registered group set to Moderation Points = 1 and Report Limit = 1. Then a newbie can only ever contribute 1 point towards the threshold, even if they reported the same post 20 times (over any time period).

> Surely a post only needs reporting once to go for manual moderation?

Totally dependent on the threshold being met. So take the newbie example above. If four (or more) newbies reported the same post then it would get pulled into moderation. If only two newbies reported it then it would remain live (but reported).
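The points arithmetic described above can be sketched as a small model. This is only an illustration of the rules as explained in this thread, not the add-on's actual code. The class and function names and the group names are hypothetical. The sketch assumes each user's reports on a post are capped at their group's Report Limit, and that a post is pulled once the total reaches the threshold (the "four newbies" example suggests reaching it is enough).

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupSettings:
    moderation_points: int  # points each counted report contributes
    report_limit: int       # how many reports per user, per post, are counted


# Hypothetical groups using the values mentioned in the thread.
SETTINGS = {
    "newbie": GroupSettings(moderation_points=1, report_limit=1),
    "longtime": GroupSettings(moderation_points=3, report_limit=2),
    "trusted": GroupSettings(moderation_points=4, report_limit=1),
}


def total_points(reports, settings=SETTINGS):
    """Sum points for one post; `reports` holds one (user_id, group) pair per report."""
    per_user = Counter(reports)
    return sum(
        min(count, settings[group].report_limit) * settings[group].moderation_points
        for (_user, group), count in per_user.items()
    )


def should_moderate(reports, settings=SETTINGS, threshold=4):
    """True when the counted points reach the moderation threshold."""
    return total_points(reports, settings) >= threshold


if __name__ == "__main__":
    spam_reports = [("n1", "newbie")] * 20       # one newbie reporting 20 times
    print(total_points(spam_reports))            # capped by the report limit
    print(should_moderate([("old1", "longtime")] * 2))
```

Under these assumptions a single newbie can never pull a post alone, two reports from a long-standing member (2 x 3 points) can, and one report from a group worth 4 points meets the threshold immediately.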