Erik P.

Member

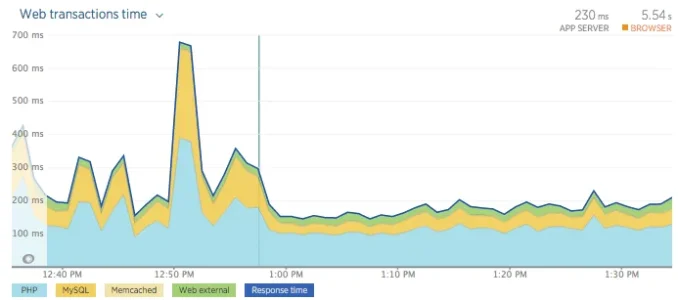

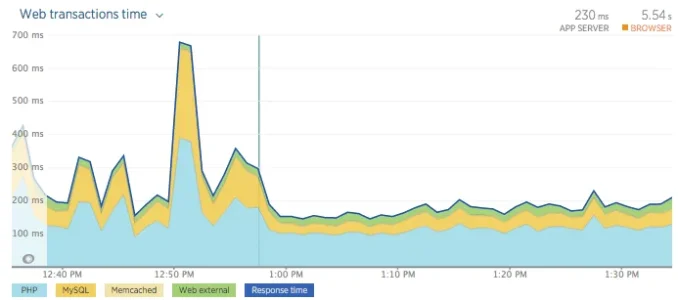

The use of TRUNCATE TABLE in XenForo_Model_Thread::updateThreadViews is causing our Galera cluster to seize up, leading to poor performance. I replaced 'TRUNCATE TABLE xf_thread_view' with 'DELETE FROM xf_thread_view' and attached a graph of the results. The point where my change was deployed is visible on the graph. That replacement is the only change I made.

and a few moments later...

TRUNCATE TABLE takes a table lock, which stalls the cluster. DELETE FROM locks only the rows it's deleting and doesn't block new rows from being inserted at the same time. I recommend removing all uses of TRUNCATE TABLE so XenForo scales better in the future.
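
For reference, here is a minimal sketch of the substitution described above. Only the table name xf_thread_view and the two statements come from this post. The rest of updateThreadViews is not shown, and the comments reflect default Galera behavior as I understand it, not anything measured here.

```sql
-- Before: TRUNCATE is DDL. With Galera's default schema-change method
-- (total order isolation), it is applied cluster-wide and other writes
-- wait until it completes.
TRUNCATE TABLE xf_thread_view;

-- After: an ordinary DML statement, replicated like any other
-- transaction, so concurrent inserts into the table are not held up.
DELETE FROM xf_thread_view;
```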

Also, SELECT ... FOR UPDATE causes a table lock, leading to a cascading failure when many people try to vote on a poll at once. That should also be refactored to avoid the lock.

Cheers.
