klamm

Member

We successfully upgraded from VB 3.8.x to XF 2.2.

Only problem we still cannot solve:

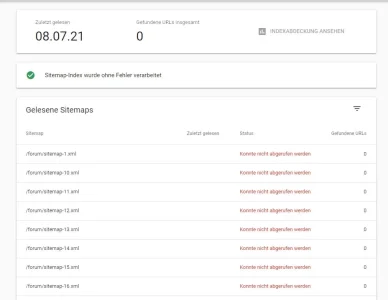

The generated sitemap, located at https://www.klamm.de/forum/sitemap.xml, is reported as "not readable" by Google.

At least that's what Search Console says.

The sitemap is delivered and accessible correctly.

Do you have any ideas what may cause the problem?
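
For anyone who wants to reproduce the "delivered and accessible" check outside a browser, here is a minimal sketch in Python. It is only an illustration, not what XenForo or Google actually run: the helper names `fetch` and `inspect_sitemap` and the User-Agent string are my own assumptions. It fetches a URL, then checks that the body parses as XML and uses the sitemaps.org namespace.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol (version 0.9).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def fetch(url, user_agent="Mozilla/5.0 (compatible; sitemap-check)"):
    """Hypothetical helper: return (status, content_type, body) for a URL.

    The User-Agent value is an arbitrary placeholder, not Googlebot's.
    """
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.status, resp.headers.get("Content-Type"), resp.read()


def inspect_sitemap(xml_bytes):
    """Parse sitemap XML and summarize what it contains.

    Raises xml.etree.ElementTree.ParseError if the body is not well-formed XML.
    """
    root = ET.fromstring(xml_bytes)
    # A namespaced root tag looks like "{namespace}urlset" or "{namespace}sitemapindex".
    namespace, _, kind = root.tag.rpartition("}")
    namespace = namespace.lstrip("{")
    # Collect every <loc> entry (page URLs or child sitemap URLs).
    locs = [el.text.strip() for el in root.iter(f"{{{SITEMAP_NS}}}loc") if el.text]
    return {"kind": kind, "namespace_ok": namespace == SITEMAP_NS, "locs": locs}


# Example usage (performs a network request, so it is left commented out):
# status, content_type, body = fetch("https://www.klamm.de/forum/sitemap.xml")
# print(status, content_type, inspect_sitemap(body)["kind"])
```

If this reports a 200 status, an XML content type and a `urlset` or `sitemapindex` root, the file itself is probably fine and the cause is more likely something specific to how Google's crawler is served (for example a redirect, a firewall rule or robots.txt).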
