As far as I know, there isn't any difference.

I don't see any difference in contents between https://xenforo.com/community/sitemap.xml and https://xenforo.com/community/sitemap.php.


Optimizing Google Crawl Budget

- Thread starter Alfuzzy


Mouth

Well-known member

And thus my intrigue

There’s not.

Ozzy47

Well-known member

See, https://xenforo.com/community/threads/sitemap.141412/#post-1225114

And thus my intrigue

I was working on my robots.txt today...specifically the Sitemap statement. XF is installed in the server's root directory. There's a sitemap.php file in the root directory...but it's dated from about 3 months ago. I'm guessing this is not the correct sitemap file...maybe an artifact of some sort from when the site was migrated from vBulletin to XF a couple of months ago.

I then did a server search for "sitemap"...and I see the most recent sitemaps seem to be in the directory:

public_html/internal_data/sitemaps/

In this directory there are 6 files:

- sitemap-2000345678-1.xml.gz
- sitemap-2000345678-2.xml.gz
- sitemap-2000345678-3.xml.gz
- sitemap-2000345678-4.xml.gz
- sitemap-2000345678-5.xml.gz
- sitemap-2000345678-6.xml.gz

The dates of these files are from yesterday (9-16-20)...which matches what I'm seeing in the AdminCP.

Based on info mentioned earlier in this thread...I thought my robots.txt file sitemap statement would be:

Sitemap: https://www.example.com/sitemap.xml

Based on the info above...is this still true...or should the robots.txt sitemap statement be something different?

Thanks


Mouth

Well-known member

Either sitemap.xml or sitemap.php; both produce identical contents.

XF, whilst suggesting the latter in the ACP, also recommends using the former.
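If you want to confirm that a robots.txt actually declares the sitemap the way the answer above describes, Python's standard-library `urllib.robotparser` collects `Sitemap:` directives (its `site_maps()` method exists since Python 3.8). A minimal sketch, assuming a robots.txt that allows everything and contains just that one `Sitemap` line; `www.example.com` is a placeholder domain.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt body; substitute your own domain for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in a robots.txt body (no network access)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap directive.
    return parser.site_maps() or []


if __name__ == "__main__":
    for url in declared_sitemaps(ROBOTS_TXT):
        print(url)
```

The `Sitemap` directive is independent of any `User-agent` group, so it can sit anywhere in the file; the parser picks it up regardless of position.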

Are you guys saying that although the actual sitemap files are in the directory:

public_html/internal_data/sitemaps/

...that the robots.txt sitemap statement of:

Sitemap: https://www.example.com/sitemap.xml

Will get the job done?

Thanks

p.s. Obviously "example.com" will be replaced with the actual URL.


Mouth

Well-known member

Yes. Load your site's sitemap URL in your browser and you'll see its contents, how it's date/time-stamped appropriately, and, depending on the size of your site, whether it's split into multiple files.
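Checking in the browser is the easy route. For a scripted look, the sitemaps.org protocol says a sitemap index lists each child sitemap in a `<sitemap>` element with a required `<loc>` and an optional `<lastmod>`, so Python's standard `xml.etree.ElementTree` can pull those out. A minimal sketch, assuming the URL returns a `<sitemapindex>` (a plain `<urlset>` simply yields an empty list); the sample XML and its child URLs are made up for illustration, not copied from a real XenForo site.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap index; the child URLs are placeholders.
SAMPLE_INDEX = """<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://www.example.com/sitemap-1.xml</loc>
    <lastmod>2020-09-16T08:00:00+00:00</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://www.example.com/sitemap-2.xml</loc>
  </sitemap>
</sitemapindex>
"""


def child_sitemaps(index_xml: str) -> list[tuple[str, Optional[str]]]:
    """Return (loc, lastmod) pairs from a sitemaps.org <sitemapindex> document."""
    root = ET.fromstring(index_xml)
    children = []
    for entry in root.findall("sm:sitemap", NS):
        loc = entry.findtext("sm:loc", default="", namespaces=NS).strip()
        lastmod = entry.findtext("sm:lastmod", namespaces=NS)
        # lastmod is optional in the protocol, so keep None when it is absent.
        children.append((loc, lastmod.strip() if lastmod else None))
    return children


if __name__ == "__main__":
    for loc, lastmod in child_sitemaps(SAMPLE_INDEX):
        print(loc, lastmod or "(no lastmod)")
```

To check a live site instead of the sample, one option is to pass in `urllib.request.urlopen("https://www.example.com/sitemap.xml").read().decode("utf-8")` with your own domain in place of the placeholder.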

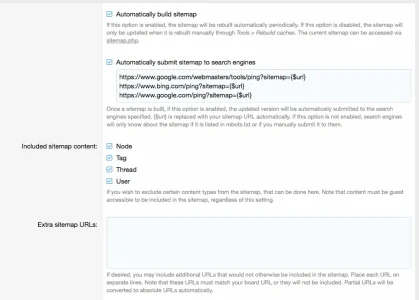

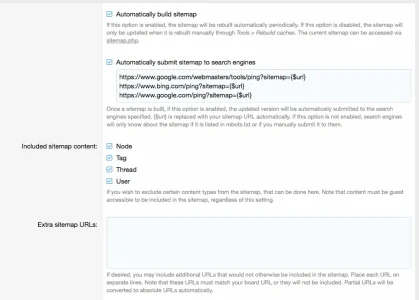

Excellent. One more quick question regarding this sitemap stuff. Here's a screenshot of my XF AdminCP sitemap settings.

Any suggestions regarding what could be added...changed...deleted? My main goal is to optimize my Google crawl budget...and not have anything there that's wasted effort/wasted resources. Thanks

2nd question...Does it affect/hurt the Google crawl budget if more than one sitemap is submitted?


Excellent...thanks DJ.

I don't think it hurts anything.

However, uncheck Tag and User in that list. All you need are Nodes and Threads. The rest is just noise.

I thought based on what you recommended earlier in the thread...that unchecking tag & user might be the way to go.

Mr Lucky

Well-known member

I'm trying to optimize my site's Google crawl budget. My understanding is...items can be added to a site's robots.txt file to prevent Googlebot from crawling/indexing unnecessary items...and thus better utilize the Google crawl budget for the important stuff.
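To see which URLs a set of robots.txt rules would tell Googlebot not to crawl, Python's `urllib.robotparser` can evaluate them offline. A minimal sketch; the `/members/` and `/search/` paths are hypothetical examples of low-value pages, not a recommendation for any particular XenForo install. Two caveats: this parser uses plain prefix matching in file order, whereas Google applies the most specific (longest) matching rule and supports `*` and `$` wildcards, so treat it as a rough check; and a `Disallow` rule only stops crawling, it does not guarantee a URL stays out of the index.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; the disallowed paths are placeholders for low-value pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /members/
Disallow: /search/

Sitemap: https://www.example.com/sitemap.xml
"""

# Placeholder URLs to test against the rules.
CANDIDATES = [
    "https://www.example.com/threads/some-thread.123/",
    "https://www.example.com/members/someone.42/",
    "https://www.example.com/search/?q=sitemap",
]


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the subset of urls that the rules tell `agent` not to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse locally, no network fetch
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, CANDIDATES):
        print("blocked:", url)
```

Since there is no `Googlebot`-specific group here, the parser falls back to the `User-agent: *` group, which is also how Google treats a file without a group naming it.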

What exactly is the crawl budget?

NB: my understanding is that robots.txt, while it stops Google from crawling a page, doesn't stop it from being indexed.

Google can't index pages it doesn't know about. Crawling is how Googlebot finds your pages. Since crawling is limited, you don't want Googlebot wasting time crawling (or trying to crawl) pages you don't want indexed.

searchengineland.com


yoast.com


Google explains what "crawl budget" means for webmasters

Google says crawl demand and crawl rate make up GoogleBot's crawl budget for your website.

How to optimize your crawl budget

Crawl budget optimization can help you out if Google doesn't crawl enough pages on your site. Learn whether and how you should do this.

Google doesn’t always spider every page on a site instantly. In fact, sometimes, it can take weeks. This might get in the way of your SEO efforts. Your newly optimized landing page might not get indexed. At that point, it’s time to optimize your crawl budget. We’ll discuss what a ‘crawl budget’ is and what you can do to optimize it in this article.

Crawl budget is the number of pages Google will crawl on your site on any given day. This number varies slightly from day to day, but overall, it’s relatively stable. Google might crawl 6 pages on your site each day, it might crawl 5,000 pages, it might even crawl 4,000,000 pages every single day. The number of pages Google crawls, your ‘budget’, is generally determined by the size of your site, the ‘health’ of your site (how many errors Google encounters) and the number of links to your site.
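The arithmetic behind the earlier advice to drop Tag and User URLs from the sitemap is simple division: at a roughly stable crawl rate, the time needed to get through a set of URLs scales with how many there are. A back-of-the-envelope sketch; the URL counts are made up, the 5,000 pages/day rate just echoes one of the example figures quoted above, real crawling is neither uniform nor limited to sitemap URLs, and removing a type from the sitemap does not by itself stop Google from finding those pages through links.

```python
import math

# Hypothetical sitemap composition for a mid-sized forum (made-up numbers).
URL_COUNTS = {
    "threads": 59_900,
    "nodes": 100,
    "users": 25_000,
    "tags": 15_000,
}

# Assumed stable crawl rate in pages per day (illustrative only).
PAGES_PER_DAY = 5_000


def days_to_crawl(url_count: int, pages_per_day: int) -> int:
    """Whole days needed to fetch url_count pages once at a fixed daily rate."""
    return math.ceil(url_count / pages_per_day)


def summarize(counts: dict[str, int], keep: set[str], pages_per_day: int) -> tuple[int, int]:
    """Compare crawl days for every listed URL vs. only the kept content types."""
    everything = sum(counts.values())
    kept = sum(n for kind, n in counts.items() if kind in keep)
    return days_to_crawl(everything, pages_per_day), days_to_crawl(kept, pages_per_day)


if __name__ == "__main__":
    all_days, kept_days = summarize(URL_COUNTS, {"threads", "nodes"}, PAGES_PER_DAY)
    # prints: all types: 20 days, nodes + threads only: 12 days
    print(f"all types: {all_days} days, nodes + threads only: {kept_days} days")
```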

Similar threads

- Replies: 10, Views: 191
- Replies: 5, Views: 1K
- Replies: 3, Views: 828
- Replies: 7, Views: 2K