While I am in favor of this suggestion, I obviously don't condone OP's rudeness at all. With that out of the way...

> As far as XenForo goes, it’s about as efficient as it can be without going down the road of compromising the user experience. If your web server is being overloaded, it’s not because thread pages aren’t cached. A run-of-the-mill web server can do ~50M uncached thread views per day. If you are approaching that number, it probably makes more sense to get a second web server than it does to make the website (even slightly) out of date for guests. However, if you really wanted to do that, you can already do it with no backend changes or infrastructure requirements with Cloudflare (and you don't need to worry about the cost or resources involved with running a backend cache system).

Maybe I'm misunderstanding this, but I still don't see how it addresses the original suggestion's underlying benefits...

1. The problem isn't just CPU load on the server(s); it's also the response time real users see, which is bounded by network latency

Even if XenForo were infinitely fast at serving a cached guest page, a user who is geographically far from your server still pays at least one request round trip, which can regularly be in the 200 ms range. In practice XF might take up to around 100 ms (still respectable; I'm not blaming XF at all here, it can't bypass physics), which means response times of up to 300 ms on the user's side. And that's a kind estimate: the longer the network path, the more chances of some random hiccup along the way.

If you do cache pages at the edge, you can instead get real-user response times consistently under 50 ms (at least for popular threads). A model where every request hits the origin simply can't compete with that.
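The arithmetic above can be made explicit with a toy model. This is only a sketch: the 200 ms round trip and 100 ms server time come from the estimates above, while the 30 ms edge round trip and the 90% hit ratio are assumed illustrative figures, and a cache miss is approximated as the edge hop plus the full user-to-origin path.

```python
def origin_latency_ms(rtt_ms: float, server_ms: float) -> float:
    """Every request pays a full round trip to the origin plus server processing."""
    return rtt_ms + server_ms


def edge_latency_ms(hit_ratio: float, edge_rtt_ms: float,
                    rtt_ms: float, server_ms: float) -> float:
    """Expected latency when a fraction `hit_ratio` of requests is served at the edge.

    A hit only costs the short round trip to the nearby edge node. A miss is
    approximated as the edge hop plus the whole origin path (an assumption).
    """
    hit = edge_rtt_ms
    miss = edge_rtt_ms + origin_latency_ms(rtt_ms, server_ms)
    return hit_ratio * hit + (1 - hit_ratio) * miss


print(origin_latency_ms(200, 100))                  # -> 300
print(round(edge_latency_ms(0.9, 30, 200, 100)))    # -> 60
```

Even with one request in ten still going to the origin, the average drops to roughly a fifth of the always-origin case, and cache hits themselves never pay the long path at all.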

2. Even setting that aside, and even with multiple servers, XenForo still spends CPU processing requests for cached pages

Yes, it quickly goes to Redis (or whatever cache backend one uses), but that isn't "free" even on a cache hit. It might not be much, but it's still networking and compute that is entirely unnecessary, at least when slightly stale guest pages are an acceptable tradeoff for the platform operator.
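To make point 2 concrete, here's a toy model of a guest-only edge cache with a TTL (the TTL being the staleness the operator accepts). The class, its methods and the cookie names in `SESSION_COOKIES` are hypothetical; the only point is that a hit returns without calling the origin at all, so no PHP, no Redis lookup and no backend network hop.

```python
import time
from typing import Callable, Dict, Tuple

# Hypothetical names for cookies that mark a logged-in visitor; the real
# names (and prefix) depend on the XenForo installation's configuration.
SESSION_COOKIES = ("xf_user", "xf_session")


class GuestEdgeCache:
    """Toy edge cache: anonymous GETs may be served up to `ttl_s` seconds stale."""

    def __init__(self, origin: Callable[[str], str], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.origin = origin
        self.ttl_s = ttl_s
        self.clock = clock
        self.store: Dict[str, Tuple[float, str]] = {}
        self.origin_calls = 0  # every call here costs origin CPU + network

    def cacheable(self, method: str, cookies: Dict[str, str]) -> bool:
        # Only anonymous GETs are eligible; any session goes to the origin.
        return method == "GET" and not any(c in cookies for c in SESSION_COOKIES)

    def handle(self, method: str, path: str, cookies: Dict[str, str]) -> str:
        if not self.cacheable(method, cookies):
            return self._fetch(path)
        now = self.clock()
        entry = self.store.get(path)
        if entry is not None and now - entry[0] < self.ttl_s:
            return entry[1]  # hit: answered without touching the origin at all
        body = self._fetch(path)
        self.store[path] = (now, body)
        return body

    def _fetch(self, path: str) -> str:
        self.origin_calls += 1
        return self.origin(path)


fake_now = [0.0]
cache = GuestEdgeCache(lambda p: f"rendered {p}", ttl_s=60, clock=lambda: fake_now[0])
for _ in range(1000):
    cache.handle("GET", "/threads/42/", {})
print(cache.origin_calls)  # -> 1
```

A thousand guest views within the TTL cost the origin a single render; with origin-side caching, each of them would still have reached PHP and Redis.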

3. But all this aside, one could argue that we can already force full caching today, that it's merely not recommended, and so there's no need for this suggestion. Except that's not quite true.

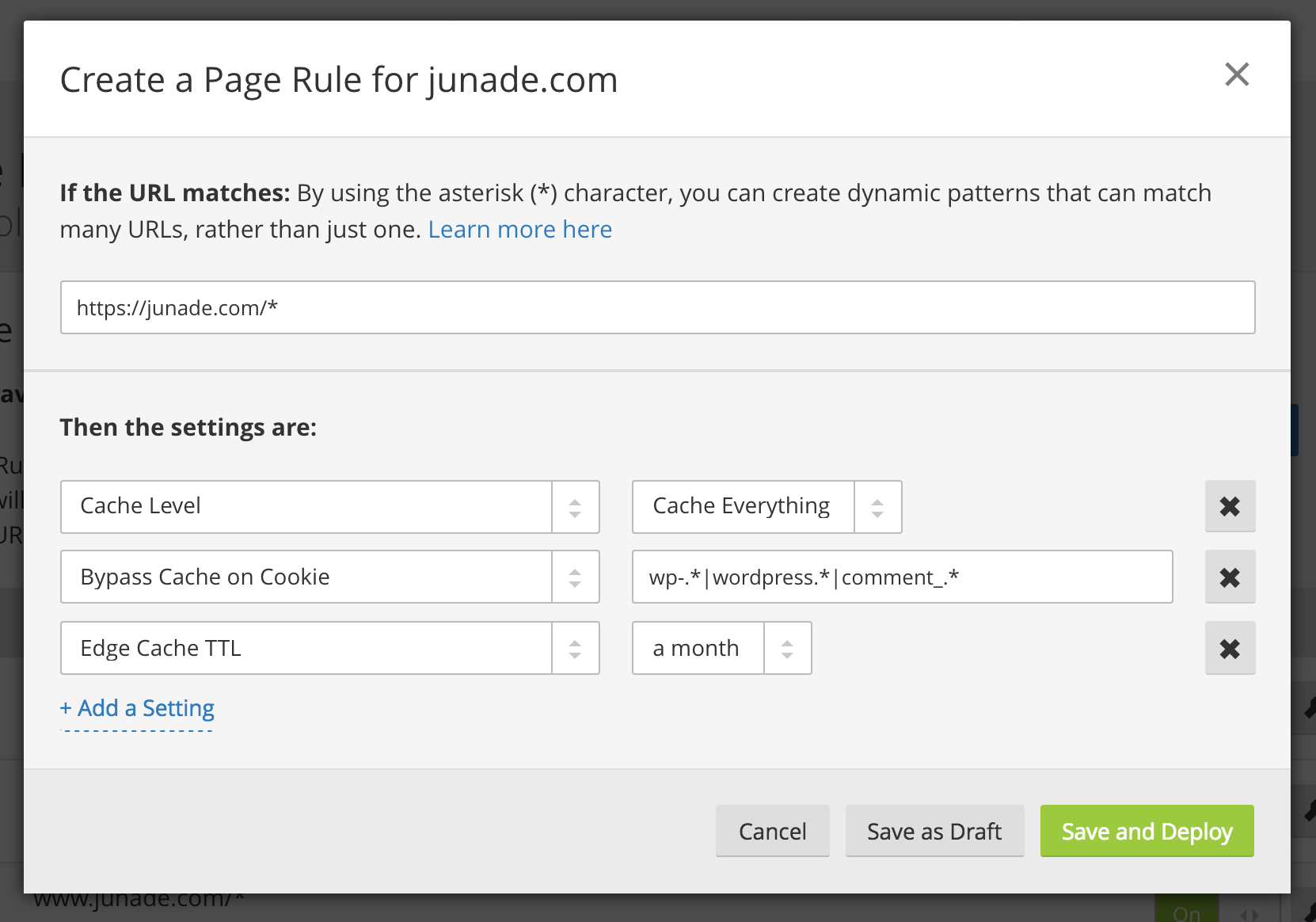

> However, if you really wanted to do that, you can already do it with no backend changes or infrastructure requirements with Cloudflare (and you don't need to worry about the cost or resources involved with running a backend cache system).

As far as I know (and please do tell me if I missed something), this isn't true, because XF tries to set the xf_* cookies even for guests and then assumes it succeeded, specifically the xf_csrf one.

Since it assumes that guests already have those set by the time they start flows such as login (which you would of course leave uncached), this triggers errors like "you need to allow cookies...".

So if you do force full caching outside of XF, guests will actively run into errors when they try to perform other actions.
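The failure mode can be reproduced in a small simulation. This is a sketch under stated assumptions, not XenForo's code: the origin is assumed to issue an `xf_csrf` cookie to cookieless guests and embed the same token in the form (a double-submit style pairing), and the edge cache is assumed to store pages without their `Set-Cookie` header, since replaying one visitor's cookie to everyone would be wrong. All function and class names are hypothetical.

```python
import re
import secrets
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Response:
    body: str
    set_cookies: Dict[str, str] = field(default_factory=dict)


def origin_guest_page(cookies: Dict[str, str]) -> Response:
    # Issue a CSRF cookie to cookieless guests and embed the same token in the form.
    token = cookies.get("xf_csrf") or secrets.token_hex(8)
    resp = Response(body=f"<input name='_xfToken' value='{token}'>")
    if "xf_csrf" not in cookies:
        resp.set_cookies["xf_csrf"] = token
    return resp


def origin_login(cookies: Dict[str, str], form_token: str) -> str:
    # Hypothetical check: no cookie at all -> "allow cookies" style error.
    if "xf_csrf" not in cookies:
        return "error: cookies required"
    return "ok" if cookies["xf_csrf"] == form_token else "error: token mismatch"


class FullPageEdgeCache:
    """Caches the first guest response; stored copies carry no Set-Cookie."""

    def __init__(self) -> None:
        self.stored: Optional[Response] = None

    def get(self, cookies: Dict[str, str]) -> Response:
        if self.stored is None:
            fresh = origin_guest_page(cookies)
            self.stored = Response(body=fresh.body)  # Set-Cookie stripped
            return fresh  # only the very first visitor receives the cookie
        return self.stored


def visit_then_login(edge: FullPageEdgeCache) -> str:
    cookies: Dict[str, str] = {}       # a brand-new guest
    resp = edge.get(cookies)
    cookies.update(resp.set_cookies)   # browser keeps whatever it was sent
    match = re.search(r"value='([0-9a-f]+)'", resp.body)
    assert match is not None
    return origin_login(cookies, match.group(1))  # login itself is uncached


edge = FullPageEdgeCache()
print(visit_then_login(edge))  # -> ok (cache miss, cookie was set)
print(visit_then_login(edge))  # -> error: cookies required (cache hit)
```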

There are a few solutions to that:

- support issuing CSRF cookies on demand, i.e. only when the user is about to do something that actually needs one. For example, retried form submissions: if a CSRF cookie is present, the submission goes through; otherwise the server sets the CSRF cookie and asks for the form to be resubmitted

- support replacing CSRF cookies with some alternative stateless mechanism

- support disabling them, leaving the operator in charge of finding a secure way to operate afterwards (I wouldn't really support this one, since we all know what happens when you expect everyone to do their due diligence regarding security)
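The first option (on-demand CSRF cookies with a retried submission) could look roughly like the sketch below. It is hypothetical, not an existing XenForo mechanism: the handler names, the `Reply` shape and the single-retry policy are assumptions. One caveat worth stating: the retry has to be performed by same-origin script (or an explicit user click), never auto-submitted from a page, otherwise a forged cross-site POST could be upgraded into a valid one.

```python
import hmac
import secrets
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Reply:
    status: str                     # "accepted" or "retry"
    token: str = ""                 # token the client should resubmit with
    set_cookies: Dict[str, str] = field(default_factory=dict)


def handle_submission(cookies: Dict[str, str], form_token: str) -> Reply:
    """Hypothetical server handler: accept only if cookie and form token match."""
    cookie_token = cookies.get("xf_csrf")
    if cookie_token and form_token and hmac.compare_digest(
            cookie_token.encode(), form_token.encode()):
        return Reply(status="accepted")
    # No cookie yet (e.g. the guest only ever saw edge-cached pages), or a stale
    # token from cached HTML: issue/reuse a cookie and ask for a resubmission.
    token = cookie_token or secrets.token_urlsafe(16)
    set_cookies = {} if cookie_token else {"xf_csrf": token}
    return Reply(status="retry", token=token, set_cookies=set_cookies)


def submit_with_retry(cookies: Dict[str, str], form_token: str) -> str:
    """Client side (assumed same-origin JS): at most one retry with the new token."""
    reply = handle_submission(cookies, form_token)
    if reply.status == "retry":
        cookies.update(reply.set_cookies)
        reply = handle_submission(cookies, reply.token)
    return reply.status


guest_cookies: Dict[str, str] = {}
print(submit_with_retry(guest_cookies, "token-from-cached-html"))  # -> accepted
```

The design choice here is that guests never need a cookie just to read pages, so every read can be served from the edge; the cookie only appears at the first state-changing action, at the cost of one extra round trip for that action.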