I do have a slightly different opinion here. Clearly, for DDoS protection you need something that requests have to pass through before they reach the actual forum, and it had better be on a different host. One may call it netfilter, firewall, load balancer, reverse proxy, gateway or whatever name one likes - the marketing of solution providers has scrambled those names chaotically anyway. One can use Cloudflare for that (and many people do).

Don't forget that identifying and processing bots in the forum software will require increasing levels of resources - and thus cost to you directly. Outsourcing that to a third party (Cloudflare or otherwise) prevents the traffic from even getting to your server, so fewer resources are needed to process it. This is why DDoS protection should be done externally - not on-server.

PS. If you want to talk OSI model stuff - bots are generally an application layer thing - they use HTTP and DNS and act like regular web browsers. Network layer stuff is much, much lower in the stack and more typically associated with firewalls and mitigating DDoS attacks rather than higher-level bot traffic.

Obviously, this kind of "thingy" can block or reroute any network traffic you want, classically based on source and destination. That is what a classic firewall does. With stateful inspection (developed by Check Point in the 1990s) you add at least some intelligence to that relatively primitive process, and with deep packet inspection even more - the latter unfortunately breaks privacy. And indeed it needs a considerable amount of computing resources under heavy load.
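To make the difference between plain source/destination filtering and stateful inspection a bit more tangible, here is a very simplified Python sketch. The `Packet` and `StatefulFilter` names are made up for illustration, and real firewalls track far more (TCP state machine, timeouts, related connections), so treat it as a toy model only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str            # source IP address
    sport: int          # source port
    dst: str            # destination IP address
    dport: int          # destination port
    syn: bool = False   # True for a connection-opening packet


class StatefulFilter:
    """Toy model: new connections are allowed only to permitted ports,
    later packets only if they belong to an already tracked connection."""

    def __init__(self, allowed_ports):
        self.allowed_ports = set(allowed_ports)
        self.connections = set()  # tracked (src, sport, dst, dport) tuples

    def accept(self, pkt: Packet) -> bool:
        key = (pkt.src, pkt.sport, pkt.dst, pkt.dport)
        if pkt.syn:
            # Opening packet: decide by destination port, then remember it.
            if pkt.dport in self.allowed_ports:
                self.connections.add(key)
                return True
            return False
        # Follow-up packet: only accepted for a known connection.
        return key in self.connections
```

A stateless filter would only ever look at the fields of the single packet in front of it; the `connections` set is the "state" that gives the filter its extra bit of intelligence.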

While it is highly desirable, for a bunch of reasons, to do your filtering on a different physical host than the one that runs your forum, this seems pretty demanding for small forums/websites and the people running them. If you rule out Cloudflare (e.g. for privacy reasons) like I do and can live without DDoS protection (like I do), you can still have the rest and run your firewall on the same machine, maybe in the form of Docker instances or virtual hosts, or even directly on the same host. So you could have, for example, this setup:

incoming traffic -> iptables (or similar) -> apache/nginx -> XenForo

If you are on shared hosting like me, you typically can't use or configure iptables or the web server config and are limited to things like .htaccess or XenForo itself. While not optimal from a performance and architecture point of view, this is sufficient in many cases. It is annoying to maintain, though: it does not scale properly (many rules lead to performance issues) and the logging is barely useful, so bug fixing quickly becomes a nightmare.

However: The firewall or gateway, no matter where it runs, follows rules. Some of those are easy (like only allowing certain ports or not allowing certain IP addresses), some a little more complex. And this is where something comes into play that a solution provider would probably market as "threat intelligence": The rules the firewall executes have to come from somewhere. There are some which you can define without thinking, and Cloudflare claims to do a lot of magic (and maybe it does). A lot of this magic comes from statistical data, based on the huge number of hosts that use Cloudflare. This way Cloudflare is able to discover patterns in worldwide internet traffic and react to them. This is way more than you can do yourself and, no doubt, if they do their homework properly, they can offer good protection in many cases.
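The "easy" rules mentioned above could look roughly like this in Python. It is only a sketch: the blocked network and the allowed ports are placeholder values, and `is_allowed` is a hypothetical helper, not part of any firewall product.

```python
import ipaddress

# Placeholder rule set for illustration (documentation address range).
BLOCKED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]
ALLOWED_PORTS = {80, 443}


def is_allowed(src_ip: str, dst_port: int) -> bool:
    """Deny blocked source networks first, then allow only known ports."""
    addr = ipaddress.ip_address(src_ip)
    if any(addr in net for net in BLOCKED_NETWORKS):
        return False
    return dst_port in ALLOWED_PORTS
```

The interesting question is not how such a check is evaluated but who fills `BLOCKED_NETWORKS`, which is exactly the "threat intelligence" part.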

However: What they probably can't and don't do is discover patterns specific to your host. For example, the biggest threat for forums at the moment is AI bots that scrape forum content. Yesterday I had a couple of thousand requests from Vietnam, coming from a couple of hundred different IPs, slowly but steadily crawling through my forum, using a ton of different user agents until I locked them out.

Code:

"Mozilla/5.0(iPhone;CPUiPhoneOS17_3likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)AvastSecureBrowser/5.3.1Mobile/15E148

"Mozilla/5.0(Macintosh;IntelMacOSX10_15)AppleWebKit/605.1.15(KHTML,likeGecko)Version/17.0DuckDuckGo/7Safari/605.1.15"10234684

"Mozilla/5.0(Linux;Android11;motoe20Build/RONS31.267-94-14)AppleWebKit/537.36(KHTML,likeGecko)Chrome/122.0.6261.64MobileSafari/537.36"

"Mozilla/5.0(WindowsNT10.0;Win64;x64;rv:123.0)Gecko/20100101Firefox/123.0"9894684

"Mozilla/5.0(WindowsNT10.0;Win64;x64)AppleWebKit/537.36(KHTML,likeGecko)Chrome/121.0.0.0Safari/537.36Edg/121.0.0.0Agency/98.8.8188.80"

"Mozilla/5.0(iPhone;CPUiPhoneOS17_1likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)EdgiOS/119.0.2151.65Version/17.0

"Mozilla/5.0(Linux;Android10;K)AppleWebKit/537.36(KHTML,likeGecko)Chrome/122.0.0.0MobileSafari/537.36"10564684

"Mozilla/5.0(iPhone;CPUiPhoneOS17_0likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)EdgiOS/116.0.1938.72Version/17.0

"Mozilla/5.0(Linux;Android10;K)AppleWebKit/537.36(KHTML,likeGecko)Chrome/122.0.0.0MobileSafari/537.36"10064684

"Mozilla/5.0(iPad;CPUOS17_0likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)Version/17.0Mobile/15E148Safari/604.1"

"Mozilla/5.0(iPhone;CPUiPhoneOS17_0likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)EdgiOS/116.0.1938.79Version/17.0

"Mozilla/5.0(Linux;Android10;K)AppleWebKit/537.36(KHTML,likeGecko)Chrome/122.0.0.0MobileSafari/537.36"10184684

"Mozilla/5.0(iPhone;CPUiPhoneOS17_0likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)EdgiOS/119.0.2151.105Version/17.0

"Mozilla/5.0(iPhone;CPUiPhoneOS17_1likeMacOSX)AppleWebKit/605.1.15(KHTML,likeGecko)EdgiOS/121.0.2277.107Version/17.0

"Mozilla/5.0(WindowsNT10.0;Win64;x64)AppleWebKit/537.36(KHTML,likeGecko)Chrome/121.0.0.0Safari/537.36Edg/121.0.0.0"

"Mozilla/5.0(Macintosh;IntelMacOSX10_12_5;rv:123.0esr)Gecko/20100101Firefox/123.0esr"

I was able to detect them by finding the pattern: loads of visits from Vietnam (unusual for my German-language forum) that visited old threads in parallel from different IPs, often with different IPs visiting the same threads. In this case it was easy, as they were all coming from VNPT Corp (Vietnam Posts and Telecommunications Group), mostly ASN45899, using static and dynamic IPs. While this pattern is very uncommon for my forum, it would probably not be uncommon for other forums or websites and would thus stay below the radar of Cloudflare - all the more so as in absolute numbers it was not a huge "attack". Thus with Cloudflare, due to a lack of matching rules, I would probably have gone unprotected.
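The pattern described above (many IPs from one network, many different user agents, and the same threads visited by several IPs) could be checked automatically along these lines. This is a sketch under assumptions: the `Hit` record, the thresholds, and the idea that an ASN is already attached to each request (via some external IP-to-ASN lookup) are all mine and not something XenForo provides.

```python
from collections import defaultdict
from typing import NamedTuple


class Hit(NamedTuple):
    ip: str
    asn: int          # assumed to come from an external IP-to-ASN lookup
    user_agent: str
    thread_id: int


def suspicious_asns(hits, min_ips=100, min_agents=10, min_shared_threads=5):
    """Return ASNs with many IPs, many user agents, and several threads
    that were each visited by more than one IP of that ASN."""
    ips = defaultdict(set)
    agents = defaultdict(set)
    thread_ips = defaultdict(lambda: defaultdict(set))
    for h in hits:
        ips[h.asn].add(h.ip)
        agents[h.asn].add(h.user_agent)
        thread_ips[h.asn][h.thread_id].add(h.ip)

    flagged = []
    for asn in ips:
        # Threads that more than one IP from this ASN has requested.
        shared = sum(1 for s in thread_ips[asn].values() if len(s) > 1)
        if (len(ips[asn]) >= min_ips
                and len(agents[asn]) >= min_agents
                and shared >= min_shared_threads):
            flagged.append(asn)
    return sorted(flagged)
```

The default thresholds are arbitrary; what counts as "unusual" depends entirely on the forum's normal traffic, which is the whole point of doing this per host.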

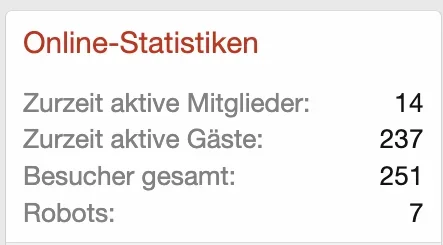

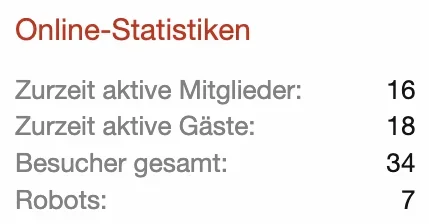

Manually on the other hand I saw an amount of guests on my forum that was unusually high in relation to registred users:

After blocking ASN45899 it went down instantly within a couple of minutes:

However: This needed active monitoring and manual work. I'd love to have automation for this kind of thing - no matter where the actual blocking happens. Cloudflare on its own can't do that, as they probably cannot identify the pattern I discovered.
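Even the manual observation (far more guests than usual in relation to registered users) could be turned into a simple automatic alarm. A minimal sketch, assuming the forum can report current guest and member counts; `guest_ratio_alert`, the baseline and the factor are hypothetical and would have to be tuned per forum.

```python
def guest_ratio_alert(guests: int, members: int,
                      baseline_ratio: float, factor: float = 3.0) -> bool:
    """Return True when guests per online member exceed the usual
    ratio (`baseline_ratio`) by more than `factor`."""
    ratio = guests / max(members, 1)  # avoid division by zero
    return ratio > baseline_ratio * factor
```

With a usual ratio of 5 guests per member, 900 guests alongside 30 members (ratio 30) would raise the alarm, while 120 guests (ratio 4) would not.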

So a little more intelligence within XenForo to identify threats and then trigger the appropriate actions, not necessarily within XenForo but e.g. within Cloudflare, iptables etc., would be helpful. Maybe a bit like fail2ban works, but based on the actual forum and its content. This could be an area where AI could actually be helpful.
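The "trigger an action elsewhere" half of that idea might look like the following sketch, which turns a list of network prefixes into iptables commands without executing them. Where the prefixes for an unwanted network come from (for example an ASN-to-prefix lookup) is left open and is an assumption on my side; `iptables_drop_commands` is a made-up helper name.

```python
import ipaddress


def iptables_drop_commands(cidrs):
    """Build (but do not run) one iptables DROP rule per IPv4 network."""
    commands = []
    for cidr in cidrs:
        # Raises ValueError for malformed input; strict=False normalizes
        # host bits, e.g. 203.0.113.7/24 -> 203.0.113.0/24.
        net = ipaddress.ip_network(cidr, strict=False)
        if net.version != 4:
            continue  # IPv6 would need ip6tables; skipped in this sketch
        commands.append(
            ["iptables", "-A", "INPUT", "-s", str(net), "-j", "DROP"]
        )
    return commands
```

Returning argument lists instead of running anything keeps the detection side (forum) and the enforcement side (gateway) separate, so the same output could just as well be translated for a different gateway.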

Yes, this would cost resources - but it would bring a huge benefit that can't be gained otherwise, and I would be willing to pay that price.

Regarding the bot detection in XenForo: I like it, but it is clearly very far from perfect. It seems to rely simply on the user agent that is submitted, and it does not recognize a load of bots. This is obviously not your fault, but it is simply not sufficient, and you can't do much with it anyway, and even less in a comfortable way.

What I'd like, for example, would be to be able to flag certain bots as "ok", others as "questionable" and others as "unwanted". These could e.g. show up with green/yellow/red bubbles in the list and/or be filterable. If the "unwanted" ones could be forwarded to some instance (like with a "block bot" button) that would automatically create a rule for them in the gateway (whatever gateway one uses), it would become really useful.
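As a rough illustration of the flagging idea, here is a small Python sketch. Everything in it is hypothetical: `BotStatus`, the `BOT_FLAGS` table and the "ExampleScraper" entry are invented for the example and do not reflect how XenForo stores or detects bots.

```python
from enum import Enum


class BotStatus(Enum):
    OK = "green"
    QUESTIONABLE = "yellow"
    UNWANTED = "red"


# Hypothetical admin-maintained table: user agent substring -> status.
BOT_FLAGS = {
    "Googlebot": BotStatus.OK,
    "ExampleScraper": BotStatus.UNWANTED,
}


def classify(user_agent: str) -> BotStatus:
    """Return the admin's flag for a user agent; unknown ones are yellow."""
    ua = user_agent.lower()
    for needle, status in BOT_FLAGS.items():
        if needle.lower() in ua:
            return status
    return BotStatus.QUESTIONABLE


def bots_to_block(user_agents):
    """Select the user agents a "block bot" action would hand to the gateway."""
    return [ua for ua in user_agents if classify(ua) is BotStatus.UNWANTED]
```

Since the user agent is trivially faked, a table like this only helps with bots that identify themselves honestly; the rest would still need pattern-based detection.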

I've recently learned about residential proxies and still lack an idea of how to get rid of those - this seems to be an issue that a solution like Cloudflare could possibly detect and block more easily than others.
