DeltaHF


I just moved my site to SSL last night, and now the Image Proxy crashes PHP-FPM whenever it has trouble pulling images from problematic origin servers. I've had trouble with it before (it's always been a bit temperamental for me), but the impact has become much more severe since the SSL conversion. This is a very large, high-traffic forum (10.2 million posts, ~300k daily page views) with some extremely image-heavy threads, so we push the Image Proxy hard.

PHP-FPM has crashed about 8 times in the past 12 hours. There are no errors in the PHP-FPM error log. The server runs a LEMP web stack configured by @eva2000's Centminmod. Any help or advice would be greatly appreciated.

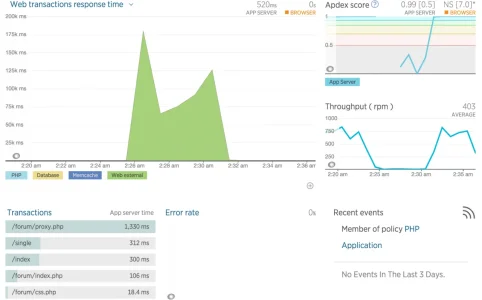

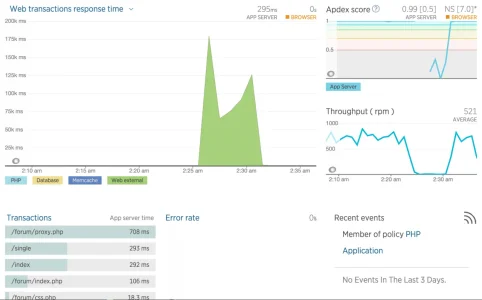

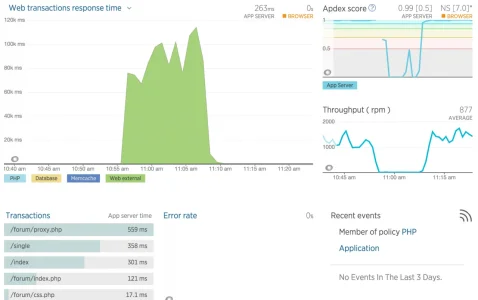

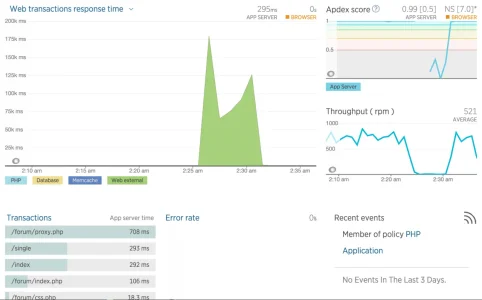

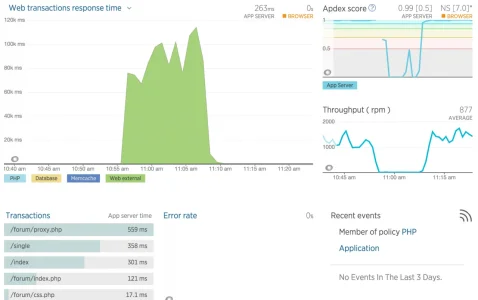

NewRelic graphs from two of the most severe incidents:


My php-fpm.conf:

```
log_level = debug
pid = /var/run/php-fpm/php-fpm.pid
error_log = /var/log/php-fpm/www-error.log
emergency_restart_threshold = 10
emergency_restart_interval = 1m
process_control_timeout = 10s

[www]
user = nginx
group = nginx

listen = 127.0.0.1:9000
listen.allowed_clients = 127.0.0.1
;listen.backlog = -1
;listen = /tmp/php5-fpm.sock
;listen.owner = nobody
;listen.group = nobody
;listen.mode = 0666

pm = static
pm.max_children = 8
; Default Value: min_spare_servers + (max_spare_servers - min_spare_servers) / 2
pm.start_servers = 8
pm.min_spare_servers = 8
pm.max_spare_servers = 8
pm.max_requests = 100

; PHP 5.3.9 setting
; The number of seconds after which an idle process will be killed.
; Note: Used only when pm is set to 'ondemand'
; Default Value: 10s
pm.process_idle_timeout = 10s;

rlimit_files = 65536
rlimit_core = 0

; The timeout for serving a single request after which the worker process will
; be killed. This option should be used when the 'max_execution_time' ini option
; does not stop script execution for some reason. A value of '0' means 'off'.
; Available units: s(econds)(default), m(inutes), h(ours), or d(ays)
; Default Value: 0
;request_terminate_timeout = 0
;request_slowlog_timeout = 0
slowlog = /var/log/php-fpm/www-slow.log

pm.status_path = /phpstatus
ping.path = /phpping
ping.response = pong

; Limits the extensions of the main script FPM will allow to parse. This can
; prevent configuration mistakes on the web server side. You should only limit
; FPM to .php extensions to prevent malicious users to use other extensions to
; execute php code.
; Note: set an empty value to allow all extensions.
; Default Value: .php
security.limit_extensions = .php .php3 .php4 .php5
; catch_workers_output = yes
php_admin_value[error_log] = /var/log/php-fpm/www-php.error.log
```

PHP-FPM Status (about 10 minutes after a crash and manual restart):

```
pool: www
process manager: static
start time: 26/Feb/2015:19:48:23 +0000
start since: 428
accepted conn: 9846
listen queue: 0
max listen queue: 129
listen queue len: 128
idle processes: 7
active processes: 1
total processes: 8
max active processes: 8
max children reached: 0
slow requests: 0
```

I have also modified the timeout in /library/XenForo/Model/ImageProxy.php from 10 to 3 seconds, from line 426:

```php
$response = XenForo_Helper_Http::getClient($requestUrl, array(
    'output_stream' => $streamUri,
    'timeout' => 3 // lowered from 10 seconds
))->setHeaders('Accept-encoding', 'identity')->request('GET');
```
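One thing I'm not sure the change above achieves: as far as I understand it (an assumption I haven't verified against XenForo's HTTP helper or whichever Zend_Http_Client adapter it ends up using), a `timeout` like this bounds the connection attempt and each individual read, not the whole download. If so, an origin that trickles bytes slowly could still tie up a PHP-FPM worker for far longer than 3 seconds. A minimal sketch of the difference, written as a hypothetical helper `copyWithDeadline()` that is not part of XenForo or Zend: it copies one stream to another but gives up once a total wall-clock budget or a byte limit is exceeded.

```php
<?php
// Hypothetical helper (not part of XenForo or Zend): copy everything from
// $in to $out, but give up once a total wall-clock budget or a size limit
// is exceeded. Returns the number of bytes copied, or false on failure.
function copyWithDeadline($in, $out, float $maxSeconds, int $maxBytes)
{
    $start = microtime(true);
    $copied = 0;

    while (!feof($in)) {
        $remaining = $maxSeconds - (microtime(true) - $start);
        if ($remaining <= 0) {
            return false; // total time budget used up
        }

        // Never let a single read block longer than the time that is left.
        // This only has an effect on socket streams; for other stream types
        // stream_set_timeout() just returns false.
        $seconds = (int) floor($remaining);
        stream_set_timeout($in, $seconds, (int) (($remaining - $seconds) * 1000000));

        $chunk = fread($in, 8192);
        $meta = stream_get_meta_data($in);
        if ($chunk === false || !empty($meta['timed_out'])) {
            return false; // read error or per-read timeout
        }

        $copied += strlen($chunk);
        if ($copied > $maxBytes) {
            return false; // response larger than we are willing to proxy
        }
        fwrite($out, $chunk);
    }

    return $copied;
}
```

The `$maxSeconds` and `$maxBytes` limits are illustrative parameters. Wiring something like this into the Image Proxy would mean changing how XenForo reads the response body, which I haven't tried.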

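The PHP-FPM status output above can also be checked mechanically. Below is a small sketch using hypothetical helper names (`parseFpmStatus()` and `backlogWasFull()`, not part of PHP-FPM or XenForo). It parses the plain-text page served at `pm.status_path` (`/phpstatus` in this config) and checks whether `max listen queue` ever reached `listen queue len`. The PHP-FPM status documentation describes these fields as the maximum number of pending connections since FPM started and the size of the pending-connection queue. Under that reading, 129 against 128 would suggest the backlog filled up within the 428 seconds since the restart. That is my interpretation, not something I have confirmed.

```php
<?php
// Hypothetical helper: parse the plain-text PHP-FPM status page into an
// associative array of "field name" => "value" strings.
function parseFpmStatus(string $text): array
{
    $fields = [];
    foreach (preg_split('/\R/', trim($text)) as $line) {
        // Split on the first colon only: values such as "start time"
        // contain colons themselves.
        $parts = explode(':', $line, 2);
        if (count($parts) === 2) {
            $fields[trim($parts[0])] = trim($parts[1]);
        }
    }
    return $fields;
}

// True when the pending-connection queue has been full at some point since
// FPM started, i.e. "max listen queue" reached "listen queue len".
function backlogWasFull(array $status): bool
{
    if (!isset($status['max listen queue'], $status['listen queue len'])) {
        return false;
    }
    $len = (int) $status['listen queue len'];
    return $len > 0 && (int) $status['max listen queue'] >= $len;
}
```

Feeding it the status output above, `backlogWasFull(parseFpmStatus($text))` returns `true`, because 129 is at least 128.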