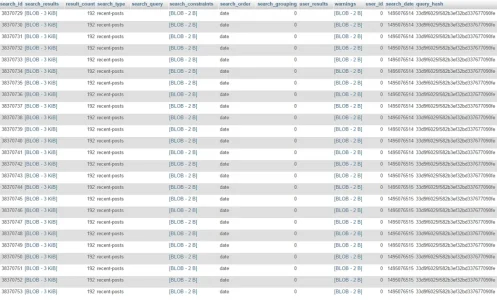

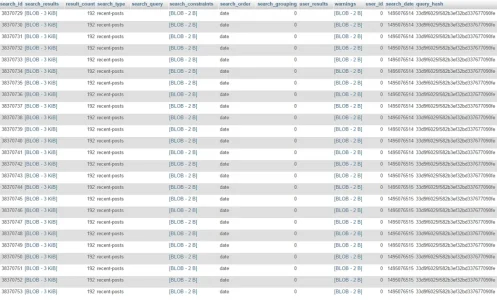

Googlebot has been hitting our Recent Posts page very aggressively (multiple times per second). This puts a heavy load on our database, because XenForo adds a record to the 'search' table each time Googlebot requests the page.

We migrated to a new server to cope with this load. However, Google has kept increasing its crawl rate, recently averaging more than 10 requests per second, and this has created a new problem. When the 'Daily Clean Up' cron tries to purge old entries from the 'search' table, it executes a query that needs more than 100 seconds to complete. When this query runs, either the Elasticsearch service fails or, even worse, our whole database crashes.

How can we deal with this issue? Is it possible to stop new entries from being added to the 'search' table when the visitor is Googlebot?
