I've spent the last two hours doing some research on this. It's completely weird.

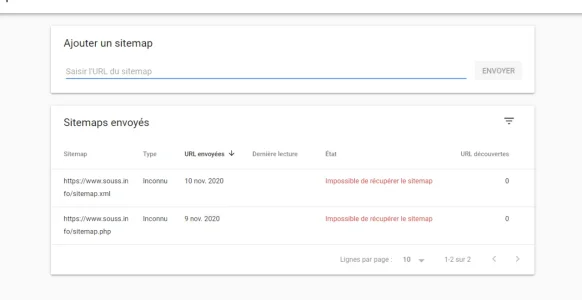

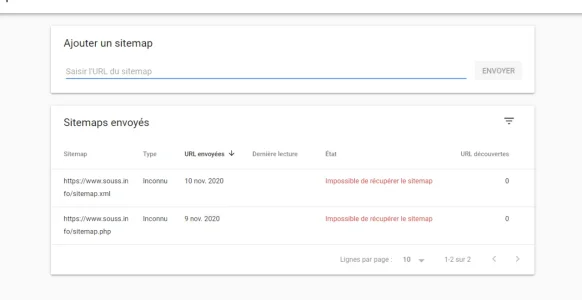

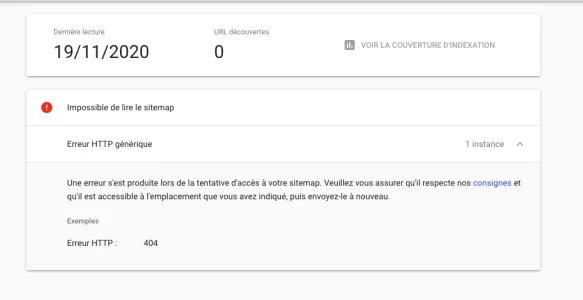

I took the automatically generated sitemap-1.xml, edited it manually and deleted the last 5000 URLs, saved it under a different name and submitted it - it failed. I then cut out the next 5000 URLs, saved it under a different name, submitted it - it failed.

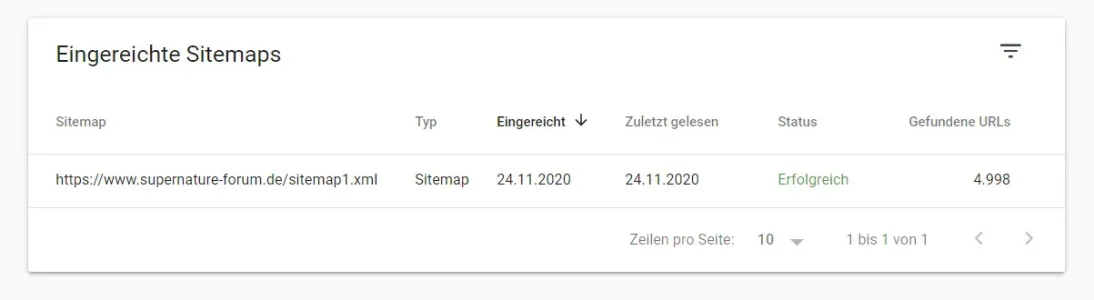
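For anyone who wants to repeat the trimming step without editing the XML by hand, here is a minimal sketch. It assumes the sitemap is a standard `urlset` file in the sitemaps.org 0.9 namespace. The function name `truncate_sitemap` is made up for illustration and is not part of any tool mentioned here.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap files (assumed to match the generated file).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def truncate_sitemap(xml_text: str, keep: int) -> str:
    """Return the sitemap with only its first `keep` <url> entries (hypothetical helper)."""
    # Serialize the sitemap namespace as the default one, without an ns0: prefix.
    ET.register_namespace("", SITEMAP_NS)
    root = ET.fromstring(xml_text)
    entries = root.findall(f"{{{SITEMAP_NS}}}url")
    # Drop every entry after the first `keep`.
    for entry in entries[keep:]:
        root.remove(entry)
    return ET.tostring(root, encoding="unicode")
```

Calling it with the file's contents and `keep=5000` would give the "first 5000 URLs" variant, which can then be saved under a new name and submitted.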

I repeated this until I had a file with only the first 5000 URLs from the original file. I submitted that - and finally,

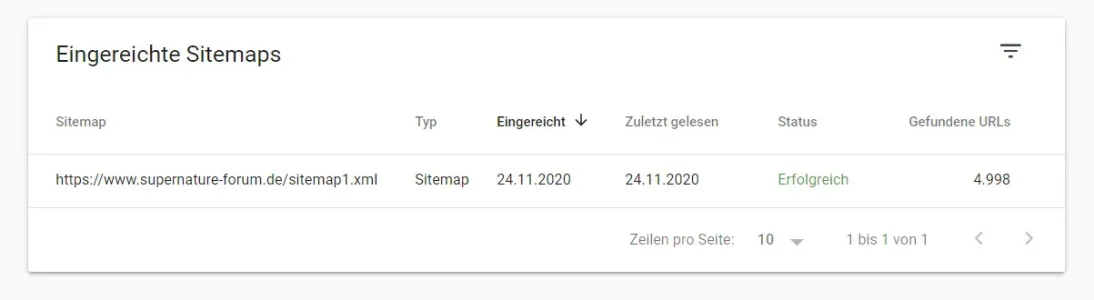

success!

But that seemed too odd to be true. I couldn't believe that Google is only able to process a maximum of 5000 URLs.

So I took URLs 5001-10000 from the original file, submitted this as a separate sitemap - and it failed again.

It seemed clear to me now that there must be a problem with the content of the sitemap itself.

I took the file with the 5000 URLs that failed and cut it down in steps of 500 entries, as before.

To cut a long story short: I ended up with a failed file containing only 15 URLs.
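The same narrowing-down can be done with fewer submissions by halving the list each time (a binary search) instead of trimming in fixed steps. This is a rough sketch, not what I actually did. It assumes exactly one URL is responsible for the rejection. `is_accepted` is a hypothetical callback standing in for "submit this list as a sitemap and see whether it is processed", which in practice is a manual step.

```python
from typing import Callable, List


def narrow_down(urls: List[str], is_accepted: Callable[[List[str]], bool]) -> List[str]:
    """Halve a rejected URL list until only one suspect URL remains.

    Assumes `urls` as a whole is rejected and that a single URL causes it.
    """
    suspects = list(urls)
    while len(suspects) > 1:
        mid = len(suspects) // 2
        first_half = suspects[:mid]
        # If the first half is rejected, the culprit is in it; otherwise it is in the rest.
        if not is_accepted(first_half):
            suspects = first_half
        else:
            suspects = suspects[mid:]
    return suspects
```

With a list of n URLs this needs about log2(n) checks. If more than one URL triggers the rejection, the sketch still ends on a single URL, but each further offender only turns up on a re-run after the previous one has been removed.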

I manually inspected the URLs; they all work. I then discovered that there was a URL containing the word "sex".

I removed it - and the file was processed.

Is it possible that Google rejects a sitemap file based on a "bad word filter"?